Related Research Articles

Cognitive science is the interdisciplinary, scientific study of the mind and its processes with input from linguistics, psychology, neuroscience, philosophy, computer science/artificial intelligence, and anthropology. It examines the nature, the tasks, and the functions of cognition. Cognitive scientists study intelligence and behavior, with a focus on how nervous systems represent, process, and transform information. Mental faculties of concern to cognitive scientists include language, perception, memory, attention, reasoning, and emotion; to understand these faculties, cognitive scientists borrow from fields such as linguistics, psychology, artificial intelligence, philosophy, neuroscience, and anthropology. The typical analysis of cognitive science spans many levels of organization, from learning and decision to logic and planning; from neural circuitry to modular brain organization. One of the fundamental concepts of cognitive science is that "thinking can best be understood in terms of representational structures in the mind and computational procedures that operate on those structures."

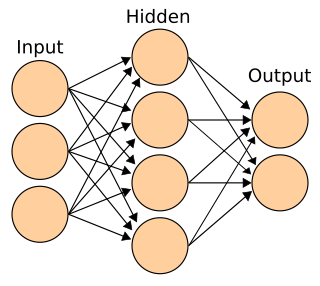

Artificial neural networks (ANNs), usually simply called neural networks (NNs) or neural nets, are computing systems inspired by the biological neural networks that constitute animal brains.

Connectionism refers to both an approach in the field of cognitive science that hopes to explain mental phenomena using artificial neural networks (ANN) and to a wide range of techniques and algorithms using ANNs in the context of artificial intelligence to build more intelligent machines. Connectionism presents a cognitive theory based on simultaneously occurring, distributed signal activity via connections that can be represented numerically, where learning occurs by modifying connection strengths based on experience.

Computational neuroscience is a branch of neuroscience which employs mathematical models, computer simulations, theoretical analysis and abstractions of the brain to understand the principles that govern the development, structure, physiology and cognitive abilities of the nervous system.

In artificial intelligence, symbolic artificial intelligence is the term for the collection of all methods in artificial intelligence research that are based on high-level symbolic (human-readable) representations of problems, logic and search. Symbolic AI used tools such as logic programming, production rules, semantic nets and frames, and it developed applications such as knowledge-based systems, symbolic mathematics, automated theorem provers, ontologies, the semantic web, and automated planning and scheduling systems. The Symbolic AI paradigm led to seminal ideas in search, symbolic programming languages, agents, multi-agent systems, the semantic web, and the strengths and limitations of formal knowledge and reasoning systems.

Neuromorphic engineering, also known as neuromorphic computing, is the use of electronic circuits to mimic neuro-biological architectures present in the nervous system. A neuromorphic computer/chip is any device that uses physical artificial neurons to do computations. In recent times, the term neuromorphic has been used to describe analog, digital, mixed-mode analog/digital VLSI, and software systems that implement models of neural systems. The implementation of neuromorphic computing on the hardware level can be realized by oxide-based memristors, spintronic memories, threshold switches, transistors, among others. Training software-based neuromorphic systems of spiking neural networks can be achieved using error backpropagation, e.g., using Python based frameworks such as snnTorch, or using canonical learning rules from the biological learning literature, e.g., using BindsNet.

The language of thought hypothesis (LOTH), sometimes known as thought ordered mental expression (TOME), is a view in linguistics, philosophy of mind and cognitive science, forwarded by American philosopher Jerry Fodor. It describes the nature of thought as possessing "language-like" or compositional structure. On this view, simple concepts combine in systematic ways to build thoughts. In its most basic form, the theory states that thought, like language, has syntax.

A recurrent neural network (RNN) is a class of artificial neural networks where connections between nodes can create a cycle, allowing output from some nodes to affect subsequent input to the same nodes. This allows it to exhibit temporal dynamic behavior. Derived from feedforward neural networks, RNNs can use their internal state (memory) to process variable length sequences of inputs. This makes them applicable to tasks such as unsegmented, connected handwriting recognition or speech recognition. Recurrent neural networks are theoretically Turing complete and can run arbitrary programs to process arbitrary sequences of inputs.

A neural circuit is a population of neurons interconnected by synapses to carry out a specific function when activated. Neural circuits interconnect to one another to form large scale brain networks.

A neural network is a network or circuit of biological neurons, or, in a modern sense, an artificial neural network, composed of artificial neurons or nodes. Thus, a neural network is either a biological neural network, made up of biological neurons, or an artificial neural network, used for solving artificial intelligence (AI) problems. The connections of the biological neuron are modeled in artificial neural networks as weights between nodes. A positive weight reflects an excitatory connection, while negative values mean inhibitory connections. All inputs are modified by a weight and summed. This activity is referred to as a linear combination. Finally, an activation function controls the amplitude of the output. For example, an acceptable range of output is usually between 0 and 1, or it could be −1 and 1.

Neurophilosophy or philosophy of neuroscience is the interdisciplinary study of neuroscience and philosophy that explores the relevance of neuroscientific studies to the arguments traditionally categorized as philosophy of mind. The philosophy of neuroscience attempts to clarify neuroscientific methods and results using the conceptual rigor and methods of philosophy of science.

In the field of artificial intelligence, neuro-fuzzy refers to combinations of artificial neural networks and fuzzy logic.

Neural computation is the information processing performed by networks of neurons. Neural computation is affiliated with the philosophical tradition known as Computational theory of mind, also referred to as computationalism, which advances the thesis that neural computation explains cognition. The first persons to propose an account of neural activity as being computational was Warren McCullock and Walter Pitts in their seminal 1943 paper, A Logical Calculus of the Ideas Immanent in Nervous Activity. There are three general branches of computationalism, including classicism, connectionism, and computational neuroscience. All three branches agree that cognition is computation, however, they disagree on what sorts of computations constitute cognition. The classicism tradition believes that computation in the brain is digital, analogous to digital computing. Both connectionism and computational neuroscience do not require that the computations that realize cognition are necessarily digital computations. However, the two branches greatly disagree upon which sorts of experimental data should be used to construct explanatory models of cognitive phenomena. Connectionists rely upon behavioral evidence to construct models to explain cognitive phenomena, whereas computational neuroscience leverages neuroanatomical and neurophysiological information to construct mathematical models that explain cognition.

For holographic data storage, Holographic associative memory (HAM) is an information storage and retrieval system based on the principles of holography. Holograms are made by using two beams of light, called a "reference beam" and an "object beam". They produce a pattern on the film that contains them both. Afterwards, by reproducing the reference beam, the hologram recreates a visual image of the original object. In theory, one could use the object beam to do the same thing: reproduce the original reference beam. In HAM, the pieces of information act like the two beams. Each can be used to retrieve the other from the pattern. It can be thought of as an artificial neural network which mimics the way the brain uses information. The information is presented in abstract form by a complex vector which may be expressed directly by a waveform possessing frequency and magnitude. This waveform is analogous to electrochemical impulses believed to transmit information between biological neuron cells.

Computational neurogenetic modeling (CNGM) is concerned with the study and development of dynamic neuronal models for modeling brain functions with respect to genes and dynamic interactions between genes. These include neural network models and their integration with gene network models. This area brings together knowledge from various scientific disciplines, such as computer and information science, neuroscience and cognitive science, genetics and molecular biology, as well as engineering.

Spiking neural networks (SNNs) are artificial neural networks that more closely mimic natural neural networks. In addition to neuronal and synaptic state, SNNs incorporate the concept of time into their operating model. The idea is that neurons in the SNN do not transmit information at each propagation cycle, but rather transmit information only when a membrane potential – an intrinsic quality of the neuron related to its membrane electrical charge – reaches a specific value, called the threshold. When the membrane potential reaches the threshold, the neuron fires, and generates a signal that travels to other neurons which, in turn, increase or decrease their potentials in response to this signal. A neuron model that fires at the moment of threshold crossing is also called a spiking neuron model.

Hierarchical temporal memory (HTM) is a biologically constrained machine intelligence technology developed by Numenta. Originally described in the 2004 book On Intelligence by Jeff Hawkins with Sandra Blakeslee, HTM is primarily used today for anomaly detection in streaming data. The technology is based on neuroscience and the physiology and interaction of pyramidal neurons in the neocortex of the mammalian brain.

Michelle Anne Mahowald was an American computational neuroscientist in the emerging field of neuromorphic engineering. In 1996 she was inducted into the Women in Technology International Hall of Fame for her development of the Silicon Eye and other computational systems. She committed suicide at age 33.

Network of human nervous system comprises nodes that are connected by links. The connectivity may be viewed anatomically, functionally, or electrophysiologically. These are presented in several Wikipedia articles that include Connectionism, Biological neural network, Artificial neural network, Computational neuroscience, as well as in several books by Ascoli, G. A. (2002), Sterratt, D., Graham, B., Gillies, A., & Willshaw, D. (2011), Gerstner, W., & Kistler, W. (2002), and Rumelhart, J. L., McClelland, J. L., and PDP Research Group (1986) among others. The focus of this article is a comprehensive view of modeling a neural network. Once an approach based on the perspective and connectivity is chosen, the models are developed at microscopic, mesoscopic, or macroscopic (system) levels. Computational modeling refers to models that are developed using computing tools.

Kwabena Adu Boahen is a Professor of Bioengineering and Electrical Engineering at Stanford University. He previously taught at the University of Pennsylvania.