Calculus is the mathematical study of continuous change, in the same way that geometry is the study of shape, and algebra is the study of generalizations of arithmetic operations.

In computability theory and computational complexity theory, a decision problem is a computational problem that can be posed as a yes–no question of the input values. An example of a decision problem is deciding by means of an algorithm whether a given natural number is prime. Another is the problem "given two numbers x and y, does x evenly divide y?". The answer is either 'yes' or 'no' depending upon the values of x and y. A method for solving a decision problem, given in the form of an algorithm, is called a decision procedure for that problem. A decision procedure for the decision problem "given two numbers x and y, does x evenly divide y?" would give the steps for determining whether x evenly divides y. One such algorithm is long division. If the remainder is zero the answer is 'yes', otherwise it is 'no'. A decision problem which can be solved by an algorithm is called decidable.
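As a minimal sketch, the decision procedure for the divisibility problem can be written in a few lines. The function name `divides` is an assumption made for illustration, and the remainder operator stands in for carrying out long division by hand.

```python
def divides(x: int, y: int) -> bool:
    """Decide the problem "does x evenly divide y?" for a nonzero integer x."""
    if x == 0:
        raise ValueError("x must be nonzero")
    # The remainder of y divided by x is what long division would leave over.
    # A zero remainder means the answer is 'yes', anything else means 'no'.
    return y % x == 0
```

The procedure always halts with a yes or no answer, which is what makes this particular problem decidable.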

In mathematics, Gaussian elimination, also known as row reduction, is an algorithm for solving systems of linear equations. It consists of a sequence of row-wise operations performed on the corresponding matrix of coefficients. This method can also be used to compute the rank of a matrix, the determinant of a square matrix, and the inverse of an invertible matrix. The method is named after Carl Friedrich Gauss (1777–1855). To perform row reduction on a matrix, one uses a sequence of elementary row operations to modify the matrix until the lower left-hand corner of the matrix is filled with zeros, as much as possible. There are three types of elementary row operations: swapping two rows, multiplying a row by a nonzero number, and adding a multiple of one row to another row.
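The reduction can be sketched as follows, with each of the three elementary row operations marked in a comment. The function name `row_echelon` and the use of exact `Fraction` arithmetic are assumptions made for illustration; practical numerical code would typically use floating point with pivoting.

```python
from fractions import Fraction

def row_echelon(matrix):
    """Return a row echelon form of `matrix` using exact rational arithmetic."""
    rows = [[Fraction(v) for v in row] for row in matrix]
    n_rows = len(rows)
    n_cols = len(rows[0]) if rows else 0
    pivot_row = 0
    for col in range(n_cols):
        if pivot_row >= n_rows:
            break
        # Look for a row at or below pivot_row with a nonzero entry in this column.
        src = next((r for r in range(pivot_row, n_rows) if rows[r][col] != 0), None)
        if src is None:
            continue  # no pivot in this column
        # Operation 1: swap two rows.
        rows[pivot_row], rows[src] = rows[src], rows[pivot_row]
        # Operation 2: multiply a row by a nonzero number (scale the pivot to 1).
        pivot = rows[pivot_row][col]
        rows[pivot_row] = [v / pivot for v in rows[pivot_row]]
        # Operation 3: add a multiple of one row to another (clear entries below).
        for r in range(pivot_row + 1, n_rows):
            factor = rows[r][col]
            rows[r] = [a - factor * b for a, b in zip(rows[r], rows[pivot_row])]
        pivot_row += 1
    return rows
```

For an augmented matrix such as `[[2, 1, -1, 8], [-3, -1, 2, -11], [-2, 1, 2, -3]]`, the result has zeros below the diagonal, and the number of nonzero rows gives the rank.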

In numerical analysis, Newton's method, also known as the Newton–Raphson method, named after Isaac Newton and Joseph Raphson, is a root-finding algorithm which produces successively better approximations to the roots of a real-valued function. The most basic version starts with a real-valued function f, its derivative f′, and an initial guess x0 for a root of f. If f satisfies certain assumptions and the initial guess is close, then x1 = x0 − f(x0)/f′(x0) is a better approximation of the root than x0.
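Iterating the Newton step can be sketched as below. The function name `newton`, the tolerance `tol`, and the iteration cap `max_iter` are illustrative assumptions, not part of the method's definition.

```python
def newton(f, f_prime, x0, tol=1e-12, max_iter=50):
    """Approximate a root of f starting from x0, given its derivative f_prime."""
    x = x0
    for _ in range(max_iter):
        fx = f(x)
        if abs(fx) < tol:
            return x
        dfx = f_prime(x)
        if dfx == 0:
            raise ZeroDivisionError("derivative vanished; Newton step undefined")
        # Newton step: follow the tangent line at x down to its zero.
        x = x - fx / dfx
    raise RuntimeError("no convergence within max_iter iterations")
```

For example, `newton(lambda x: x * x - 2, lambda x: 2 * x, 1.0)` approximates the square root of 2. A poor initial guess can make the iteration diverge or cycle, which is why the sketch caps the number of steps.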

Dynamic programming is both a mathematical optimization method and an algorithmic paradigm. The method was developed by Richard Bellman in the 1950s and has found applications in numerous fields, from aerospace engineering to economics.

In mathematical optimization, the method of Lagrange multipliers is a strategy for finding the local maxima and minima of a function subject to equation constraints. It is named after the mathematician Joseph-Louis Lagrange.

In mathematics, an algebraic equation or polynomial equation is an equation of the form P = 0, where P is a polynomial with coefficients in some field, often the field of the rational numbers. For example, x^5 − 3x + 1 = 0 is an algebraic equation with integer coefficients.

In computer science, 2-satisfiability, 2-SAT or just 2SAT is a computational problem of assigning values to variables, each of which has two possible values, in order to satisfy a system of constraints on pairs of variables. It is a special case of the general Boolean satisfiability problem, which can involve constraints on more than two variables, and of constraint satisfaction problems, which can allow more than two choices for the value of each variable. But in contrast to those more general problems, which are NP-complete, 2-satisfiability can be solved in polynomial time.
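One standard polynomial-time approach, sketched here under stated assumptions, turns each clause into two implications and looks at the strongly connected components of the resulting implication graph. The function name `solve_2sat` and the signed-integer encoding of literals are choices made for this illustration.

```python
def solve_2sat(n_vars, clauses):
    """Return a satisfying assignment as a list of bools, or None if unsatisfiable.

    Each clause is a pair of nonzero ints: +k means variable k, -k its negation
    (variables are numbered 1..n_vars).
    """
    def node(lit):
        # Node 2*(k-1) stands for x_k, node 2*(k-1)+1 for (not x_k).
        return 2 * (abs(lit) - 1) + (1 if lit < 0 else 0)

    n = 2 * n_vars
    graph = [[] for _ in range(n)]
    reverse = [[] for _ in range(n)]
    for a, b in clauses:
        # (a or b) is equivalent to (not a -> b) and (not b -> a).
        for u, v in ((node(-a), node(b)), (node(-b), node(a))):
            graph[u].append(v)
            reverse[v].append(u)

    # Kosaraju pass 1: record nodes in order of DFS finishing time.
    order, seen = [], [False] * n
    for start in range(n):
        if seen[start]:
            continue
        seen[start] = True
        stack = [(start, 0)]
        while stack:
            u, i = stack.pop()
            if i < len(graph[u]):
                stack.append((u, i + 1))
                v = graph[u][i]
                if not seen[v]:
                    seen[v] = True
                    stack.append((v, 0))
            else:
                order.append(u)

    # Pass 2: label components on the reversed graph, latest finisher first.
    comp = [-1] * n
    label = 0
    for start in reversed(order):
        if comp[start] != -1:
            continue
        comp[start] = label
        stack = [start]
        while stack:
            u = stack.pop()
            for v in reverse[u]:
                if comp[v] == -1:
                    comp[v] = label
                    stack.append(v)
        label += 1

    assignment = []
    for k in range(n_vars):
        if comp[2 * k] == comp[2 * k + 1]:
            return None  # x_k and (not x_k) imply each other: unsatisfiable
        # Labels follow a topological order of the implication graph, so a
        # literal is set true when its component comes after its negation's.
        assignment.append(comp[2 * k] > comp[2 * k + 1])
    return assignment
```

Both passes visit each node and edge a constant number of times, so the running time is linear in the number of variables and clauses.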

In number theory, a congruence of squares is a congruence commonly used in integer factorization algorithms.
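As a rough illustration of how such a congruence is used: if x² ≡ y² (mod n) while x is not congruent to ±y, then gcd(x − y, n) is a nontrivial factor of n. The helper name `factor_from_congruence` is an assumption for this sketch, and finding suitable x and y is the hard part that factorization algorithms address.

```python
from math import gcd

def factor_from_congruence(x, y, n):
    """Given x*x ≡ y*y (mod n), return a nontrivial factor of n, or None."""
    if (x * x - y * y) % n != 0:
        raise ValueError("x^2 and y^2 are not congruent modulo n")
    # n divides (x - y)(x + y), so x - y may share a proper factor with n.
    d = gcd(x - y, n)
    return d if 1 < d < n else None
```

With n = 1649, the pair x = 114, y = 80 satisfies the congruence and yields the factor 17.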

The quadratic sieve algorithm (QS) is an integer factorization algorithm and, in practice, the second-fastest method known. It is still the fastest for integers under 100 decimal digits or so, and is considerably simpler than the number field sieve. It is a general-purpose factorization algorithm, meaning that its running time depends solely on the size of the integer to be factored, and not on special structure or properties. It was invented by Carl Pomerance in 1981 as an improvement to Schroeppel's linear sieve.

In differential calculus, related rates problems involve finding a rate at which a quantity changes by relating that quantity to other quantities whose rates of change are known. The rate of change is usually with respect to time. Because science and engineering often relate quantities to each other, the methods of related rates have broad applications in these fields. Differentiation with respect to time or one of the other variables requires application of the chain rule, since most problems involve several variables.

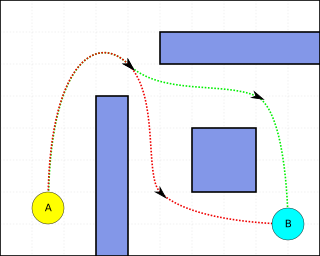

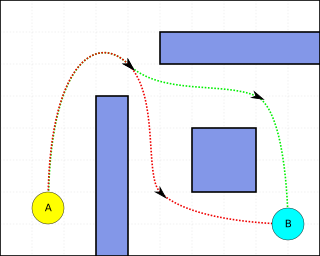

Pathfinding or pathing is the plotting, by a computer application, of the shortest route between two points. It is a more practical variant on solving mazes. This field of research is based heavily on Dijkstra's algorithm for finding the shortest path on a weighted graph.
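A minimal sketch of Dijkstra's algorithm with a binary heap is shown below. The adjacency-list format (a dict mapping each node to a list of `(neighbor, weight)` pairs) and the function name `dijkstra` are assumptions for illustration, and edge weights are assumed to be non-negative.

```python
import heapq

def dijkstra(graph, source):
    """Return a dict of shortest distances from source to every reachable node."""
    dist = {source: 0}
    heap = [(0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist.get(u, float("inf")):
            continue  # stale entry: a shorter route to u was already settled
        for v, w in graph.get(u, []):
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return dist
```

Recovering the route itself, rather than only its length, would additionally require storing each node's predecessor whenever its distance improves.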

In mathematics, specifically in elementary arithmetic and elementary algebra, given an equation between two fractions or rational expressions, one can cross-multiply to simplify the equation or determine the value of a variable.
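For instance, the equation x / b = c / d cross-multiplies to x · d = b · c, so x = b · c / d. The small helper below, whose name `solve_proportion` is purely illustrative, carries out that step with exact fractions.

```python
from fractions import Fraction

def solve_proportion(b, c, d):
    """Solve x / b = c / d for x by cross-multiplying: x * d = b * c."""
    if b == 0 or d == 0:
        raise ZeroDivisionError("denominators must be nonzero")
    return Fraction(b * c, d)
```

Solving x / 8 = 3 / 4 this way gives x = 6.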

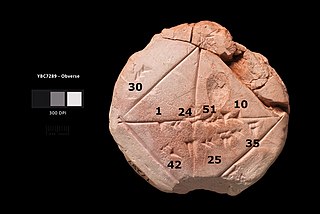

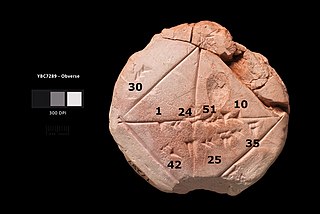

Babylonian mathematics is the mathematics developed or practiced by the people of Mesopotamia, from the days of the early Sumerians to the centuries following the fall of Babylon in 539 BC. Babylonian mathematical texts are plentiful and well edited. With respect to time they fall in two distinct groups: one from the Old Babylonian period, the other mainly Seleucid from the last three or four centuries BC. With respect to content, there is scarcely any difference between the two groups of texts. Babylonian mathematics remained constant, in character and content, for over a millennium.

In mathematical optimization, the ellipsoid method is an iterative method for minimizing convex functions over convex sets. The ellipsoid method generates a sequence of ellipsoids, each enclosing a minimizer of the convex function, whose volume decreases uniformly at every step.

Numerical linear algebra, sometimes called applied linear algebra, is the study of how matrix operations can be used to create computer algorithms which efficiently and accurately provide approximate answers to questions in continuous mathematics. It is a subfield of numerical analysis, and a type of linear algebra. Computers use floating-point arithmetic and cannot exactly represent irrational data, so when a computer algorithm is applied to a matrix of data, it can sometimes increase the difference between a number stored in the computer and the true number that it is an approximation of. Numerical linear algebra uses properties of vectors and matrices to develop computer algorithms that minimize the error introduced by the computer, and is also concerned with ensuring that the algorithm is as efficient as possible.

The rule of marteloio is a medieval technique of navigational computation that uses compass direction, distance and a simple trigonometric table known as the toleta de marteloio. The rule told mariners how to plot the traverse between two different navigation courses by means of resolving triangles with the help of the Toleta and basic arithmetic.

Jade Mirror of the Four Unknowns, Siyuan yujian (四元玉鉴), also referred to as Jade Mirror of the Four Origins, is a 1303 mathematical monograph by Yuan dynasty mathematician Zhu Shijie. Zhu advanced Chinese algebra with this magnum opus.

Kuṭṭaka is an algorithm for finding integer solutions of linear Diophantine equations. A linear Diophantine equation is an equation of the form ax + by = c where x and y are unknown quantities and a, b, and c are known quantities with integer values. The algorithm was originally invented by the Indian astronomer-mathematician Āryabhaṭa and is described very briefly in his Āryabhaṭīya. Āryabhaṭa did not give the algorithm the name Kuṭṭaka, and his description of the method was mostly obscure and incomprehensible. It was Bhāskara I who gave a detailed description of the algorithm, with several examples from astronomy, in his Āryabhaṭīyabhāṣya, and who gave the algorithm the name Kuṭṭaka. In Sanskrit, the word Kuṭṭaka means pulverization, and it indicates the nature of the algorithm. The algorithm in essence is a process where the coefficients in a given linear Diophantine equation are broken up into smaller numbers to get a linear Diophantine equation with smaller coefficients. In general, it is easy to find integer solutions of linear Diophantine equations with small coefficients. From a solution to the reduced equation, a solution to the original equation can be determined. Many Indian mathematicians after Āryabhaṭa have discussed the Kuṭṭaka method with variations and refinements. The Kuṭṭaka method was considered to be so important that the entire subject of algebra used to be called Kuṭṭaka-ganita or simply Kuṭṭaka. Sometimes the subject of solving linear Diophantine equations is also called Kuṭṭaka.
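The idea of reducing the coefficients step by step and then working back to a solution can be illustrated with the modern extended Euclidean algorithm. This is a stand-in that follows the same principle, not a reconstruction of the procedure as Āryabhaṭa or Bhāskara I stated it, and the function name `solve_linear_diophantine` is an assumption.

```python
def solve_linear_diophantine(a, b, c):
    """Return one integer solution (x, y) of a*x + b*y = c, or None if none exists."""
    # Extended Euclidean algorithm: every remainder r satisfies r = a*s + b*t.
    old_r, r = a, b
    old_s, s = 1, 0
    old_t, t = 0, 1
    while r != 0:
        q = old_r // r
        # Each step replaces the pair of coefficients with a smaller pair.
        old_r, r = r, old_r - q * r
        old_s, s = s, old_s - q * s
        old_t, t = t, old_t - q * t
    g = old_r  # a*old_s + b*old_t == g, a greatest common divisor up to sign
    if g == 0:
        return (0, 0) if c == 0 else None
    if c % g != 0:
        return None  # solvable only when gcd(a, b) divides c
    k = c // g
    return old_s * k, old_t * k
```

Any returned pair can be checked directly by substituting it into a*x + b*y and comparing with c. Further solutions follow by adding multiples of (b/g, −a/g) to the pair.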