In probability theory and statistics, Bayes' theorem, named after Thomas Bayes, describes the probability of an event, based on prior knowledge of conditions that might be related to the event. For example, if the risk of developing health problems is known to increase with age, Bayes' theorem allows the risk to an individual of a known age to be assessed more accurately by conditioning it relative to their age, rather than assuming that the individual is typical of the population as a whole.
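As a minimal sketch of the calculation, the snippet below applies Bayes' theorem to a screening-test scenario. The function name `bayes_posterior` and all numbers (1% prevalence, 90% true-positive rate, 5% false-positive rate) are hypothetical values chosen for illustration, not figures from the text.

```python
def bayes_posterior(prior, true_positive_rate, false_positive_rate):
    """Return P(H | E) from P(H), P(E | H) and P(E | not H)."""
    # Law of total probability: P(E) = P(E|H) P(H) + P(E|not H) P(not H)
    evidence = true_positive_rate * prior + false_positive_rate * (1.0 - prior)
    # Bayes' theorem: P(H|E) = P(E|H) P(H) / P(E)
    return true_positive_rate * prior / evidence


# Hypothetical inputs: 1% prevalence, 90% sensitivity, 5% false positives.
posterior = bayes_posterior(prior=0.01, true_positive_rate=0.90, false_positive_rate=0.05)
print(f"P(condition | positive test) = {posterior:.3f}")  # prints 0.154
```

Even with a fairly accurate test, the posterior stays low because the prior is small, which is the kind of conditioning on known information that the theorem formalizes.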

Bayesian inference is a method of statistical inference in which Bayes' theorem is used to update the probability for a hypothesis as more evidence or information becomes available. Fundamentally, Bayesian inference uses prior knowledge, in the form of a prior distribution, to estimate posterior probabilities. Bayesian inference is an important technique in statistics, and especially in mathematical statistics. Bayesian updating is particularly important in the dynamic analysis of a sequence of data. Bayesian inference has found application in a wide range of activities, including science, engineering, philosophy, medicine, sport, and law. In the philosophy of decision theory, Bayesian inference is closely related to subjective probability, often called "Bayesian probability".
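Sequential updating can be sketched with a conjugate Beta prior on the success probability of a coin. This is one illustrative model, assumed here for simplicity; the function `update_beta`, the uniform Beta(1, 1) prior, and the data are all hypothetical.

```python
def update_beta(alpha, beta, observations):
    """Update a Beta(alpha, beta) prior with 0/1 observations, one at a time.

    Returns the final parameters and the posterior mean after each observation.
    """
    posterior_means = []
    for x in observations:
        if x == 1:
            alpha += 1  # a success raises alpha
        else:
            beta += 1   # a failure raises beta
        posterior_means.append(alpha / (alpha + beta))
    return alpha, beta, posterior_means


# Hypothetical data: three successes and one failure, starting from Beta(1, 1).
alpha, beta, means = update_beta(1, 1, [1, 1, 0, 1])
print(alpha, beta, means)
```

Each posterior becomes the prior for the next observation, which is what makes the approach convenient for data that arrive as a sequence.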

In probability theory, the law of large numbers (LLN) is a mathematical theorem that states that the average of the results obtained from a large number of independent and identical random samples converges to the true value, if it exists. More formally, the LLN states that given a sample of independent and identically distributed values, the sample mean converges to the true mean.
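A small simulation can illustrate the statement. The sketch below, with a hypothetical helper `mean_of_die_rolls` and a fixed seed for repeatability, averages rolls of a fair six-sided die, whose true mean is 3.5.

```python
import random


def mean_of_die_rolls(n_rolls, seed=0):
    """Average of n_rolls independent rolls of a fair six-sided die."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    total = 0
    for _ in range(n_rolls):
        total += rng.randint(1, 6)  # uniform on {1, ..., 6}
    return total / n_rolls


# The sample mean should drift toward the true mean 3.5 as n grows.
for n in (10, 1_000, 100_000):
    print(n, mean_of_die_rolls(n))
```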

Vapnik–Chervonenkis theory was developed during 1960–1990 by Vladimir Vapnik and Alexey Chervonenkis. The theory is a form of computational learning theory, which attempts to explain the learning process from a statistical point of view.

Bayesian statistics is a theory in the field of statistics based on the Bayesian interpretation of probability, where probability expresses a degree of belief in an event. The degree of belief may be based on prior knowledge about the event, such as the results of previous experiments, or on personal beliefs about the event. This differs from a number of other interpretations of probability, such as the frequentist interpretation, which views probability as the limit of the relative frequency of an event after many trials. More concretely, analysis in Bayesian methods codifies prior knowledge in the form of a prior distribution.

In probability theory, the Borel–Kolmogorov paradox is a paradox relating to conditional probability with respect to an event of probability zero. It is named after Émile Borel and Andrey Kolmogorov.

A prior probability distribution of an uncertain quantity, often simply called the prior, is its assumed probability distribution before some evidence is taken into account. For example, the prior could be the probability distribution representing the relative proportions of voters who will vote for a particular politician in a future election. The unknown quantity may be a parameter of the model or a latent variable rather than an observable variable.

In probability theory and related fields, Malliavin calculus is a set of mathematical techniques and ideas that extend the mathematical field of calculus of variations from deterministic functions to stochastic processes. In particular, it allows the computation of derivatives of random variables. Malliavin calculus is also called the stochastic calculus of variations. P. Malliavin initiated the calculus on infinite-dimensional space. Later contributors, including S. Kusuoka, D. Stroock, J-M. Bismut, Shinzo Watanabe, and I. Shigekawa, completed the foundations.

In information theory, Shannon's source coding theorem establishes the statistical limits to possible data compression for data whose source is an independent identically-distributed random variable, and the operational meaning of the Shannon entropy.
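The entropy that sets this limit is straightforward to compute for a discrete source. The following is a minimal sketch; the function name `shannon_entropy` and the example distributions are illustrative choices, not part of the original text.

```python
import math


def shannon_entropy(probabilities):
    """Shannon entropy in bits of a discrete distribution."""
    # Terms with p == 0 contribute nothing (the limit of p*log p is 0).
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


fair_coin = shannon_entropy([0.5, 0.5])      # 1 bit per symbol
four_way = shannon_entropy([0.25] * 4)       # 2 bits per symbol
biased_coin = shannon_entropy([0.9, 0.1])    # below 1 bit per symbol
print(fair_coin, four_way, biased_coin)
```

Under the theorem, an i.i.d. source cannot be losslessly compressed to fewer bits per symbol on average than its entropy, so the biased coin is more compressible than the fair one.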

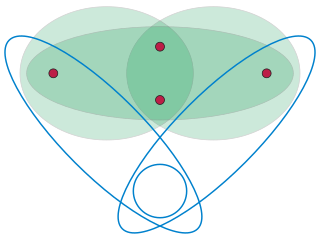

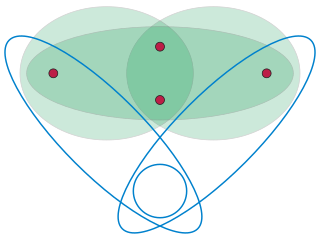

Common knowledge is a special kind of knowledge for a group of agents. There is common knowledge of p in a group of agents G when all the agents in G know p, they all know that they know p, they all know that they all know that they know p, and so on ad infinitum. It can be denoted as $C_G p$.

In information theory, the noisy-channel coding theorem establishes that for any given degree of noise contamination of a communication channel, it is possible to communicate discrete data nearly error-free up to a computable maximum rate through the channel. This result was presented by Claude Shannon in 1948 and was based in part on earlier work and ideas of Harry Nyquist and Ralph Hartley.
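To make the "computable maximum rate" concrete, the sketch below evaluates the capacity of a binary symmetric channel, a standard textbook channel assumed here purely for illustration. Its capacity is 1 − H(p) bits per channel use, where p is the bit-flip probability and H is the binary entropy function. The function names are hypothetical.

```python
import math


def binary_entropy(p):
    """Binary entropy H(p) in bits."""
    if p in (0.0, 1.0):
        return 0.0  # a certain outcome carries no uncertainty
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(flip_probability):
    """Capacity, in bits per use, of a binary symmetric channel."""
    return 1.0 - binary_entropy(flip_probability)


for p in (0.0, 0.1, 0.5):
    print(p, bsc_capacity(p))
```

A noiseless channel (p = 0) carries one bit per use, while a channel that flips bits half the time carries none.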

In statistics, generalized least squares (GLS) is a method used to estimate the unknown parameters in a linear regression model. It is used when there is a non-zero amount of correlation between the residuals in the regression model. GLS is employed to improve statistical efficiency and reduce the risk of drawing erroneous inferences, as compared to conventional least squares and weighted least squares methods. It was first described by Alexander Aitken in 1935.
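In the usual textbook notation (the symbols below are assumptions, since the text above introduces none), the model is $y = X\beta + \varepsilon$ with a known error covariance matrix $\Omega$, and the GLS estimator is:

$$
\operatorname{Cov}(\varepsilon \mid X) = \Omega,
\qquad
\hat{\beta}_{\mathrm{GLS}} = \left( X^{\mathsf{T}} \Omega^{-1} X \right)^{-1} X^{\mathsf{T}} \Omega^{-1} y .
$$

When $\Omega$ is a multiple of the identity this reduces to ordinary least squares, and when $\Omega$ is diagonal it reduces to weighted least squares.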

Exact division, also called consensus division, is a partition of a continuous resource ("cake") into some k pieces, such that all n people, who may have different tastes, agree on the value of each of the pieces. For example, consider a cake which is half chocolate and half vanilla. Alice values only the chocolate and George values only the vanilla. The cake is divided into three pieces: one piece contains 20% of the chocolate and 20% of the vanilla, the second contains 50% of the chocolate and 50% of the vanilla, and the third contains the rest of the cake. This is an exact division (with k = 3 and n = 2), as both Alice and George value the three pieces as 20%, 50% and 30% respectively. Several common variants and special cases are known by different terms:

In probability theory, Lindeberg's condition is a sufficient condition for the central limit theorem (CLT) to hold for a sequence of independent random variables. Unlike the classical CLT, which requires that the random variables in question have finite variance and be both independent and identically distributed, Lindeberg's CLT only requires that they have finite variance, satisfy Lindeberg's condition, and be independent. It is named after the Finnish mathematician Jarl Waldemar Lindeberg.
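Written out, with notation that is assumed here rather than taken from the text: let $X_1, X_2, \dots$ be independent with means $\mu_k$ and finite variances $\sigma_k^2$, and let $s_n^2 = \sum_{k=1}^{n} \sigma_k^2$. Lindeberg's condition requires that, for every $\varepsilon > 0$,

$$
\lim_{n \to \infty} \frac{1}{s_n^2} \sum_{k=1}^{n}
\mathbb{E}\!\left[ (X_k - \mu_k)^2 \, \mathbf{1}\{ |X_k - \mu_k| > \varepsilon s_n \} \right] = 0 .
$$

When it holds, the normalized sum $\frac{1}{s_n} \sum_{k=1}^{n} (X_k - \mu_k)$ converges in distribution to a standard normal random variable.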

Uniform convergence in probability is a form of convergence in probability in statistical asymptotic theory and probability theory. It means that, under certain conditions, the empirical frequencies of all events in a certain event-family converge to their theoretical probabilities. Uniform convergence in probability has applications to statistics as well as machine learning as part of statistical learning theory.

The exponential mechanism is a technique for designing differentially private algorithms. It was developed by Frank McSherry and Kunal Talwar in 2007. Their work was recognized as a co-winner of the 2009 PET Award for Outstanding Research in Privacy Enhancing Technologies.
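A minimal sketch of the mechanism in its commonly stated form: each candidate output r is selected with probability proportional to exp(ε·u(r) / (2Δu)), where u is a utility score and Δu its sensitivity. The function names, candidate labels, and utilities below are hypothetical, and the code is an illustration rather than a vetted privacy implementation.

```python
import math
import random


def exponential_mechanism_probs(utilities, epsilon, sensitivity):
    """Selection probabilities proportional to exp(epsilon * u / (2 * sensitivity))."""
    scale = epsilon / (2.0 * sensitivity)
    # Subtracting the maximum utility avoids overflow and leaves the
    # normalized probabilities unchanged.
    max_u = max(utilities)
    weights = [math.exp(scale * (u - max_u)) for u in utilities]
    total = sum(weights)
    return [w / total for w in weights]


def exponential_mechanism_sample(candidates, utilities, epsilon, sensitivity, rng=random):
    """Draw one candidate according to the exponential-mechanism probabilities."""
    probs = exponential_mechanism_probs(utilities, epsilon, sensitivity)
    return rng.choices(candidates, weights=probs, k=1)[0]


# Hypothetical candidates with utilities 1, 2, 3 and sensitivity 1.
probs = exponential_mechanism_probs([1.0, 2.0, 3.0], epsilon=1.0, sensitivity=1.0)
choice = exponential_mechanism_sample(["a", "b", "c"], [1.0, 2.0, 3.0], 1.0, 1.0,
                                      rng=random.Random(0))
print(probs, choice)
```

Smaller values of ε flatten the distribution toward uniform (more privacy, less utility), while larger values concentrate it on the highest-utility candidates.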

In functional analysis, a branch of mathematics, a selection theorem is a theorem that guarantees the existence of a single-valued selection function from a given set-valued map. There are various selection theorems, and they are important in the theories of differential inclusions, optimal control, and mathematical economics.

In combinatorial mathematics and extremal set theory, the Sauer–Shelah lemma states that every family of sets with small VC dimension consists of a small number of sets. It is named after Norbert Sauer and Saharon Shelah, who published it independently of each other in 1972. The same result was also published slightly earlier and again independently, by Vladimir Vapnik and Alexey Chervonenkis, after whom the VC dimension is named. In his paper containing the lemma, Shelah gives credit also to Micha Perles, and for this reason the lemma has also been called the Perles–Sauer–Shelah lemma and the Sauer–Shelah–Perles lemma.
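Quantitatively, the lemma says that a family of subsets of an n-element set with VC dimension at most d contains at most the sum of C(n, i) for i from 0 to d sets. The sketch below evaluates that bound; the function name `sauer_shelah_bound` is a hypothetical label.

```python
from math import comb


def sauer_shelah_bound(n, d):
    """Upper bound on the size of a set family over n elements with VC dimension <= d."""
    return sum(comb(n, i) for i in range(d + 1))


print(sauer_shelah_bound(5, 2))    # 1 + 5 + 10 = 16
print(sauer_shelah_bound(10, 3))   # 1 + 10 + 45 + 120 = 176, versus 2**10 = 1024 subsets
```

For fixed d the bound grows polynomially in n, in contrast to the 2^n subsets available without a VC-dimension restriction.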

Bayesian hierarchical modelling is a statistical model written in multiple levels that estimates the parameters of the posterior distribution using the Bayesian method. The sub-models combine to form the hierarchical model, and Bayes' theorem is used to integrate them with the observed data and account for all the uncertainty that is present. The result of this integration is the posterior distribution, also known as the updated probability estimate, obtained once additional evidence has been combined with the prior distribution.

Bayesian epistemology is a formal approach to various topics in epistemology that has its roots in Thomas Bayes' work in the field of probability theory. One advantage of its formal method in contrast to traditional epistemology is that its concepts and theorems can be defined with a high degree of precision. It is based on the idea that beliefs can be interpreted as subjective probabilities. As such, they are subject to the laws of probability theory, which act as the norms of rationality. These norms can be divided into static constraints, governing the rationality of beliefs at any moment, and dynamic constraints, governing how rational agents should change their beliefs upon receiving new evidence. The most characteristic Bayesian expression of these principles is found in the form of Dutch books, which illustrate irrationality in agents through a series of bets that lead to a loss for the agent no matter which of the probabilistic events occurs. Bayesians have applied these fundamental principles to various epistemological topics but Bayesianism does not cover all topics of traditional epistemology. The problem of confirmation in the philosophy of science, for example, can be approached through the Bayesian principle of conditionalization by holding that a piece of evidence confirms a theory if it raises the likelihood that this theory is true. Various proposals have been made to define the concept of coherence in terms of probability, usually in the sense that two propositions cohere if the probability of their conjunction is higher than if they were neutrally related to each other. The Bayesian approach has also been fruitful in the field of social epistemology, for example, concerning the problem of testimony or the problem of group belief. Bayesianism still faces various theoretical objections that have not been fully solved.
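The Dutch book argument mentioned above can be illustrated with a small calculation. In this sketch, an agent's credences in A and in not-A are treated as the prices the agent will pay for bets that return one unit if they win. The function name and the credences are hypothetical values chosen for illustration.

```python
def dutch_book_payoffs(price_a, price_not_a, stake=1.0):
    """Net payoff to an agent who buys a bet on A and a bet on not-A.

    Each bet costs price * stake and pays `stake` if it wins.
    """
    cost = (price_a + price_not_a) * stake
    # Exactly one of the two bets wins, whichever outcome occurs.
    return {"A occurs": stake - cost, "A does not occur": stake - cost}


# Incoherent credences: P(A) = 0.6 and P(not A) = 0.6 sum to more than 1.
incoherent = dutch_book_payoffs(0.6, 0.6)
# Coherent credences: P(A) = 0.6 and P(not A) = 0.4 sum to exactly 1.
coherent = dutch_book_payoffs(0.6, 0.4)
print(incoherent, coherent)
```

With the incoherent credences the agent loses about 0.2 units in both outcomes, while credences that obey the probability axioms leave no guaranteed loss in this pair of bets.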