Related Research Articles

Supervised learning (SL) is a paradigm in machine learning where input objects and a desired output value train a model. The training data is processed, building a function that maps new data on expected output values. An optimal scenario will allow for the algorithm to correctly determine output values for unseen instances. This requires the learning algorithm to generalize from the training data to unseen situations in a "reasonable" way. This statistical quality of an algorithm is measured through the so-called generalization error.

In machine learning, a neural network is a model inspired by the structure and function of biological neural networks in animal brains.

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalize to unseen data, and thus perform tasks without explicit instructions. Recently, artificial neural networks have been able to surpass many previous approaches in performance.

Unsupervised learning is a method in machine learning where, in contrast to supervised learning, algorithms learn patterns exclusively from unlabeled data. Within such an approach, a machine learning model tries to find any similarities, differences, patterns, and structure in data by itself. No prior human intervention is needed.

Feature selection is the process of selecting a subset of relevant features for use in model construction. Stylometry and DNA microarray analysis are two cases where feature selection is used. It should be distinguished from feature extraction.

Waikato Environment for Knowledge Analysis (Weka) is a collection of machine learning and data analysis free software licensed under the GNU General Public License. It was developed at the University of Waikato, New Zealand and is the companion software to the book "Data Mining: Practical Machine Learning Tools and Techniques".

Meta learning is a subfield of machine learning where automatic learning algorithms are applied to metadata about machine learning experiments. As of 2017, the term had not found a standard interpretation, however the main goal is to use such metadata to understand how automatic learning can become flexible in solving learning problems, hence to improve the performance of existing learning algorithms or to learn (induce) the learning algorithm itself, hence the alternative term learning to learn.

A surrogate model is an engineering method used when an outcome of interest cannot be easily measured or computed, so an approximate mathematical model of the outcome is used instead. Most engineering design problems require experiments and/or simulations to evaluate design objective and constraint functions as a function of design variables. For example, in order to find the optimal airfoil shape for an aircraft wing, an engineer simulates the airflow around the wing for different shape variables. For many real-world problems, however, a single simulation can take many minutes, hours, or even days to complete. As a result, routine tasks such as design optimization, design space exploration, sensitivity analysis and "what-if" analysis become impossible since they require thousands or even millions of simulation evaluations.

In machine learning, a hyperparameter is a parameter, such as the learning rate or choice of optimizer, which specifies details of the learning process, hence the name hyperparameter. This is in contrast to parameters which determine the model itself.

Bayesian optimization is a sequential design strategy for global optimization of black-box functions that does not assume any functional forms. It is usually employed to optimize expensive-to-evaluate functions.

Feature engineering, a preprocessing step in supervised machine learning and statistical modeling, transforms raw data into a more effective set of inputs. Each input comprises several attributes, known as features. By providing models with relevant information, feature engineering significantly enhances their predictive accuracy and decision-making capability.

Algorithm selection is a meta-algorithmic technique to choose an algorithm from a portfolio on an instance-by-instance basis. It is motivated by the observation that on many practical problems, different algorithms have different performance characteristics. That is, while one algorithm performs well in some scenarios, it performs poorly in others and vice versa for another algorithm. If we can identify when to use which algorithm, we can optimize for each scenario and improve overall performance. This is what algorithm selection aims to do. The only prerequisite for applying algorithm selection techniques is that there exists a set of complementary algorithms.

The following outline is provided as an overview of and topical guide to machine learning:

In machine learning, hyperparameter optimization or tuning is the problem of choosing a set of optimal hyperparameters for a learning algorithm. A hyperparameter is a parameter whose value is used to control the learning process.

Neural architecture search (NAS) is a technique for automating the design of artificial neural networks (ANN), a widely used model in the field of machine learning. NAS has been used to design networks that are on par or outperform hand-designed architectures. Methods for NAS can be categorized according to the search space, search strategy and performance estimation strategy used:

In machine learning and statistics, the learning rate is a tuning parameter in an optimization algorithm that determines the step size at each iteration while moving toward a minimum of a loss function. Since it influences to what extent newly acquired information overrides old information, it metaphorically represents the speed at which a machine learning model "learns". In the adaptive control literature, the learning rate is commonly referred to as gain.

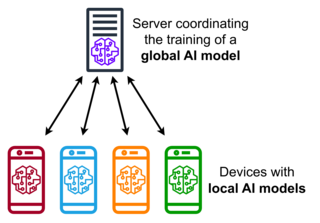

Federated learning is a sub-field of machine learning focusing on settings in which multiple entities collaboratively train a model while ensuring that their data remains decentralized. This stands in contrast to machine learning settings in which data is centrally stored. One of the primary defining characteristics of federated learning is data heterogeneity. Due to the decentralized nature of the clients' data, there is no guarantee that data samples held by each client are independently and identically distributed.

Automated Artificial Intelligence (AutoAI) is a variation of the automated machine learning or AutoML technology, which extends the automation of model building towards automation of the full life cycle of a machine learning model. It applies intelligent automation to the task of building predictive machine learning models by preparing data for training and identifying the best type of model for the given data. then choosing the features or columns of data that best support the problem the model is solving. Finally, automation evaluates a variety of tuning options to reach the best result as it generates, then ranks, model-candidate pipelines. The best performing pipelines can be put into production to process new data, and deliver predictions based on the model training. Automated artificial intelligence can also be applied to making sure the model doesn't have inherent bias and automating the tasks for continuous improvement of the model. Managing an AutoAI model requires frequent monitoring and updating, managed by a process known as model operations or ModelOps.

NNI is a free and open-source AutoML toolkit developed by Microsoft. It is used to automate feature engineering, model compression, neural architecture search, and hyper-parameter tuning.

Marius Lindauer is a German computer scientist and professor of machine learning at the institute of artificial intelligence of the Leibniz University Hannover. He is known for his research on Automated Machine Learning and other meta-algorithmic approaches.

References

- ↑ Thornton C, Hutter F, Hoos HH, Leyton-Brown K (2013). Auto-WEKA: Combined Selection and Hyperparameter Optimization of Classification Algorithms. KDD '13 Proceedings of the 19th ACM SIGKDD international conference on Knowledge discovery and data mining. pp. 847–855.

- 1 2 Hutter F, Caruana R, Bardenet R, Bilenko M, Guyon I, Kegl B, and Larochelle H. "AutoML 2014 @ ICML". AutoML 2014 Workshop @ ICML. Retrieved 2018-03-28.[ permanent dead link ]

- ↑ Olson, R.S., Urbanowicz, R.J., Andrews, P.C., Lavender, N.A., Kidd, L.C., Moore, J.H. (2016). Automating Biomedical Data Science Through Tree-Based Pipeline Optimization. In: Squillero, G., Burelli, P. (eds) Applications of Evolutionary Computation. EvoApplications 2016. Lecture Notes in Computer Science(), vol 9597. Springer, Cham. doi : 10.1007/978-3-319-31204-0_9

- ↑ De Bie, Tijl; De Raedt, Luc; Hernández-Orallo, José; Hoos, Holger H.; Smyth, Padhraic; Williams, Christopher K. I. (March 2022). "Automating Data Science". Communications of the ACM. 65 (3): 76–87. doi: 10.1145/3495256 . hdl: 10251/199907 .

- ↑ Erickson, Nick; Mueller, Jonas; Shirkov, Alexander; Zhang, Hang; Larroy, Pedro; Li, Mu; Smola, Alexander (2020-03-13). "AutoGluon-Tabular: Robust and Accurate AutoML for Structured Data". arXiv: 2003.06505 [stat.ML].

- ↑ Hutter, Frank; Kotthoff, Lars; Vanschoren, Joaquin, eds. (2019). Automated Machine Learning: Methods, Systems, Challenges. The Springer Series on Challenges in Machine Learning. Springer Nature. doi:10.1007/978-3-030-05318-5. hdl:20.500.12657/23012. ISBN 978-3-030-05317-8.

- ↑ Glover, Ellen (2018). "Machine Learning with Python: Clustering". Built in. doi:10.4135/9781526466426.

- ↑ "Meta Learning Challenges". metalearning.chalearn.org. Retrieved 2023-12-03.