Machine translation is the use of either rule-based or probabilistic machine learning approaches to the translation of text or speech from one language to another, including the contextual, idiomatic, and pragmatic nuances of both languages.

In linguistics and natural language processing, a corpus or text corpus is a dataset consisting of natively digital and older, digitized language resources, either annotated or unannotated.

Lexical semantics, as a subfield of linguistic semantics, is the study of word meanings. It includes the study of how words structure their meaning, how they act in grammar and compositionality, and the relationships between the distinct senses and uses of a word.

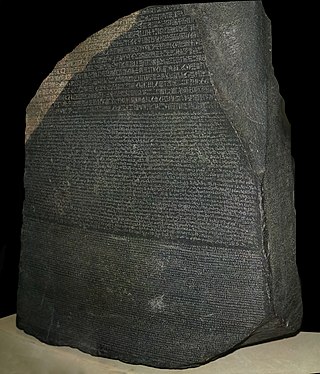

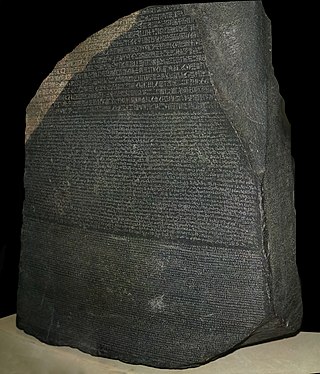

A parallel text is a text placed alongside its translation or translations. Parallel text alignment is the identification of the corresponding sentences in both halves of the parallel text. The Loeb Classical Library and the Clay Sanskrit Library are two examples of dual-language series of texts. Reference Bibles may contain the original languages and a translation, or several translations by themselves, for ease of comparison and study; Origen's Hexapla placed six versions of the Old Testament side by side. A famous example is the Rosetta Stone, whose discovery made it possible to begin deciphering the Ancient Egyptian language.

In corpus linguistics, a collocation is a series of words or terms that co-occur more often than would be expected by chance. In phraseology, a collocation is a type of compositional phraseme, meaning that it can be understood from the words that make it up. This contrasts with an idiom, where the meaning of the whole cannot be inferred from its parts, and may be completely unrelated.
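One common way to quantify "co-occur more often than would be expected by chance" is pointwise mutual information (PMI), which compares the observed frequency of a word pair with the frequency expected if the two words were independent. The sketch below is a minimal illustration under that assumption; the function name `pmi_scores`, the `min_count` threshold, and the toy sentence are all invented for the example, and real corpus tools use much larger corpora and often other association measures.

```python
import math
from collections import Counter


def pmi_scores(tokens, min_count=2):
    """Score adjacent word pairs by pointwise mutual information (PMI).

    PMI(w1, w2) = log2( P(w1, w2) / (P(w1) * P(w2)) ).
    A positive score means the pair occurs more often than chance predicts.
    """
    unigrams = Counter(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    n_unigrams = len(tokens)
    n_bigrams = max(len(tokens) - 1, 1)

    scores = {}
    for (w1, w2), count in bigrams.items():
        # Rare pairs give unreliable estimates, so skip them.
        if count < min_count:
            continue
        p_pair = count / n_bigrams
        p_w1 = unigrams[w1] / n_unigrams
        p_w2 = unigrams[w2] / n_unigrams
        scores[(w1, w2)] = math.log2(p_pair / (p_w1 * p_w2))
    return scores


# Toy corpus (invented for illustration).
tokens = ("strong tea and strong coffee but powerful computers "
          "and strong tea again").split()
scores = pmi_scores(tokens)
for pair, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(pair, round(score, 2))
```

On a corpus this small the scores are not meaningful; the point is only the shape of the computation: count pairs, count words, and compare observed against expected frequency.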

Machine translation can use a method based on dictionary entries, in which words are translated as a dictionary does: word by word, usually without taking into account how their meanings relate to one another. Dictionary lookups may be done with or without morphological analysis or lemmatisation. While this is probably the least sophisticated approach to machine translation, dictionary-based machine translation is ideally suited to translating long lists of phrases at the subsentential level, e.g. inventories or simple catalogs of products and services.
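The word-by-word lookup described above can be sketched in a few lines. This is a toy illustration, not a real system: the `LEXICON` entries, the `naive_lemma` helper (a crude stand-in for lemmatisation that only strips a plural "s"), and the `<word>` marker for unknown words are all assumptions made for the example.

```python
# Hypothetical toy English-to-Spanish lexicon (invented for illustration).
LEXICON = {
    "red": "rojo",
    "chair": "silla",
    "table": "mesa",
}


def naive_lemma(word):
    """Crude stand-in for lemmatisation: strip a trailing plural 's'."""
    if word.endswith("s") and len(word) > 3:
        return word[:-1]
    return word


def translate(phrase, lemmatise=True):
    """Translate word by word; unknown words are kept and marked as <word>."""
    output = []
    for word in phrase.lower().split():
        key = word
        # Fall back to the lemma only when the surface form is not listed.
        if key not in LEXICON and lemmatise:
            key = naive_lemma(word)
        output.append(LEXICON.get(key, f"<{word}>"))
    return " ".join(output)


print(translate("red chairs"))                   # lookup with lemmatisation
print(translate("red chairs", lemmatise=False))  # lookup on surface forms only
```

With lemmatisation the result is "rojo silla", which shows the limitation noted above: each word is translated in isolation, so word order and agreement are ignored (idiomatic Spanish would be "sillas rojas").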

Construction grammar is a family of theories within the field of cognitive linguistics which posit that constructions, or learned pairings of linguistic patterns with meanings, are the fundamental building blocks of human language. Constructions include words, morphemes, fixed expressions and idioms, and abstract grammatical rules such as the passive voice or the ditransitive. Any linguistic pattern is considered to be a construction as long as some aspect of its form or its meaning cannot be predicted from its component parts, or from other constructions that are recognized to exist. In construction grammar, every utterance is understood to be a combination of multiple different constructions, which together specify its precise meaning and form.

Statistical machine translation (SMT) was a machine translation approach that superseded the earlier rule-based approach. The rule-based approach required an explicit description of each and every linguistic rule, which was costly and often did not generalize to other languages. Since 2003, the statistical approach itself has been gradually superseded by the deep learning-based neural network approach.

Machine translation is a sub-field of computational linguistics that investigates the use of software to translate text or speech from one natural language to another.

In linguistics, grammaticality is determined by conformity to language usage as derived from the grammar of a particular speech variety. The notion of grammaticality arose alongside the theory of generative grammar, the goal of which is to formulate rules that define well-formed, grammatical sentences. These rules of grammaticality also provide explanations of ill-formed, ungrammatical sentences.

Transfer-based machine translation is a type of machine translation (MT). It is currently one of the most widely used methods of machine translation. In contrast to the simpler direct model of MT, transfer MT breaks translation into three steps: analysis of the source language text to determine its grammatical structure, transfer of the resulting structure to a structure suitable for generating text in the target language, and finally generation of this text. Transfer-based MT systems are thus capable of using knowledge of the source and target languages.
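The three steps (analysis, transfer, generation) can be sketched with a toy English-to-Spanish example. Everything here is an assumption made for illustration: the tiny `POS` and `LEXICON` tables, the single transfer rule (an adjective followed by a noun is reordered to noun then adjective), and the gender agreement that is hard-coded into the lexicon rather than computed. Real transfer systems work on full parse trees with many rules.

```python
# Hypothetical toy resources (invented for illustration).
POS = {"the": "DET", "red": "ADJ", "house": "NOUN"}
LEXICON = {"the": "la", "red": "roja", "house": "casa"}


def analyse(sentence):
    """Analysis: tag each source word with its part of speech."""
    return [(word, POS[word]) for word in sentence.lower().split()]


def transfer(tagged):
    """Transfer: map the source structure to a target-suitable structure.

    Single rule: ADJ NOUN (English order) becomes NOUN ADJ (Spanish order).
    """
    result = list(tagged)
    i = 0
    while i < len(result) - 1:
        if result[i][1] == "ADJ" and result[i + 1][1] == "NOUN":
            result[i], result[i + 1] = result[i + 1], result[i]
            i += 2  # skip past the pair that was just reordered
        else:
            i += 1
    return result


def generate(structure):
    """Generation: produce target-language words from the structure."""
    return " ".join(LEXICON[word] for word, _tag in structure)


def translate(sentence):
    return generate(transfer(analyse(sentence)))


print(translate("the red house"))
```

Keeping the three stages as separate functions mirrors the point made above: the analysis stage uses only source-language knowledge, the generation stage only target-language knowledge, and the transfer rule is the one place where the two languages are related.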

Contrastive linguistics is a practice-oriented linguistic approach that seeks to describe the differences and similarities between a pair of languages.

Rule-based machine translation (RBMT) refers to machine translation systems based on linguistic information about the source and target languages, retrieved mainly from dictionaries and grammars that cover the main semantic, morphological, and syntactic regularities of each language. Given input sentences, an RBMT system transforms them into output sentences on the basis of morphological, syntactic, and semantic analysis of both the source and the target languages involved in a concrete translation task.

Syntactic bootstrapping is a theory in developmental psycholinguistics and language acquisition which proposes that children learn word meanings by recognizing syntactic categories and the structure of their language. It is proposed that children have innate knowledge of the links between syntactic and semantic categories and can use these observations to make inferences about word meaning. Learning words in one's native language can be challenging because the extralinguistic context of use does not give specific enough information about word meanings. Therefore, in addition to extralinguistic cues, conclusions about syntactic categories are made which then lead to inferences about a word's meaning. This theory aims to explain the acquisition of lexical categories such as verbs, nouns, etc. and functional categories such as case markers, determiners, etc.

The outline of natural-language processing is provided as an overview of and topical guide to natural-language processing.

Machine translation (MT) algorithms may be classified by their operating principle. MT may be based on a set of linguistic rules, or on large bodies (corpora) of already existing parallel texts. Rule-based methodologies may consist in a direct word-by-word translation, or operate via a more abstract representation of meaning: a representation either specific to the language pair, or a language-independent interlingua. Corpora-based methodologies rely on machine learning and may follow specific examples taken from the parallel texts, or may calculate statistical probabilities to select a preferred option out of all possible translations.
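The last idea, calculating probabilities to select a preferred option among candidate translations, can be sketched as follows. This assumes a simple scoring scheme in which each candidate has a translation-model probability and a language-model probability, and the candidate with the highest product wins. The `Candidate` type, the field names, and all the numbers are invented for illustration; a real system would estimate such probabilities from parallel and monolingual corpora.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    translation_prob: float  # how well the candidate matches the source
    fluency_prob: float      # how plausible the candidate is in the target language


def best_translation(candidates):
    """Return the candidate with the highest combined probability."""
    return max(candidates, key=lambda c: c.translation_prob * c.fluency_prob)


# Invented numbers for an ambiguous source word that could mean
# either "bank" or "bench".
candidates = [
    Candidate("I sat on the bank", translation_prob=0.6, fluency_prob=0.01),
    Candidate("I sat on the bench", translation_prob=0.4, fluency_prob=0.05),
]

print(best_translation(candidates).text)
```

Here the more frequent dictionary sense loses because the second candidate is far more plausible as a target-language sentence, which is the kind of trade-off a statistical approach resolves numerically rather than by hand-written rules.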

In the traditional grammar of Modern English, a phrasal verb typically constitutes a single semantic unit consisting of a verb followed by a particle, sometimes collocated with a preposition.

Sketch Engine is a corpus manager and text analysis software developed by Lexical Computing CZ s.r.o. since 2003. Its purpose is to enable people studying language behaviour to search large text collections according to complex and linguistically motivated queries. Sketch Engine gained its name after one of the key features, word sketches: one-page, automatic, corpus-derived summaries of a word's grammatical and collocational behaviour. Currently, it supports and provides corpora in 90+ languages.

Prosodic bootstrapping in linguistics refers to the hypothesis that learners of a primary language (L1) use prosodic features such as pitch, tempo, rhythm, amplitude, and other auditory aspects of the speech signal as cues to identify other properties of grammar, such as syntactic structure. Acoustically signaled prosodic units in the stream of speech may provide critical perceptual cues by which infants initially discover syntactic phrases in their language. Although these features by themselves are not enough to help infants learn the entire syntax of their native language, they provide various cues about different grammatical properties of the language, such as identifying the ordering of heads and complements using stress prominence, and indicating the location of phrase boundaries and word boundaries. It is argued that the prosody of a language plays an initial role in first-language acquisition by helping children uncover the syntax of the language, mainly because children are sensitive to prosodic cues at a very young age.

Reverso is a French company specializing in AI-based language tools, translation aids, and language services. These include online translation based on neural machine translation (NMT), contextual dictionaries, online bilingual concordances, grammar and spell checking, and conjugation tools.