Corpus linguistics is an empirical method for the study of language by way of a text corpus. Corpora are balanced, often stratified collections of authentic, "real world", text of speech or writing that aim to represent a given linguistic variety. Today, corpora are generally machine-readable data collections.

Optical character recognition or optical character reader (OCR) is the electronic or mechanical conversion of images of typed, handwritten or printed text into machine-encoded text, whether from a scanned document, a photo of a document, a scene photo or from subtitle text superimposed on an image.

In linguistics and natural language processing, a corpus or text corpus is a dataset consisting of natively digital and older, digitized language resources, either annotated or unannotated.

An n-gram is a sequence of n adjacent symbols in a particular order. The symbols may be n adjacent letters, syllables, or, rarely, whole words found in a language dataset; adjacent phonemes extracted from a speech-recording dataset; or adjacent base pairs extracted from a genome. They are collected from a text corpus or speech corpus. If Latin numerical prefixes are used, an n-gram of size 1 is called a "unigram", size 2 a "bigram", and so on. If English cardinal numbers are used instead of the Latin prefixes, they are called "four-gram", "five-gram", etc. Similarly, computational biology uses Greek numerical prefixes ("monomer", "dimer", "trimer", "tetramer", "pentamer", etc.) or English cardinal numbers ("one-mer", "two-mer", "three-mer", etc.) for polymers or oligomers of a known size, called k-mers. When the items are words, n-grams may also be called shingles.
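As a minimal sketch of the definition above, the following Python snippet slides a window of size n over a sequence and collects each run of adjacent symbols. The function name `ngrams` and the sample inputs are illustrative assumptions, not part of any particular corpus toolkit.

```python
from collections import Counter


def ngrams(symbols, n):
    """Return every run of n adjacent symbols, in order of appearance."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # A sequence of length L yields L - n + 1 windows (none if L < n).
    return [tuple(symbols[i:i + n]) for i in range(len(symbols) - n + 1)]


# Word-level bigrams from a whitespace-tokenized sentence (hypothetical input).
words = "to be or not to be".split()
word_bigrams = ngrams(words, 2)

# Character-level trigrams from a single word.
char_trigrams = ngrams("corpus", 3)

# Counting repeated n-grams is the usual next step in corpus work.
bigram_counts = Counter(word_bigrams)
```

The same function works on letters, words, phonemes, or base pairs, because it only assumes that the input is an ordered sequence.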

Google Books is a service from Google that searches the full text of books and magazines that Google has scanned, converted to text using optical character recognition (OCR), and stored in its digital database. Books are provided either by publishers and authors through the Google Books Partner Program, or by Google's library partners through the Library Project. Additionally, Google has partnered with a number of magazine publishers to digitize their archives.

Linguistic categories include

Internet linguistics is a domain of linguistics advocated by the English linguist David Crystal. It studies new language styles and forms that have arisen under the influence of the Internet and of other new media, such as Short Message Service (SMS) text messaging. Since the beginning of human–computer interaction (HCI) leading to computer-mediated communication (CMC) and Internet-mediated communication (IMC), experts such as Gretchen McCulloch have acknowledged that linguistics has a contributing role in it, in terms of web interface and usability. Studying the emerging language on the Internet can help improve conceptual organization, translation and web usability. Such study aims to benefit both linguists and web users.

The International Corpus of English (ICE) is a set of text corpora representing varieties of English from around the world. Over twenty countries or groups of countries where English is the first language or an official second language are included.

The Corpus of Contemporary American English (COCA) is a one-billion-word corpus of contemporary American English. It was created by Mark Davies, retired professor of corpus linguistics at Brigham Young University (BYU).

A word list is a list of a language's lexicon within some given text corpus, serving the purpose of vocabulary acquisition. A lexicon sorted by frequency "provides a rational basis for making sure that learners get the best return for their vocabulary learning effort", but is mainly intended for course writers, not directly for learners. Frequency lists are also made for lexicographical purposes, serving as a sort of checklist to ensure that common words are not left out. Some major pitfalls are the corpus content, the corpus register, and the definition of "word". While word counting is a thousand years old, with gigantic analyses still done by hand in the mid-20th century, electronic natural language processing of large corpora such as movie subtitles has accelerated the research field.
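A frequency-sorted word list can be sketched in a few lines of Python. The tokenizer here (lowercased runs of letters and apostrophes) is only one assumed definition of "word", which is exactly the pitfall noted above; the function name `frequency_list` and the sample text are hypothetical.

```python
import re
from collections import Counter


def frequency_list(text):
    """Return (word, count) pairs sorted from most to least frequent."""
    # Assumption: a "word" is a lowercased run of ASCII letters or apostrophes.
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(tokens).most_common()


sample = "The cat sat on the mat. The mat was flat."
ranked = frequency_list(sample)
```

Changing the regular expression, for example to keep hyphenated forms together or to separate clitics, changes the resulting counts, so any real list should document its tokenization rule.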

Mark E. Davies is an American linguist. He specializes in corpus linguistics and language variation and change. He is the creator of most of the text corpora from English-Corpora.org as well as the Corpus del español and the Corpus do português. He has also created large datasets of word frequency, collocate, and n-gram data, which have been used by many large companies in technology and language learning.

Culturomics is a form of computational lexicology that studies human behavior and cultural trends through the quantitative analysis of digitized texts. Researchers data mine large digital archives to investigate cultural phenomena reflected in language and word usage. The term is an American neologism first described in a 2010 Science article called Quantitative Analysis of Culture Using Millions of Digitized Books, co-authored by Harvard researchers Jean-Baptiste Michel and Erez Lieberman Aiden.

The following outline is provided as an overview of and topical guide to natural-language processing:

Computational social science is an interdisciplinary academic sub-field concerned with computational approaches to the social sciences. This means that computers are used to model, simulate, and analyze social phenomena. It has been applied in areas such as computational economics, computational sociology, computational media analysis, cliodynamics, culturomics, and nonprofit studies. It focuses on investigating social and behavioral relationships and interactions using data science approaches, network analysis, social simulation and studies using interactive systems.

In natural language processing (NLP), a word embedding is a representation of a word. The embedding is used in text analysis. Typically, the representation is a real-valued vector that encodes the meaning of the word in such a way that the words that are closer in the vector space are expected to be similar in meaning. Word embeddings can be obtained using language modeling and feature learning techniques, where words or phrases from the vocabulary are mapped to vectors of real numbers.
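The idea that closer vectors indicate more similar meanings is often measured with cosine similarity. The sketch below uses hand-made three-dimensional toy vectors, which are purely illustrative assumptions and not the output of any trained model; real embeddings typically have many more dimensions.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical toy embeddings, invented for illustration only.
toy_vectors = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

sim_cat_dog = cosine_similarity(toy_vectors["cat"], toy_vectors["dog"])
sim_cat_car = cosine_similarity(toy_vectors["cat"], toy_vectors["car"])
```

With these toy values, "cat" scores higher against "dog" than against "car", mirroring the expectation that words with similar meanings lie closer together in the vector space.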

Sketch Engine is a corpus manager and text analysis software developed by Lexical Computing since 2003. Its purpose is to enable people studying language behaviour to search large text collections according to complex and linguistically motivated queries. Sketch Engine takes its name from one of its key features, word sketches: one-page, automatic, corpus-derived summaries of a word's grammatical and collocational behaviour. Currently, it supports and provides corpora in over 90 languages.

Word2vec is a technique in natural language processing (NLP) for obtaining vector representations of words. These vectors capture information about the meaning of the word based on the surrounding words. The word2vec algorithm estimates these representations by modeling text in a large corpus. Once trained, such a model can detect synonymous words or suggest additional words for a partial sentence. Word2vec was developed by Tomáš Mikolov and colleagues at Google and published in 2013.

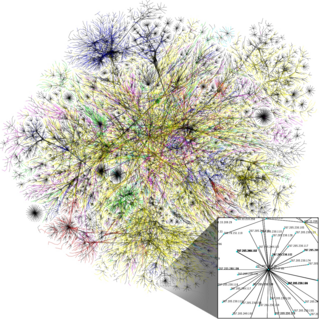

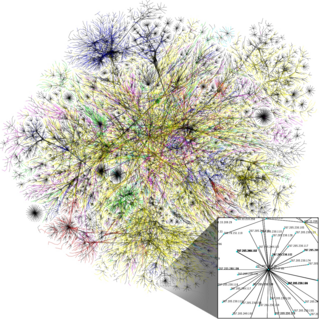

In natural language processing, linguistics, and neighboring fields, Linguistic Linked Open Data (LLOD) describes a method and an interdisciplinary community concerned with creating, sharing, and (re-)using language resources in accordance with Linked Data principles. The Linguistic Linked Open Data Cloud was conceived and is being maintained by the Open Linguistics Working Group (OWLG) of the Open Knowledge Foundation, but has since been a focal point of activity for several W3C community groups, research projects, and infrastructure efforts.

The TenTen Corpus Family (also called TenTen corpora) is a set of comparable web text corpora, i.e. collections of texts that have been crawled from the World Wide Web and processed to match the same standards. These corpora are made available through the Sketch Engine corpus manager. There are TenTen corpora for more than 35 languages. Their target size is 10 billion (10^10) words per language, which gave rise to the corpus family's name.