Notes

- This value, approximately 10⁄3 but slightly less, can be understood as follows: 3 decimal digits carry slightly less information than 10 binary digits (10³ = 1000 < 1024 = 2¹⁰), so 1 decimal digit carries slightly less than 10⁄3 binary digits.

The hartley (symbol Hart), also called a ban, or a dit (short for decimal digit), [1] [2] [3] is a logarithmic unit that measures information or entropy, based on base 10 logarithms and powers of 10. One hartley is the information content of an event if the probability of that event occurring is 1⁄10. [4] It is therefore equal to the information contained in one decimal digit (or dit), assuming a priori equiprobability of each possible value. It is named after Ralph Hartley.

If base 2 logarithms and powers of 2 are used instead, then the unit of information is the shannon or bit, which is the information content of an event if the probability of that event occurring is 1⁄2. Natural logarithms and powers of e define the nat.

One ban corresponds to ln(10) nat = log₂(10) Sh, or approximately 2.303 nat, or 3.322 bit (3.322 Sh). [lower-alpha 1] A deciban is one tenth of a ban (or about 0.332 Sh); the name is formed from ban by the SI prefix deci-.
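The conversion factors above follow from the change-of-base rule for logarithms. The following is a minimal sketch in Python; the names `UNIT_BASE`, `information_content`, and `convert` are illustrative choices for this example, not part of any standard library.

```python
import math

# Logarithm base associated with each unit of information.
UNIT_BASE = {"hartley": 10.0, "shannon": 2.0, "nat": math.e}


def information_content(p: float, unit: str = "hartley") -> float:
    """Information content -log_b(p) of an event with probability p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in the interval (0, 1]")
    return -math.log(p) / math.log(UNIT_BASE[unit])


def convert(value: float, from_unit: str, to_unit: str) -> float:
    """Convert an amount of information from one unit to another."""
    return value * math.log(UNIT_BASE[from_unit]) / math.log(UNIT_BASE[to_unit])


one_ban_in_nats = convert(1.0, "hartley", "nat")          # ln(10)
one_ban_in_shannons = convert(1.0, "hartley", "shannon")  # log2(10)
one_deciban_in_shannons = one_ban_in_shannons / 10

print(f"{one_ban_in_nats:.3f} nat, {one_ban_in_shannons:.3f} Sh, "
      f"{one_deciban_in_shannons:.3f} Sh per deciban")
```

An event with probability 1⁄10 carries one hartley under this definition, so `information_content(0.1)` should evaluate to 1 up to floating-point rounding.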

Though there is no associated SI unit, information entropy is part of the International System of Quantities, defined by International Standard IEC 80000-13 of the International Electrotechnical Commission.

The hartley is named after Ralph Hartley, who suggested in 1928 that information be measured using a logarithmic base equal to the number of distinguishable states in its representation, which would be base 10 for a decimal digit. [5] [6]

The ban and the deciban were invented by Alan Turing with Irving John "Jack" Good in 1940, to measure the amount of information that could be deduced by the codebreakers at Bletchley Park using the Banburismus procedure, towards determining each day's unknown setting of the German naval Enigma cipher machine. The name was inspired by the enormous sheets of card, printed in the town of Banbury about 30 miles away, that were used in the process. [7]

Good argued that the sequential summation of decibans, building up a measure of the weight of evidence in favour of a hypothesis, is essentially Bayesian inference. [7] Donald A. Gillies, however, argued that the ban is, in effect, the same as Karl Popper's measure of the severity of a test. [8]

The deciban is a particularly useful unit for log-odds, notably as a measure of information in Bayes factors, odds ratios (a ratio of odds, so its logarithm is a difference of log-odds), or weights of evidence. 10 decibans correspond to odds of 10:1, 20 decibans to odds of 100:1, and so on. According to Good, a change in a weight of evidence of 1 deciban (i.e., a change in the odds from evens to about 5:4) is about as finely as humans can reasonably be expected to quantify their degree of belief in a hypothesis. [9]
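Because decibans are logarithmic, independent pieces of evidence combine by addition rather than multiplication. The sketch below illustrates this under stated assumptions: the function names and the likelihood ratios are hypothetical, chosen only for the example.

```python
import math
from typing import Iterable


def decibans(likelihood_ratio: float) -> float:
    """Weight of evidence, in decibans, carried by a likelihood ratio."""
    return 10.0 * math.log10(likelihood_ratio)


def posterior_odds(prior_odds: float, likelihood_ratios: Iterable[float]) -> float:
    """Update odds by summing decibans instead of multiplying ratios."""
    total_db = decibans(prior_odds) + sum(decibans(r) for r in likelihood_ratios)
    return 10.0 ** (total_db / 10.0)


# Hypothetical example: even prior odds and three independent observations.
ratios = [2.0, 5.0, 0.5]
odds = posterior_odds(1.0, ratios)  # same as 1.0 * 2.0 * 5.0 * 0.5, up to rounding

print(f"total evidence: {sum(decibans(r) for r in ratios):.2f} decibans")
print(f"posterior odds: {odds:.2f}:1")
```

A ratio below 1 contributes a negative number of decibans, so evidence against the hypothesis is subtracted from the running total.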

Odds corresponding to integer decibans can often be well approximated by simple integer ratios; these are collated below. The table gives each value to two decimal places, a simple approximate ratio (to within about 5%), and a more accurate ratio (to within 1%) where the simple one is inaccurate:

| decibans | exact value | approx. value | approx. ratio | accurate ratio | probability |
|---|---|---|---|---|---|
| 0 | 10^(0/10) | 1 | 1:1 | | 50% |
| 1 | 10^(1/10) | 1.26 | 5:4 | | 56% |
| 2 | 10^(2/10) | 1.58 | 3:2 | 8:5 | 61% |
| 3 | 10^(3/10) | 2.00 | 2:1 | | 67% |
| 4 | 10^(4/10) | 2.51 | 5:2 | | 71.5% |
| 5 | 10^(5/10) | 3.16 | 3:1 | 19:6, 16:5 | 76% |
| 6 | 10^(6/10) | 3.98 | 4:1 | | 80% |
| 7 | 10^(7/10) | 5.01 | 5:1 | | 83% |
| 8 | 10^(8/10) | 6.31 | 6:1 | 19:3, 25:4 | 86% |
| 9 | 10^(9/10) | 7.94 | 8:1 | | 89% |
| 10 | 10^(10/10) | 10 | 10:1 | | 91% |
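The approximate values and probabilities in the table can be recomputed directly: the odds for d decibans are 10^(d/10), and odds o correspond to probability o / (1 + o). A short sketch, with helper names that are assumptions of this example:

```python
def odds_from_decibans(db: float) -> float:
    """Odds (as a single number o, meaning o:1) for a given number of decibans."""
    return 10.0 ** (db / 10.0)


def probability_from_odds(odds: float) -> float:
    """Probability p such that p / (1 - p) equals the given odds."""
    return odds / (1.0 + odds)


# One row per integer deciban value from 0 to 10.
rows = []
for db in range(11):
    o = odds_from_decibans(db)
    rows.append((db, o, probability_from_odds(o)))

for db, o, p in rows:
    print(f"{db:2d} dB  odds {o:5.2f}:1  probability {p:6.1%}")
```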

The bit is the most basic unit of information in computing and digital communications. The name is a portmanteau of binary digit. The bit represents a logical state with one of two possible values. These values are most commonly represented as either "1" or "0", but other representations such as true/false, yes/no, +/−, or on/off are commonly used.

In the computer science subfield of algorithmic information theory, a Chaitin constant or halting probability is a real number that, informally speaking, represents the probability that a randomly constructed program will halt. These numbers are formed from a construction due to Gregory Chaitin.

The number e, also known as Euler's number, is a mathematical constant approximately equal to 2.71828 which can be characterized in many ways. It is the base of the natural logarithms. It is the limit of $(1 + 1/n)^n$ as n approaches infinity, an expression that arises in the study of compound interest. It can also be calculated as the sum of the infinite series

$$e = \sum_{n=0}^{\infty} \frac{1}{n!}$$

Information theory is the scientific study of the quantification, storage, and communication of digital information. The field was fundamentally established by the works of Harry Nyquist and Ralph Hartley, in the 1920s, and Claude Shannon in the 1940s. The field is at the intersection of probability theory, statistics, computer science, statistical mechanics, information engineering, and electrical engineering.

In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes. Given a discrete random variable $X$, with possible outcomes $x_1, \ldots, x_n$, which occur with probability $P(x_1), \ldots, P(x_n)$, the entropy of $X$ is formally defined as:

$$H(X) = -\sum_{i=1}^{n} P(x_i) \log P(x_i)$$
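The choice of logarithm base in the entropy sum fixes the unit: base 10 gives hartleys, base 2 shannons, and base e nats. A minimal sketch, assuming the distribution is passed as a sequence of probabilities; the function name `entropy` and its `base` parameter are illustrative choices for this example.

```python
import math
from typing import Sequence


def entropy(probs: Sequence[float], base: float = 10.0) -> float:
    """Entropy -sum(p * log_b(p)); base 10 yields a result in hartleys."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Outcomes with zero probability contribute nothing to the sum.
    return -sum(p * math.log(p, base) for p in probs if p > 0)


uniform_digit = [0.1] * 10                   # one equiprobable decimal digit
h_hartley = entropy(uniform_digit)           # about 1 hartley
h_shannon = entropy(uniform_digit, base=2)   # about log2(10) shannons

print(f"{h_hartley:.3f} Hart = {h_shannon:.3f} Sh")
```

The uniform decimal digit is the case described in the article: ten equally likely values carry one hartley of entropy.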

In mathematics, the logarithm is the inverse function to exponentiation. That means the logarithm of a given number x is the exponent to which another fixed number, the base b, must be raised, to produce that number x. In the simplest case, the logarithm counts the number of occurrences of the same factor in repeated multiplication; e.g. since $1000 = 10 \times 10 \times 10 = 10^3$, the "logarithm base 10" of 1000 is 3, or $\log_{10}(1000) = 3$. The logarithm of x to base b is denoted as $\log_b(x)$, or without parentheses, $\log_b x$, or even without the explicit base, $\log x$, when no confusion is possible, or when the base does not matter such as in big O notation.

Benford's law, also known as the Newcomb–Benford law, the law of anomalous numbers, or the first-digit law, is an observation that in many real-life sets of numerical data, the leading digit is likely to be small. In sets that obey the law, the number 1 appears as the leading significant digit about 30 % of the time, while 9 appears as the leading significant digit less than 5 % of the time. If the digits were distributed uniformly, they would each occur about 11.1 % of the time. Benford's law also makes predictions about the distribution of second digits, third digits, digit combinations, and so on.

In information theory, the Shannon–Hartley theorem gives the maximum rate at which information can be transmitted over a communications channel of a specified bandwidth in the presence of noise. It is an application of the noisy-channel coding theorem to the archetypal case of a continuous-time analog communications channel subject to Gaussian noise. The theorem establishes Shannon's channel capacity for such a communication link, a bound on the maximum amount of error-free information per time unit that can be transmitted with a specified bandwidth in the presence of the noise interference, assuming that the signal power is bounded, and that the Gaussian noise process is characterized by a known power or power spectral density. The theorem is named after Claude Shannon and Ralph Hartley.

A logarithmic scale is a way of displaying numerical data over a very wide range of values in a compact way; typically the largest numbers in the data are hundreds or even thousands of times larger than the smallest numbers. Such a scale is nonlinear: the numbers 10 and 20, and 60 and 70, are not the same distance apart on a log scale. Rather, the numbers 10 and 100, and 60 and 600 are equally spaced. Thus moving a unit of distance along the scale means the number has been multiplied by 10. Exponential growth curves are often displayed on a log scale, because otherwise they would increase too quickly to fit within a small graph. Another way to think about it is that the number of digits of the data grows at a constant rate. For example, the numbers 10, 100, 1000, and 10000 are equally spaced on a log scale, because their number of digits goes up by 1 each time: 2, 3, 4, and 5 digits. In this way, adding two digits multiplies the quantity measured on the log scale by a factor of 100.

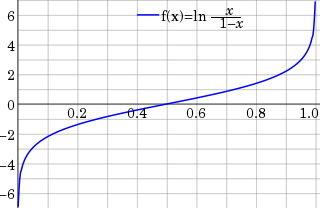

In statistics, the logit function is the quantile function associated with the standard logistic distribution. It has many uses in data analysis and machine learning, especially in data transformations.

Odds provide a measure of the likelihood of a particular outcome. They are calculated as the ratio of the number of events that produce that outcome to the number that do not. Odds are commonly used in gambling and statistics.

In mathematics, the binary logarithm is the power to which the number 2 must be raised to obtain the value n. That is, for any real number x,

$$x = \log_2 n \iff 2^x = n$$

In information theory, the information content, self-information, surprisal, or Shannon information is a basic quantity derived from the probability of a particular event occurring from a random variable. It can be thought of as an alternative way of expressing probability, much like odds or log-odds, but which has particular mathematical advantages in the setting of information theory.

The natural unit of information, sometimes also nit or nepit, is a unit of information, based on natural logarithms and powers of e, rather than the powers of 2 and base 2 logarithms, which define the shannon. This unit is also known by its unit symbol, the nat. One nat is the information content of an event when the probability of that event occurring is 1/e.

The Hartley function is a measure of uncertainty, introduced by Ralph Hartley in 1928. If a sample is picked uniformly at random from a finite set A, the information revealed after the outcome is known is given by the Hartley function

$$H_0(A) := \log_b |A|$$

where $|A|$ denotes the cardinality of A.

The decisive event which established the discipline of information theory, and brought it to immediate worldwide attention, was the publication of Claude E. Shannon's classic paper "A Mathematical Theory of Communication" in the Bell System Technical Journal in July and October 1948.

Statistical inference might be thought of as gambling theory applied to the world around us. The myriad applications for logarithmic information measures tell us precisely how to take the best guess in the face of partial information. In that sense, information theory might be considered a formal expression of the theory of gambling. It is no surprise, therefore, that information theory has applications to games of chance.

ISO 80000 or IEC 80000 is an international standard introducing the International System of Quantities (ISQ). It was developed and promulgated jointly by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC).

In computing and telecommunications, a unit of information is the capacity of some standard data storage system or communication channel, used to measure the capacities of other systems and channels. In information theory, units of information are also used to measure information contained in messages and the entropy of random variables.

Single-precision floating-point format is a computer number format, usually occupying 32 bits in computer memory; it represents a wide dynamic range of numeric values by using a floating radix point.