Related Research Articles

Evidence-based medicine (EBM) is "the conscientious, explicit and judicious use of current best evidence in making decisions about the care of individual patients." The aim of EBM is to integrate the experience of the clinician, the values of the patient, and the best available scientific information to guide decision-making about clinical management. The term was originally used to describe an approach to teaching the practice of medicine and improving decisions by individual physicians about individual patients.

A randomized controlled trial is a form of scientific experiment used to control factors not under direct experimental control. Examples of RCTs are clinical trials that compare the effects of drugs, surgical techniques, medical devices, diagnostic procedures, diets or other medical treatments.

In its most common sense, methodology is the study of research methods. However, the term can also refer to the methods themselves or to the philosophical discussion of associated background assumptions. A method is a structured procedure for bringing about a certain goal, like acquiring knowledge or verifying knowledge claims. This normally involves various steps, like choosing a sample, collecting data from this sample, and interpreting the data. The study of methods concerns a detailed description and analysis of these processes. It includes evaluative aspects by comparing different methods. This way, it is assessed what advantages and disadvantages they have and for what research goals they may be used. These descriptions and evaluations depend on philosophical background assumptions. Examples are how to conceptualize the studied phenomena and what constitutes evidence for or against them. When understood in the widest sense, methodology also includes the discussion of these more abstract issues.

Medical education is education related to the practice of being a medical practitioner, including the initial training to become a physician and additional training thereafter.

Evidence-based policy is a concept in public policy that advocates for policy decisions to be grounded on, or influenced by, rigorously established objective evidence. This concept presents a stark contrast to policymaking predicated on ideology, 'common sense,' anecdotes, or personal intuitions. The methodology employed in evidence-based policy often includes comprehensive research methods such as randomized controlled trials (RCTs). Good data, analytical skills, and political support for the use of scientific information are typically seen as the crucial elements of an evidence-based approach.

A systematic review is a scholarly synthesis of the evidence on a clearly presented topic using critical methods to identify, define and assess research on the topic. A systematic review extracts and interprets data from published studies on the topic, then analyzes, describes, critically appraises and summarizes interpretations into a refined evidence-based conclusion. For example, a systematic review of randomized controlled trials is a way of summarizing and implementing evidence-based medicine.

Evidence-based practice is the idea that occupational practices ought to be based on scientific evidence. The movement towards evidence-based practices attempts to encourage and, in some instances, require professionals and other decision-makers to pay more attention to evidence to inform their decision-making. The goal of evidence-based practice is to eliminate unsound or outdated practices in favor of more-effective ones by shifting the basis for decision making from tradition, intuition, and unsystematic experience to firmly grounded scientific research. The proposal has been controversial, with some arguing that results may not specialize to individuals as well as traditional practices.

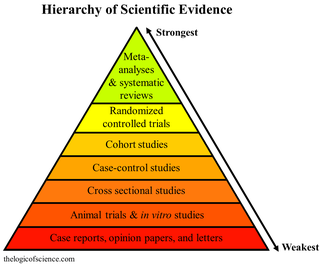

A hierarchy of evidence, comprising levels of evidence (LOEs), that is, evidence levels (ELs), is a heuristic used to rank the relative strength of results obtained from experimental research, especially medical research. There is broad agreement on the relative strength of large-scale, epidemiological studies. More than 80 different hierarchies have been proposed for assessing medical evidence. The design of the study and the endpoints measured affect the strength of the evidence. In clinical research, the best evidence for treatment efficacy is mainly from meta-analyses of randomized controlled trials (RCTs). Systematic reviews of completed, high-quality randomized controlled trials – such as those published by the Cochrane Collaboration – rank the same as systematic review of completed high-quality observational studies in regard to the study of side effects. Evidence hierarchies are often applied in evidence-based practices and are integral to evidence-based medicine (EBM).

Trevor A. Sheldon is a British academic and university administrator who is a former Deputy Vice-Chancellor of the University of York and Dean of Hull York Medical School. He has held academic posts at the University of York, the University of Leeds, the University of Leicester and Kingston University.

A research question is "a question that a research project sets out to answer". Choosing a research question is an essential element of both quantitative and qualitative research. Investigation will require data collection and analysis, and the methodology for this will vary widely. Good research questions seek to improve knowledge on an important topic, and are usually narrow and specific.

Normalization process theory (NPT) is a sociological theory, generally used in the fields of science and technology studies (STS), implementation research, and healthcare system research. The theory deals with the adoption of technological and organizational innovations into systems. Recent studies have also used it to evaluate new practices in social care and education settings. It was developed out of the normalization process model.

Patient participation is a trend that arose in answer to medical paternalism. Informed consent is a process where patients make decisions informed by the advice of medical professionals.

Critical appraisal, in evidence-based medicine, is the use of explicit, transparent methods to assess the data in published research, applying the rules of evidence to factors such as internal validity, adherence to reporting standards, conclusions, generalizability and risk-of-bias. Critical appraisal methods form a central part of the systematic review process. They are used in evidence synthesis to assist clinical decision-making, and are increasingly used in evidence-based social care and education provision.

The Enhancing the Quality and Transparency of health research Network is an international initiative aimed at promoting transparent and accurate reporting of health research studies to enhance the value and reliability of medical research literature. The EQUATOR Network is hosted by the University of Oxford, and was established with the goals of raising awareness of the importance of good reporting of research, assisting in the development, dissemination and implementation of reporting guidelines for different types of study designs, monitoring the status of the quality of reporting of research studies in the health sciences literature, and conducting research relating to issues that impact the quality of reporting of health research studies. The Network acts as an "umbrella" organisation, bringing together developers of reporting guidelines, medical journal editors and peer reviewers, research funding bodies, and other key stakeholders with a mutual interest in improving the quality of research publications and research itself. The EQUATOR Network comprises five centres at the University of Oxford, Bond University, Paris Descartes University, Ottawa Hospital Research Institute, and Hong Kong Baptist University.

Realist evaluation or realist review is a type of theory-driven evaluation method used in evaluating social programmes. It is based on the epistemological foundations of critical realism, though one of the originators of realist evaluation, Ray Pawson, who was "initially impressed" by how critical realism explains generative causation in experimental science, later criticised its "philosophical grandstanding" and "explain-all Marxism". Based on specific theories, realist evaluation provides an alternative lens to empiricist evaluation techniques for the study and understanding of programmes and policies. This technique assumes that knowledge is a social and historical product; thus the social and political context, as well as theoretical mechanisms, needs consideration in analysis of programme or policy effectiveness.

Health care quality is a level of value provided by any health care resource, as determined by some measurement. As with quality in other fields, it is an assessment of whether something is good enough and whether it is suitable for its purpose. The goal of health care is to provide medical resources of high quality to all who need them; that is, to ensure good quality of life, cure illnesses when possible, to extend life expectancy, and so on. Researchers use a variety of quality measures to attempt to determine health care quality, including counts of a therapy's reduction or lessening of diseases identified by medical diagnosis, a decrease in the number of risk factors which people have following preventive care, or a survey of health indicators in a population who are accessing certain kinds of care.

The Centre for Evidence-Based Medicine (CEBM), based in the Nuffield Department of Primary Care Health Sciences at the University of Oxford, is an academic-led centre dedicated to the practice, teaching, and dissemination of high quality evidence-based medicine to improve healthcare in everyday clinical practice. CEBM was founded by David Sackett in 1995. It was subsequently directed by Brian Haynes and Paul Glasziou. Since 2010 it has been led by Professor Carl Heneghan, a clinical epidemiologist and general practitioner.

WHO-CHOICE is an initiative started by the World Health Organization in 1998 to help countries choose their healthcare priorities. It is an example of priority-setting in global health. It was one of the earliest projects to perform sectoral cost-effectiveness analyses on a global scale. Findings from WHO-CHOICE have shaped the World Health Report of 2002, been published in the British Medical Journal in 2012, and been cited by charity evaluators and academics alongside DCP2 and the Copenhagen Consensus.

Journalology is the scholarly study of all aspects of the academic publishing process. The field seeks to improve the quality of scholarly research by implementing evidence-based practices in academic publishing. The term "journalology" was coined by Stephen Lock, the former editor-in-chief of the BMJ. The first Peer Review Congress, held in 1989 in Chicago, Illinois, is considered a pivotal moment in the founding of journalology as a distinct field. The field of journalology has been influential in pushing for study pre-registration in science, particularly in clinical trials. Clinical trial registration is now expected in most countries. Journalology researchers also work to reform the peer review process.

ReSPECT stands for Recommended Summary Plan for Emergency Care and Treatment. It is an emergency care and treatment plan (ECTP) used in parts of the United Kingdom, in which personalized recommendations for future emergency clinical care and treatment are created through discussion between health care professionals and a person. These recommendations are then documented on a ReSPECT form.

References

- Hussey PS, Anderson GF, Osborn R, Feek C, McLaughlin V, Millar J, Epstein A (2006-02-22). "How does the quality of care compare in five countries?". Health Affairs. 23 (3): 89–99. doi:10.1186/1748-5908-1-1. PMC 1436009. PMID 15160806.

- Peters DH, Adam T, Alonge O, Agyepong IA, Tran N (April 2014). "Republished research: Implementation research: what it is and how to do it: implementation research is a growing but not well understood field of health research that can contribute to more effective public health and clinical policies and programmes. This article provides a broad definition of implementation research and outlines key principles for how to do it". British Journal of Sports Medicine. 48 (8): 731–736. doi:10.1136/bmj.f6753. PMID 24659611. S2CID 52862104.

- WHO TDE. "Implementation research toolkit" (PDF). WHO TDR. Retrieved 14 March 2017.

- Mazzucca S, Tabak RG, Pilar M, Ramsey AT, Baumann AA, Kryzer E, et al. (2018). "Variation in Research Designs Used to Test the Effectiveness of Dissemination and Implementation Strategies: A Review". Frontiers in Public Health. 6: 32. doi:10.3389/fpubh.2018.00032. OCLC 7655581063. PMC 5826311. PMID 29515989.

- Pinnock H, Barwick M, Carpenter CR, Eldridge S, Grandes G, Griffiths CJ, et al. (March 2017). "Standards for Reporting Implementation Studies (StaRI) Statement". BMJ. 356: i6795. doi:10.1136/bmj.i6795. PMC 5421438. PMID 28264797.