Cognitive science is the interdisciplinary, scientific study of the mind and its processes. It examines the nature, the tasks, and the functions of cognition. Mental faculties of concern to cognitive scientists include language, perception, memory, attention, reasoning, and emotion; to understand these faculties, cognitive scientists borrow from fields such as linguistics, psychology, artificial intelligence, philosophy, neuroscience, and anthropology. The typical analysis of cognitive science spans many levels of organization, from learning and decision to logic and planning; from neural circuitry to modular brain organization. One of the fundamental concepts of cognitive science is that "thinking can best be understood in terms of representational structures in the mind and computational procedures that operate on those structures."

Neuroscience is the scientific study of the nervous system, its functions and disorders. It is a multidisciplinary science that combines physiology, anatomy, molecular biology, developmental biology, cytology, psychology, physics, computer science, chemistry, medicine, statistics, and mathematical modeling to understand the fundamental and emergent properties of neurons, glia and neural circuits. The understanding of the biological basis of learning, memory, behavior, perception, and consciousness has been described by Eric Kandel as the "epic challenge" of the biological sciences.

Cognitive neuroscience is the scientific field concerned with the biological processes that underlie cognition, with a specific focus on the neural connections in the brain that are involved in mental processes. It addresses the question of how cognitive activities are affected or controlled by neural circuits in the brain. Cognitive neuroscience is a branch of both neuroscience and psychology, overlapping with disciplines such as behavioral neuroscience, cognitive psychology, physiological psychology and affective neuroscience. Cognitive neuroscience relies upon theories in cognitive science coupled with evidence from neurobiology and computational modeling.

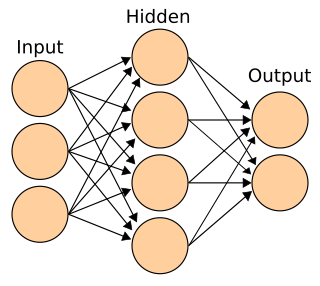

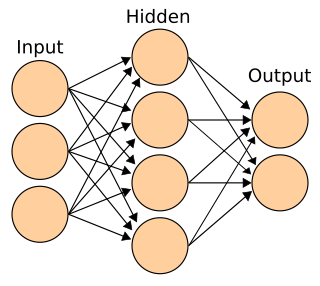

Connectionism is the name of an approach to the study of human mental processes and cognition that utilizes mathematical models known as connectionist networks or artificial neural networks. Connectionism has had many 'waves' since its beginnings.

Computational neuroscience is a branch of neuroscience which employs mathematics, computer science, theoretical analysis and abstractions of the brain to understand the principles that govern the development, structure, physiology and cognitive abilities of the nervous system.

Bio-inspired computing, short for biologically inspired computing, is a field of study which seeks to solve computer science problems using models of biology. It relates to connectionism, social behavior, and emergence. Within computer science, bio-inspired computing relates to artificial intelligence and machine learning. Bio-inspired computing is a major subset of natural computation.

James Lloyd "Jay" McClelland, FBA is the Lucie Stern Professor at Stanford University, where he was formerly the chair of the Psychology Department. He is best known for his work on statistical learning and Parallel Distributed Processing, applying connectionist models to explain cognitive phenomena such as spoken word recognition and visual word recognition. McClelland is largely responsible for the surge of scientific interest in connectionism in the 1980s.

A neural circuit is a population of neurons interconnected by synapses to carry out a specific function when activated. Multiple neural circuits interconnect with one another to form large scale brain networks.

Computational cognition is the study of the computational basis of learning and inference by mathematical modeling, computer simulation, and behavioral experiments. In psychology, it is an approach which develops computational models based on experimental results. It seeks to understand the basis of the human method of processing information. Early on, computational cognitive scientists sought to bring back and create a scientific form of Brentano's psychology.

Neurophilosophy or philosophy of neuroscience is the interdisciplinary study of neuroscience and philosophy that explores the relevance of neuroscientific studies to the arguments traditionally categorized as philosophy of mind. The philosophy of neuroscience attempts to clarify neuroscientific methods and results using the conceptual rigor and methods of philosophy of science.

David Everett Rumelhart was an American psychologist who made many contributions to the formal analysis of human cognition, working primarily within the frameworks of mathematical psychology, symbolic artificial intelligence, and parallel distributed processing. He also admired formal linguistic approaches to cognition, and explored the possibility of formulating a formal grammar to capture the structure of stories.

Neuroinformatics is the emergent field that combines informatics and neuroscience. Neuroinformatics is concerned with neuroscience data and with information processing by artificial neural networks. There are three main directions where neuroinformatics has to be applied:

Neural computation is the information processing performed by networks of neurons. Neural computation is affiliated with the philosophical tradition known as the computational theory of mind, also referred to as computationalism, which advances the thesis that neural computation explains cognition. The first to propose an account of neural activity as being computational were Warren McCulloch and Walter Pitts in their seminal 1943 paper, "A Logical Calculus of the Ideas Immanent in Nervous Activity".
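To illustrate the idea of neural activity as computation, the following is a minimal sketch of a binary threshold unit in the spirit of the 1943 paper. The function names (`threshold_unit`, `logical_and`, `logical_or`, `logical_not`) are hypothetical, and inhibition is modeled here with a negative weight, which is a simplification rather than the paper's own treatment of inhibitory inputs.

```python
def threshold_unit(inputs, weights, threshold):
    """Return 1 if the weighted sum of binary inputs reaches the threshold, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def logical_and(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return threshold_unit([a, b], [1, 1], threshold=2)


def logical_or(a, b):
    # A single active input is enough to reach a threshold of 1.
    return threshold_unit([a, b], [1, 1], threshold=1)


def logical_not(a):
    # Assumption: inhibition is approximated by a negative weight.
    return threshold_unit([a], [-1], threshold=0)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, logical_and(a, b), logical_or(a, b))
```

Because such units can realize AND, OR and NOT, networks of them can in principle express any Boolean function of their inputs.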

Computational neurogenetic modeling (CNGM) is concerned with the study and development of dynamic neuronal models for modeling brain functions with respect to genes and dynamic interactions between genes. These include neural network models and their integration with gene network models. This area brings together knowledge from various scientific disciplines, such as computer and information science, neuroscience and cognitive science, genetics and molecular biology, as well as engineering.

Bayesian approaches to brain function investigate the capacity of the nervous system to operate in situations of uncertainty in a fashion that is close to the optimum prescribed by Bayesian statistics. The term is used in behavioural sciences and neuroscience, and studies associated with it often strive to explain the brain's cognitive abilities based on statistical principles. It is frequently assumed that the nervous system maintains internal probabilistic models that are updated by neural processing of sensory information using methods approximating those of Bayesian probability.
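As a concrete illustration of updating an internal probabilistic model with sensory information, the sketch below combines a Gaussian prior belief with one noisy Gaussian observation. The function name `combine_gaussian` and the numbers are hypothetical; the Gaussian assumption is chosen only because it gives a closed-form posterior, not because the source prescribes it.

```python
def combine_gaussian(mu_prior, var_prior, observation, var_obs):
    """Posterior mean and variance for a Gaussian prior and a Gaussian observation."""
    precision_prior = 1.0 / var_prior
    precision_obs = 1.0 / var_obs
    # Precisions (inverse variances) add under Bayes' rule for Gaussians.
    var_post = 1.0 / (precision_prior + precision_obs)
    # The posterior mean is the precision-weighted average of prior and observation.
    mu_post = var_post * (precision_prior * mu_prior + precision_obs * observation)
    return mu_post, var_post


if __name__ == "__main__":
    # Illustrative values: a vague prior (variance 4) and a more reliable observation (variance 1).
    print(combine_gaussian(mu_prior=0.0, var_prior=4.0, observation=10.0, var_obs=1.0))
```

The estimate moves toward whichever source is more reliable, and the posterior variance is smaller than either input variance.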

A Bayesian Confidence Propagation Neural Network (BCPNN) is an artificial neural network inspired by Bayes' theorem, which regards neural computation and processing as probabilistic inference. Neural unit activations represent probability ("confidence") in the presence of input features or categories, synaptic weights are based on estimated correlations and the spread of activation corresponds to calculating posterior probabilities. It was originally proposed by Anders Lansner and Örjan Ekeberg at KTH Royal Institute of Technology. This probabilistic neural network model can also be run in generative mode to produce spontaneous activations and temporal sequences.
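The correspondence between weights, correlations and posteriors can be sketched as follows. This is a simplified batch estimate under stated assumptions, not the authors' learning rule: weights are taken as the log ratio of joint to marginal probabilities, biases as log priors, and a softmax normalizes the summed support. The names `train`, `infer` and the smoothing floor `eps` are hypothetical.

```python
import math


def train(patterns, labels, n_classes, eps=1e-3):
    """Estimate weights and biases from binary input patterns and class labels."""
    n = len(patterns)
    n_inputs = len(patterns[0])
    # Marginal probabilities, floored at eps to avoid log(0).
    p_in = [max(sum(p[i] for p in patterns) / n, eps) for i in range(n_inputs)]
    p_out = [max(labels.count(j) / n, eps) for j in range(n_classes)]
    weights = [[0.0] * n_classes for _ in range(n_inputs)]
    for i in range(n_inputs):
        for j in range(n_classes):
            joint = sum(1 for p, y in zip(patterns, labels) if p[i] == 1 and y == j) / n
            # Assumed weight form: log of joint probability over product of marginals.
            weights[i][j] = math.log(max(joint, eps * eps) / (p_in[i] * p_out[j]))
    biases = [math.log(p) for p in p_out]
    return weights, biases


def infer(x, weights, biases):
    """Sum support from active inputs and normalize it into a posterior estimate."""
    support = [
        b + sum(weights[i][j] for i in range(len(x)) if x[i] == 1)
        for j, b in enumerate(biases)
    ]
    m = max(support)
    exps = [math.exp(s - m) for s in support]  # subtract max for numerical stability
    z = sum(exps)
    return [e / z for e in exps]


if __name__ == "__main__":
    patterns = [[1, 0], [1, 0], [0, 1], [0, 1]]
    labels = [0, 0, 1, 1]
    w, b = train(patterns, labels, n_classes=2)
    print(infer([1, 0], w, b))
```

Under the assumption that input features are conditionally independent, the summed support matches a naive Bayes log posterior up to normalization.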

The network of the human nervous system is composed of nodes that are connected by links. The connectivity may be viewed anatomically, functionally, or electrophysiologically. These are presented in several Wikipedia articles that include Connectionism, Biological neural network, Artificial neural network, Computational neuroscience, as well as in several books by Ascoli, G. A. (2002), Sterratt, D., Graham, B., Gillies, A., & Willshaw, D. (2011), Gerstner, W., & Kistler, W. (2002), and Rumelhart, D. E., McClelland, J. L., and PDP Research Group (1986), among others. The focus of this article is a comprehensive view of modeling a neural network. Once an approach based on the perspective and connectivity is chosen, the models are developed at microscopic, mesoscopic, or macroscopic (system) levels. Computational modeling refers to models that are developed using computing tools.

In neuroscience, predictive coding is a theory of brain function which postulates that the brain is constantly generating and updating a "mental model" of the environment. According to the theory, such a mental model is used to predict input signals from the senses that are then compared with the actual input signals from those senses. With the rising popularity of representation learning, the theory is being actively pursued and applied in machine learning and related fields.
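The predict-compare-update loop described above can be sketched in a few lines. This is a deliberately minimal, single-level illustration: the internal model is one scalar estimate, the prediction is assumed to equal that estimate, and the update is a fixed fraction of the prediction error. The names `predictive_coding_step`, `run` and `learning_rate` are hypothetical.

```python
def predictive_coding_step(estimate, sensory_input, learning_rate=0.1):
    """Predict the input, compare it with the actual input, and update the estimate."""
    prediction = estimate  # assumption: the model predicts its own current estimate
    error = sensory_input - prediction
    return estimate + learning_rate * error, error


def run(inputs, estimate=0.0, learning_rate=0.1):
    """Process a sequence of inputs and record the absolute prediction errors."""
    errors = []
    for x in inputs:
        estimate, error = predictive_coding_step(estimate, x, learning_rate)
        errors.append(abs(error))
    return estimate, errors


if __name__ == "__main__":
    final_estimate, errors = run([1.0] * 50)
    print(final_estimate, errors[0], errors[-1])
```

With a constant input, the prediction error shrinks at every step as the estimate converges toward the input value.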

Claudia Clopath is a Professor of Computational Neuroscience at Imperial College London and research leader at the Sainsbury Wellcome Centre for Neural Circuits and Behaviour. She develops mathematical models to predict synaptic plasticity for both medical applications and the design of human-like machines.

A neural network is a group of interconnected units called neurons that send signals to one another. Neurons can be either biological cells or mathematical models. While individual neurons are simple, many of them together in a network can perform complex tasks. There are two main types of neural network.