In mathematics, an inner product space is a real vector space or a complex vector space with an operation called an inner product. The inner product of two vectors in the space is a scalar, often denoted with angle brackets, as in ⟨a, b⟩. Inner products allow formal definitions of intuitive geometric notions, such as lengths, angles, and orthogonality of vectors. Inner product spaces generalize Euclidean vector spaces, in which the inner product is the dot product or scalar product of Cartesian coordinates. Inner product spaces of infinite dimension are widely used in functional analysis. Inner product spaces over the field of complex numbers are sometimes referred to as unitary spaces. The first usage of the concept of a vector space with an inner product is due to Giuseppe Peano, in 1898.
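As a minimal sketch of how an inner product yields lengths and angles, the following uses NumPy and assumes the standard inner product on ℝⁿ or ℂⁿ (conjugate-linear in the first argument). The helper names `inner`, `norm`, and `angle` are illustrative and do not come from the text above.

```python
import numpy as np

def inner(u, v):
    """Standard inner product; np.vdot conjugates its first argument."""
    return np.vdot(u, v)

def norm(u):
    """Length induced by the inner product: sqrt(<u, u>)."""
    return np.sqrt(inner(u, u).real)

def angle(u, v):
    """Angle in radians between two real vectors."""
    cos_theta = inner(u, v).real / (norm(u) * norm(v))
    # Clip to guard against rounding slightly outside [-1, 1].
    return np.arccos(np.clip(cos_theta, -1.0, 1.0))

a = np.array([1.0, 0.0])
b = np.array([1.0, 1.0])
c = np.array([0.0, 3.0])

length_b = norm(b)           # sqrt(2)
theta = angle(a, b)          # pi / 4
a_dot_c = inner(a, c)        # 0, so a and c are orthogonal
```

Here orthogonality is simply the statement that the inner product vanishes, as it does for `a` and `c`.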

In mathematics, Legendre polynomials, named after Adrien-Marie Legendre (1782), are a system of complete and orthogonal polynomials with a vast number of mathematical properties and numerous applications. They can be defined in many ways, and the various definitions highlight different aspects as well as suggest generalizations and connections to different mathematical structures and physical and numerical applications.
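The orthogonality of the Legendre polynomials on [−1, 1] can be checked numerically. The sketch below assumes the usual unweighted inner product ⟨f, g⟩ = ∫ f(x) g(x) dx over [−1, 1] and uses Gauss–Legendre quadrature from NumPy; the function name `legendre_inner` is illustrative.

```python
import numpy as np
from numpy.polynomial.legendre import Legendre, leggauss

# A 20-point Gauss-Legendre rule integrates polynomials up to degree 39 exactly.
nodes, weights = leggauss(20)

def legendre_inner(m, n):
    """Approximate the integral of P_m(x) * P_n(x) over [-1, 1]."""
    Pm = Legendre.basis(m)
    Pn = Legendre.basis(n)
    return np.sum(weights * Pm(nodes) * Pn(nodes))

off_diagonal = legendre_inner(2, 3)   # close to 0 (orthogonal)
diagonal = legendre_inner(3, 3)       # close to 2 / (2*3 + 1) = 2/7
```

The diagonal value reflects the standard normalization ⟨Pₙ, Pₙ⟩ = 2/(2n + 1), so the Legendre polynomials are orthogonal but not orthonormal under this inner product.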

In quantum physics, a wave function is a mathematical description of the quantum state of an isolated quantum system. The most common symbols for a wave function are the Greek letters ψ and Ψ. Wave functions are complex-valued. For example, a wave function might assign a complex number to each point in a region of space. The Born rule provides the means to turn these complex probability amplitudes into actual probabilities. In one common form, it says that the squared modulus of a wave function that depends upon position is the probability density of measuring a particle as being at a given place. The integral of a wave function's squared modulus over all the system's degrees of freedom must be equal to 1, a condition called normalization. Since the wave function is complex-valued, only its relative phase and relative magnitude can be measured; its value does not, in isolation, tell anything about the magnitudes or directions of measurable observables. One has to apply quantum operators, whose eigenvalues correspond to sets of possible results of measurements, to the wave function ψ and calculate the statistical distributions for measurable quantities.
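The normalization condition and the Born rule can be illustrated on a grid. The sketch below assumes a one-dimensional Gaussian wave packet, chosen purely for illustration, and approximates the integrals with a simple Riemann sum; the grid limits, the wave number 3.0, and all variable names are assumptions.

```python
import numpy as np

x = np.linspace(-10.0, 10.0, 2001)
dx = x[1] - x[0]

# Unnormalized complex wave function: Gaussian envelope times a plane wave.
psi = np.exp(-x**2 / 2) * np.exp(1j * 3.0 * x)

# Normalize so that the integral of |psi|^2 equals 1.
norm_sq = np.sum(np.abs(psi) ** 2) * dx
psi_normalized = psi / np.sqrt(norm_sq)

# Born rule: |psi|^2 is the probability density for position.
density = np.abs(psi_normalized) ** 2
total_probability = np.sum(density) * dx

# Probability of finding the particle at x > 0 (about one half by symmetry).
prob_positive = np.sum(density[x > 0]) * dx
```

The plane-wave factor changes only the phase, so it has no effect on the position density.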

In mathematics, particularly in linear algebra, a skew-symmetric matrix is a square matrix whose transpose equals its negative. That is, it satisfies the condition Aᵀ = −A.
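A short sketch of the defining condition, transpose equals negative, using NumPy. The helper `is_skew_symmetric` and the example matrix `M` are illustrative; the construction `M - M.T` is one standard way to obtain a skew-symmetric matrix from any square matrix.

```python
import numpy as np

def is_skew_symmetric(A, tol=1e-12):
    """True if A is square and its transpose equals its negative."""
    A = np.asarray(A)
    return A.ndim == 2 and A.shape[0] == A.shape[1] and np.allclose(A.T, -A, atol=tol)

M = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0],
              [7.0, 8.0, 10.0]])

# (M - M^T)^T = M^T - M = -(M - M^T), so A is skew-symmetric.
A = M - M.T
```

The condition forces every diagonal entry to equal its own negative, so the diagonal of `A` is zero.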

The Chebyshev polynomials are two sequences of polynomials related to the cosine and sine functions, notated as Tₙ(x) and Uₙ(x). They can be defined in several equivalent ways, one of which starts with trigonometric functions: Tₙ(cos θ) = cos(nθ) and Uₙ(cos θ) sin θ = sin((n + 1)θ).
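The trigonometric characterization, Tₙ(cos θ) = cos(nθ) for the first kind and Uₙ(cos θ) sin θ = sin((n + 1)θ) for the second kind, can be checked numerically. This sketch uses NumPy's `Chebyshev.basis` for Tₙ and a hand-written three-term recurrence for Uₙ; the helper name `cheb_U` is illustrative.

```python
import numpy as np
from numpy.polynomial.chebyshev import Chebyshev

def cheb_U(n, x):
    """Second-kind Chebyshev polynomial U_n(x) via
    U_0 = 1, U_1 = 2x, U_{k+1} = 2x U_k - U_{k-1}."""
    x = np.asarray(x, dtype=float)
    u_prev, u_curr = np.ones_like(x), 2.0 * x
    if n == 0:
        return u_prev
    for _ in range(n - 1):
        u_prev, u_curr = u_curr, 2.0 * x * u_curr - u_prev
    return u_curr

theta = np.linspace(0.1, 3.0, 50)
n = 5

# T_n(cos(theta)) should equal cos(n * theta).
first_kind_ok = np.allclose(Chebyshev.basis(n)(np.cos(theta)), np.cos(n * theta))

# U_n(cos(theta)) * sin(theta) should equal sin((n + 1) * theta).
second_kind_ok = np.allclose(cheb_U(n, np.cos(theta)) * np.sin(theta),
                             np.sin((n + 1) * theta))
```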

In linear algebra, two vectors in an inner product space are orthonormal if they are orthogonal unit vectors. A unit vector is a vector of length 1; such a vector is also said to be normalized. Orthogonal means that the vectors are perpendicular to each other. A set of vectors forms an orthonormal set if all vectors in the set are mutually orthogonal and all are of unit length. An orthonormal set which forms a basis is called an orthonormal basis.

In mathematics, particularly linear algebra, an orthonormal basis for an inner product space V with finite dimension is a basis for V whose vectors are orthonormal, that is, they are all unit vectors and orthogonal to each other. For example, the standard basis for a Euclidean space ℝⁿ is an orthonormal basis, where the relevant inner product is the dot product of vectors. The image of the standard basis under a rotation or reflection is also orthonormal, and every orthonormal basis for ℝⁿ arises in this fashion.
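A minimal check of orthonormality under the dot product: if the basis vectors are stored as the columns of a matrix V, they are orthonormal exactly when VᵀV is the identity. The sketch below also rotates the standard basis of the plane to illustrate that the image remains orthonormal; the helper `is_orthonormal` and the rotation angle are illustrative choices.

```python
import numpy as np

def is_orthonormal(vectors, tol=1e-12):
    """True if the columns of `vectors` are mutually orthogonal unit vectors."""
    V = np.asarray(vectors, dtype=float)
    return np.allclose(V.T @ V, np.eye(V.shape[1]), atol=tol)

standard_basis = np.eye(2)

# Rotation of the plane by 30 degrees.
angle = np.pi / 6
rotation = np.array([[np.cos(angle), -np.sin(angle)],
                     [np.sin(angle),  np.cos(angle)]])

# Columns are the images of the standard basis vectors under the rotation.
rotated_basis = rotation @ standard_basis

# A basis that is not orthonormal, for contrast.
skewed_basis = np.array([[1.0, 1.0],
                         [0.0, 1.0]])
```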

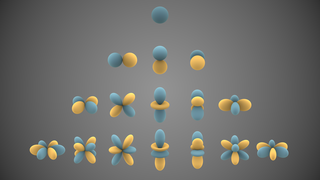

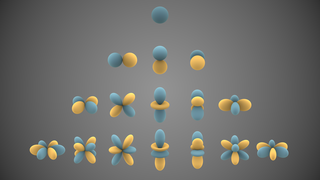

In mathematics and physical science, spherical harmonics are special functions defined on the surface of a sphere. They are often employed in solving partial differential equations in many scientific fields. A list of the spherical harmonics is available in Table of spherical harmonics.

A weight function is a mathematical device used when performing a sum, integral, or average to give some elements more "weight" or influence on the result than other elements in the same set. The result of this application of a weight function is a weighted sum or weighted average. Weight functions occur frequently in statistics and analysis, and are closely related to the concept of a measure. Weight functions can be employed in both discrete and continuous settings. They can be used to construct systems of calculus called "weighted calculus" and "meta-calculus".
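In the discrete setting, a weighted sum multiplies each element by its weight before adding, and a weighted average divides that sum by the total weight. A small sketch with made-up values and weights:

```python
import numpy as np

values = np.array([2.0, 4.0, 6.0])
weights = np.array([1.0, 1.0, 2.0])   # the last element counts double

weighted_sum = np.sum(weights * values)            # 1*2 + 1*4 + 2*6 = 18
weighted_mean = weighted_sum / np.sum(weights)     # 18 / 4 = 4.5

# NumPy provides the same weighted average directly.
weighted_mean_np = np.average(values, weights=weights)
```

With equal weights the result reduces to the ordinary arithmetic mean, here 4.0.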

In mathematical analysis, many generalizations of Fourier series have proved to be useful. They are all special cases of decompositions over an orthonormal basis of an inner product space. Here we consider the case of square-integrable functions defined on an interval of the real line, which is important for, among other things, interpolation theory.
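As one concrete instance, a function on [−1, 1] can be expanded in Legendre polynomials. The sketch below assumes the unweighted inner product on [−1, 1]; because the Legendre polynomials are orthogonal but not normalized, each coefficient is ⟨f, Pₙ⟩ / ⟨Pₙ, Pₙ⟩ with ⟨Pₙ, Pₙ⟩ = 2/(2n + 1). The test function exp, the truncation degree 8, and the helper name `legendre_coefficients` are illustrative choices.

```python
import numpy as np
from numpy.polynomial.legendre import Legendre, leggauss

# Gauss-Legendre quadrature for the inner-product integrals on [-1, 1].
nodes, weights = leggauss(50)

def legendre_coefficients(f, degree):
    """Coefficients c_n = <f, P_n> / <P_n, P_n> for n = 0..degree."""
    coeffs = []
    for n in range(degree + 1):
        Pn = Legendre.basis(n)
        inner_f_Pn = np.sum(weights * f(nodes) * Pn(nodes))
        coeffs.append((2 * n + 1) / 2 * inner_f_Pn)
    return np.array(coeffs)

coeffs = legendre_coefficients(np.exp, 8)
approx = Legendre(coeffs)   # truncated generalized Fourier series

x = np.linspace(-1.0, 1.0, 201)
max_error = np.max(np.abs(approx(x) - np.exp(x)))
```

For a smooth function such as exp, the coefficients decay quickly, so a low-degree truncation already gives a small error on the interval.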

In the theory of stochastic processes, the Karhunen–Loève theorem, also known as the Kosambi–Karhunen–Loève theorem, states that a stochastic process can be represented as an infinite linear combination of orthogonal functions, analogous to a Fourier series representation of a function on a bounded interval. The transformation is also known as the Hotelling transform and the eigenvector transform, and is closely related to the principal component analysis (PCA) technique widely used in image processing and in data analysis in many fields.

In linear algebra, the Gram matrix of a set of vectors v₁, …, vₙ in an inner product space is the Hermitian matrix of inner products, whose entries are given by Gᵢⱼ = ⟨vᵢ, vⱼ⟩. If the vectors v₁, …, vₙ are the columns of a matrix X, then the Gram matrix is X†X (the conjugate transpose of X times X) in the general case that the vector coordinates are complex numbers, which simplifies to XᵀX for the case that the vector coordinates are real numbers.
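A small sketch of the Gram matrix of the columns of a complex matrix, computed as the conjugate transpose of the matrix times the matrix itself. It assumes the standard inner product on ℂⁿ, conjugate-linear in the first argument; the example matrix `X` is made up.

```python
import numpy as np

# The vectors are the columns of X: v1 = (1+i, 0), v2 = (2, i).
X = np.array([[1.0 + 1.0j, 2.0],
              [0.0,        1.0j]])

# Gram matrix: G[i, j] = <v_i, v_j> = conj(v_i) . v_j
G = X.conj().T @ X

# The same entry computed directly from the two column vectors.
g_01 = np.vdot(X[:, 0], X[:, 1])   # np.vdot conjugates its first argument
```

For real coordinates the conjugation has no effect and the expression reduces to `X.T @ X`.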

In the mathematical fields of linear algebra and functional analysis, the orthogonal complement of a subspace W of a vector space V equipped with a bilinear form B is the set W⊥ of all vectors in V that are orthogonal to every vector in W. Informally, it is called the perp, short for perpendicular complement. It is a subspace of V.
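For a concrete computation, the sketch below finds the orthogonal complement of a subspace of ℝⁿ. It assumes the bilinear form is the standard dot product and that W is given as the span of the columns of a matrix. Using the singular value decomposition here is an implementation choice, and the helper name `orthogonal_complement` is illustrative.

```python
import numpy as np

def orthogonal_complement(W, tol=1e-10):
    """Return a matrix whose columns form an orthonormal basis of the
    orthogonal complement of span(columns of W) under the dot product."""
    W = np.asarray(W, dtype=float)
    # Full SVD: the first `rank` left singular vectors span the column
    # space of W; the remaining ones span its orthogonal complement.
    U, s, _ = np.linalg.svd(W, full_matrices=True)
    rank = int(np.sum(s > tol))
    return U[:, rank:]

# W is a plane in R^3 spanned by two vectors.
W = np.array([[1.0, 0.0],
              [1.0, 1.0],
              [0.0, 1.0]])

W_perp = orthogonal_complement(W)   # a single column: the normal direction
```

In this finite-dimensional setting the dimensions of W and its complement add up to the dimension of the ambient space, here 2 + 1 = 3.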

Clenshaw–Curtis quadrature and Fejér quadrature are methods for numerical integration, or "quadrature", that are based on an expansion of the integrand in terms of Chebyshev polynomials. Equivalently, they employ a change of variables and use a discrete cosine transform (DCT) approximation for the cosine series. Besides having fast-converging accuracy comparable to Gaussian quadrature rules, Clenshaw–Curtis quadrature naturally leads to nested quadrature rules, which is important for both adaptive quadrature and multidimensional quadrature (cubature).

In mathematics, the secondary measure associated with a measure of positive density ρ, when there is one, is a measure of positive density μ that turns the secondary polynomials associated with the orthogonal polynomials for ρ into an orthogonal system.

In mathematics, Hilbert spaces allow the methods of linear algebra and calculus to be generalized from (finite-dimensional) Euclidean vector spaces to spaces that may be infinite-dimensional. Hilbert spaces arise naturally and frequently in mathematics and physics, typically as function spaces. Formally, a Hilbert space is a vector space equipped with an inner product that induces a distance function for which the space is a complete metric space.

In mathematics, discrete Chebyshev polynomials, or Gram polynomials, are a type of discrete orthogonal polynomials used in approximation theory, introduced by Pafnuty Chebyshev and rediscovered by Gram. They were later found to be applicable to various algebraic properties of spin angular momentum.

In mathematics, an orthogonal polynomial sequence is a family of polynomials such that any two different polynomials in the sequence are orthogonal to each other under some inner product.

In machine learning, the radial basis function kernel, or RBF kernel, is a popular kernel function used in various kernelized learning algorithms. In particular, it is commonly used in support vector machine classification.
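The RBF kernel is commonly written as K(x, x′) = exp(−‖x − x′‖² / (2σ²)). The sketch below computes the kernel matrix for a small set of points with NumPy. The bandwidth parameter name `sigma`, its default value, and the sample points are assumptions made for illustration.

```python
import numpy as np

def rbf_kernel(X, Y, sigma=1.0):
    """Kernel matrix K[i, j] = exp(-||X[i] - Y[j]||^2 / (2 * sigma^2))."""
    X = np.atleast_2d(X)
    Y = np.atleast_2d(Y)
    # Pairwise squared Euclidean distances via broadcasting.
    sq_dists = np.sum((X[:, None, :] - Y[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq_dists / (2.0 * sigma ** 2))

points = np.array([[0.0, 0.0],
                   [1.0, 0.0],
                   [0.0, 2.0]])

K = rbf_kernel(points, points)
```

The resulting matrix is symmetric with ones on the diagonal, and entries decay toward zero as points move apart.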

In mathematics, orthogonality is the generalization of the geometric notion of perpendicularity to the linear algebra of bilinear forms.