In mathematics, analytic geometry, also known as coordinate geometry or Cartesian geometry, is the study of geometry using a coordinate system. This contrasts with synthetic geometry.

In mathematics, an equation is a mathematical formula that expresses the equality of two expressions, by connecting them with the equals sign =. The word equation and its cognates in other languages may have subtly different meanings; for example, in French an équation is defined as containing one or more variables, while in English, any well-formed formula consisting of two expressions related with an equals sign is an equation.

In statistics, an estimator is a rule for calculating an estimate of a given quantity based on observed data: thus the rule (the estimator), the quantity of interest (the estimand) and its result (the estimate) are distinguished. For example, the sample mean is a commonly used estimator of the population mean.
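The distinction between rule, quantity of interest and result can be made concrete with a minimal sketch. The function name `sample_mean`, the normal population and the value 5.0 are assumptions chosen only for illustration; in practice the population mean is unknown.

```python
import random

def sample_mean(observations):
    """The rule (estimator): maps observed data to a single number."""
    return sum(observations) / len(observations)

# Assumed population for illustration: normal with mean 5.0 and standard deviation 2.0.
population_mean = 5.0            # the quantity of interest
rng = random.Random(0)           # fixed seed so the run is repeatable
data = [rng.gauss(population_mean, 2.0) for _ in range(10_000)]

estimate = sample_mean(data)     # the result of applying the rule to the data
print(f"estimate = {estimate:.3f}, quantity of interest = {population_mean}")
```

Applying the same rule to a different sample would give a different estimate, which is why the rule and its result are kept apart.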

Supervised learning (SL) is a paradigm in machine learning in which a model is trained on input objects paired with desired output values. The training data is processed to build a function that maps new data to expected output values. In the optimal scenario, the algorithm correctly determines output values for unseen instances. This requires the learning algorithm to generalize from the training data to unseen situations in a "reasonable" way. This statistical quality of an algorithm is measured through the so-called generalization error.
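As a rough illustration, the sketch below trains a one-variable least-squares line on labelled pairs and then measures its error on held-out pairs, which serves as an empirical stand-in for the generalization error. The synthetic data (y = 2x + 1 plus noise), the 150/50 split and the helper names are all assumptions made for this example.

```python
import random

def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def mean_squared_error(model, xs, ys):
    """Average squared difference between predictions and desired outputs."""
    slope, intercept = model
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Assumed synthetic data: inputs in [0, 10], outputs y = 2x + 1 plus Gaussian noise.
rng = random.Random(1)
xs = [rng.uniform(0.0, 10.0) for _ in range(200)]
ys = [2.0 * x + 1.0 + rng.gauss(0.0, 0.5) for x in xs]

# The first 150 pairs train the model; the last 50 are never seen during training.
train_x, train_y = xs[:150], ys[:150]
test_x, test_y = xs[150:], ys[150:]

model = fit_line(train_x, train_y)
train_error = mean_squared_error(model, train_x, train_y)
test_error = mean_squared_error(model, test_x, test_y)
print(f"slope={model[0]:.3f} intercept={model[1]:.3f}")
print(f"train MSE={train_error:.3f} held-out MSE={test_error:.3f}")
```

A held-out error close to the training error suggests that the learned function generalizes to unseen instances drawn from the same distribution.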

A parameter, generally, is any characteristic that can help in defining or classifying a particular system. That is, a parameter is an element of a system that is useful, or critical, when identifying the system, or when evaluating its performance, status, condition, etc.

In mathematics, a quadric or quadric surface (quadric hypersurface in higher dimensions) is a generalization of conic sections (ellipses, parabolas, and hyperbolas). It is a hypersurface (of dimension D) in a (D + 1)-dimensional space, and it is defined as the zero set of an irreducible polynomial of degree two in D + 1 variables; for example, D = 1 in the case of conic sections. When the defining polynomial is not absolutely irreducible, the zero set is generally not considered a quadric, although it is often called a degenerate quadric or a reducible quadric.
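For the case D = 1, a degree-two polynomial in two variables can be written as A x² + B xy + C y² + D x + E y + F. The sketch below classifies its zero set using the standard discriminant B² − 4AC. It assumes the conic is non-degenerate and does not check that assumption; the function name is made up for this example.

```python
def classify_conic(a, b, c):
    """Classify a non-degenerate conic a*x^2 + b*x*y + c*y^2 + (lower-order terms) = 0.

    Only the quadratic coefficients matter for the type. Degenerate cases
    (for example, a pair of lines) are NOT detected by this sketch.
    """
    discriminant = b * b - 4 * a * c
    if discriminant < 0:
        return "ellipse"
    if discriminant == 0:
        return "parabola"
    return "hyperbola"

print(classify_conic(1, 0, 1))    # x^2 + y^2 - 1 = 0
print(classify_conic(1, 0, 0))    # x^2 - y = 0
print(classify_conic(1, 0, -1))   # x^2 - y^2 - 1 = 0
```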

In statistics, completeness is a property of a statistic in relation to a parameterised model for a set of observed data.

In mathematics, conformal geometry is the study of the set of angle-preserving (conformal) transformations on a space.

In Vapnik–Chervonenkis theory, the Vapnik–Chervonenkis (VC) dimension is a measure of the size (capacity or expressive power) of a class of sets. The notion can be extended to classes of binary functions. It is defined as the cardinality of the largest set of points that the class, or a learning algorithm that draws its classifiers from it, can shatter. Shattering means that, for at least one configuration of that many points, every possible labeling of the points is reproduced exactly by some classifier in the class. It was originally defined by Vladimir Vapnik and Alexey Chervonenkis.
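Shattering can be checked by brute force for a small class. The sketch below uses closed intervals [a, b] on the real line as the classifiers (a point is labelled positive when it lies inside the interval); this choice of class and the function names are assumptions made for illustration. It is enough to try intervals whose endpoints are sample points, because any interval labels a contiguous run of the sorted points.

```python
def interval_labelings(points):
    """All labelings of distinct `points` achievable by some closed interval [a, b]."""
    labelings = {tuple(False for _ in points)}      # an interval containing no point
    ordered = sorted(points)
    for i, a in enumerate(ordered):
        for b in ordered[i:]:
            labelings.add(tuple(a <= p <= b for p in points))
    return labelings

def is_shattered(points):
    """True when every one of the 2^n labelings is achieved by some interval."""
    return len(interval_labelings(points)) == 2 ** len(points)

print(is_shattered([0.0, 1.0]))        # True
print(is_shattered([0.0, 1.0, 2.0]))   # False
```

Two points can be shattered, but for three points the labeling (positive, negative, positive) is out of reach of any single interval, so the VC dimension of this class is 2.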

Nonlinear dimensionality reduction, also known as manifold learning, refers to various related techniques that aim to project high-dimensional data onto lower-dimensional latent manifolds, with the goal of either visualizing the data in the low-dimensional space, or learning the mapping itself. The techniques described below can be understood as generalizations of linear decomposition methods used for dimensionality reduction, such as singular value decomposition and principal component analysis.

In mathematics, a parametric equation defines a group of quantities as functions of one or more independent variables called parameters. Parametric equations are commonly used to express the coordinates of the points that make up a geometric object such as a curve or surface, called a parametric curve and parametric surface, respectively. In such cases, the equations are collectively called a parametric representation, or parametric system, or parameterization of the object.
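A familiar instance is the circle of radius r, whose coordinates are given by x = r cos t and y = r sin t with the single parameter t. The following minimal sketch samples such a parametric curve; the function name and the choice of eight sample values are illustrative assumptions.

```python
import math

def circle_point(t, radius=1.0):
    """Parametric representation of a circle centred at the origin."""
    return radius * math.cos(t), radius * math.sin(t)

# Eight evenly spaced parameter values in [0, 2*pi) on a circle of radius 2.
points = [circle_point(2 * math.pi * k / 8, radius=2.0) for k in range(8)]
for x, y in points:
    print(f"({x:+.3f}, {y:+.3f})")
```

Every sampled point satisfies the implicit equation x² + y² = r², which is the same curve described without a parameter.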

In statistics, a mixture model is a probabilistic model for representing the presence of subpopulations within an overall population, without requiring that an observed data set should identify the sub-population to which an individual observation belongs. Formally a mixture model corresponds to the mixture distribution that represents the probability distribution of observations in the overall population. However, while problems associated with "mixture distributions" relate to deriving the properties of the overall population from those of the sub-populations, "mixture models" are used to make statistical inferences about the properties of the sub-populations given only observations on the pooled population, without sub-population identity information. Mixture models are used for clustering, under the name model-based clustering, and also for density estimation.
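A two-component Gaussian mixture gives a small concrete case. In the sketch below, the weights, means and standard deviations are assumed values chosen for illustration. The sampler draws a sub-population label and then discards it, so only the pooled observation is returned, mirroring the setting described above.

```python
import math
import random

def normal_pdf(x, mu, sigma):
    """Density of a normal distribution with mean mu and standard deviation sigma."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def mixture_pdf(x, weights, mus, sigmas):
    """Mixture density: weighted sum of the component densities."""
    return sum(w * normal_pdf(x, m, s) for w, m, s in zip(weights, mus, sigmas))

def sample_mixture(rng, weights, mus, sigmas):
    """Draw one observation; the component label k is not returned."""
    k = rng.choices(range(len(weights)), weights=weights)[0]
    return rng.gauss(mus[k], sigmas[k])

# Assumed parameters: 30% of the population near 0, 70% near 5.
weights, mus, sigmas = [0.3, 0.7], [0.0, 5.0], [1.0, 1.0]
rng = random.Random(42)
samples = [sample_mixture(rng, weights, mus, sigmas) for _ in range(20_000)]

pooled_mean = sum(samples) / len(samples)
print(f"pooled sample mean = {pooled_mean:.3f}")   # theoretical value: 0.3*0 + 0.7*5 = 3.5
print(f"density at x = 5: {mixture_pdf(5.0, weights, mus, sigmas):.4f}")
```

Inference with a mixture model runs in the opposite direction: given only `samples`, recover the weights and component parameters.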

Robust statistics are statistics that maintain their properties even if the underlying distributional assumptions are incorrect. Robust statistical methods have been developed for many common problems, such as estimating location, scale, and regression parameters. One motivation is to produce statistical methods that are not unduly affected by outliers. Another motivation is to provide methods with good performance when there are small departures from a parametric distribution. For example, robust methods work well for mixtures of two normal distributions with different standard deviations; under this model, non-robust methods like a t-test work poorly.
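The effect of a single outlier on a location estimate can be seen in a few lines. The measurements below are made-up values for illustration; the sketch compares the sample mean (not robust) with the sample median (robust) before and after one gross error is added.

```python
import statistics

clean = [9.8, 10.1, 10.0, 9.9, 10.2]      # hypothetical measurements
contaminated = clean + [1000.0]           # the same data plus one gross outlier

mean_clean = statistics.mean(clean)
mean_contaminated = statistics.mean(contaminated)
median_clean = statistics.median(clean)
median_contaminated = statistics.median(contaminated)

print(f"mean:   {mean_clean:.2f} -> {mean_contaminated:.2f}")
print(f"median: {median_clean:.2f} -> {median_contaminated:.2f}")
```

The mean moves from about 10 to about 175, while the median moves only from 10.0 to 10.05.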

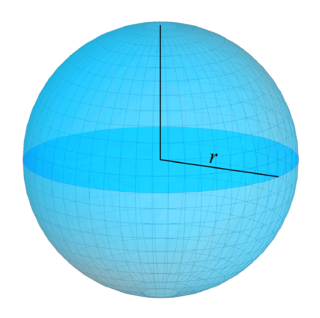

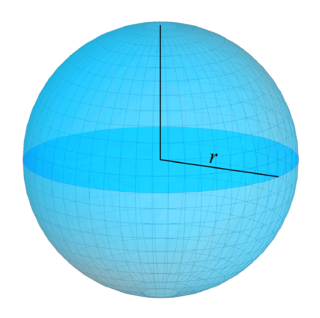

In geometry, a three-dimensional space is a mathematical space in which three values (coordinates) are required to determine the position of a point. Most commonly, it is the three-dimensional Euclidean space, that is, the Euclidean space of dimension three, which models physical space. More general three-dimensional spaces are called 3-manifolds. The term may also refer colloquially to a subset of space, a three-dimensional region, a solid figure.

In statistics, M-estimators are a broad class of extremum estimators for which the objective function is a sample average. Both non-linear least squares and maximum likelihood estimation are special cases of M-estimators. The definition of M-estimators was motivated by robust statistics, which contributed new types of M-estimators. However, M-estimators are not inherently robust, as is clear from the fact that they include maximum likelihood estimators, which are in general not robust. The statistical procedure of evaluating an M-estimator on a data set is called M-estimation.
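One M-estimator that arose from robust statistics is Huber's estimator of location, which minimizes the sample average of a loss that is quadratic for small residuals and linear for large ones. The sketch below is a simplified version under stated assumptions: the tuning constant `k = 1.0` is arbitrary, residuals are not rescaled by a scale estimate, and the minimizer is found by a fixed number of reweighting passes starting from the median.

```python
import statistics

def huber_rho(residual, k):
    """Huber loss: quadratic within [-k, k], linear outside."""
    if abs(residual) <= k:
        return 0.5 * residual * residual
    return k * (abs(residual) - 0.5 * k)

def objective(theta, data, k):
    """The M-estimation objective: a sample average of per-observation losses."""
    return sum(huber_rho(x - theta, k) for x in data) / len(data)

def huber_location(data, k=1.0, iterations=50):
    """Minimize the objective by iteratively reweighted averaging."""
    theta = statistics.median(data)
    for _ in range(iterations):
        # Observations far from theta receive weight k/|residual| < 1.
        weights = [1.0 if abs(x - theta) <= k else k / abs(x - theta) for x in data]
        theta = sum(w * x for w, x in zip(weights, data)) / sum(weights)
    return theta

data = [9.8, 10.1, 10.0, 9.9, 10.2, 1000.0]    # hypothetical data with one outlier
estimate = huber_location(data)
print(f"Huber estimate = {estimate:.4f}")
print(f"objective at estimate = {objective(estimate, data, 1.0):.4f}")
```

Replacing `huber_rho` with the squared residual turns the same objective into least squares, whose minimizer is the sample mean; this shows that membership in the M-estimator class does not by itself imply robustness.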

In mathematics, a surface is a mathematical model of the common concept of a surface. It is a generalization of a plane, but, unlike a plane, it may be curved; this is analogous to a curve generalizing a straight line.

Lie sphere geometry is a geometrical theory of planar or spatial geometry in which the fundamental concept is the circle or sphere. It was introduced by Sophus Lie in the nineteenth century. The main idea which leads to Lie sphere geometry is that lines should be regarded as circles of infinite radius and that points in the plane should be regarded as circles of zero radius.

In statistics and econometrics, extremum estimators are a wide class of estimators for parametric models that are calculated through maximization (or minimization) of a certain objective function, which depends on the data. The general theory of extremum estimators was developed by Amemiya (1985).
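Maximum likelihood is one such estimator: the objective function is the log-likelihood of the data. The sketch below assumes a Bernoulli model with 7 successes and 3 failures (made-up counts) and maximizes the objective by a plain grid search, which is a crude stand-in for a numerical optimizer.

```python
import math

def log_likelihood(p, successes, failures):
    """Objective function: Bernoulli log-likelihood of the observed counts."""
    return successes * math.log(p) + failures * math.log(1.0 - p)

# Candidate parameter values 0.001, 0.002, ..., 0.999 (endpoints excluded to avoid log(0)).
grid = [k / 1000 for k in range(1, 1000)]
p_hat = max(grid, key=lambda p: log_likelihood(p, successes=7, failures=3))
print(f"extremum estimate of p: {p_hat}")
```

For this model the maximizer is also available in closed form as the sample proportion, 7/10, which the grid search reproduces.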

In geometry, an intersection is a point, line, or curve common to two or more objects. The simplest case in Euclidean geometry is the line–line intersection between two distinct lines, which either is one point or does not exist. Other types of geometric intersection include line–plane intersection, line–sphere intersection, and intersection curves.
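The line–line case in the plane can be sketched with the standard determinant formula. Each line is assumed to be given by two distinct points on it; the function name is hypothetical, and the exact comparison with zero is a simplification that a numerical implementation would replace with a tolerance.

```python
def line_intersection(p1, p2, p3, p4):
    """Intersection of the line through p1, p2 with the line through p3, p4.

    Returns the common point, or None when the lines are parallel
    (coincident lines are also reported as None in this sketch).
    """
    (x1, y1), (x2, y2), (x3, y3), (x4, y4) = p1, p2, p3, p4
    denom = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if denom == 0:
        return None
    a = x1 * y2 - y1 * x2
    b = x3 * y4 - y3 * x4
    px = (a * (x3 - x4) - (x1 - x2) * b) / denom
    py = (a * (y3 - y4) - (y1 - y2) * b) / denom
    return px, py

print(line_intersection((0, 0), (1, 1), (0, 2), (2, 0)))   # (1.0, 1.0)
print(line_intersection((0, 0), (1, 1), (0, 1), (1, 2)))   # None
```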

In geometry, an intersection curve is a curve that is common to two geometric objects. In the simplest case, the intersection of two non-parallel planes in Euclidean 3-space is a line. In general, an intersection curve consists of the common points of two transversally intersecting surfaces, meaning that at any common point the surface normals are not parallel. This restriction excludes cases where the surfaces are touching or have surface parts in common.
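The simplest case, two non-parallel planes, can be worked out directly. In the sketch below each plane is assumed to be given as n · x = d with normal vector n; the direction of the intersection line is the cross product of the two normals, and one point on the line follows from a standard closed-form expression. The function names are illustrative.

```python
def cross(u, v):
    """Cross product of two 3-vectors."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def dot(u, v):
    """Dot product of two 3-vectors."""
    return sum(a * b for a, b in zip(u, v))

def plane_intersection(n1, d1, n2, d2):
    """Line common to the planes n1 . x = d1 and n2 . x = d2.

    Returns (point, direction), or None when the normals are parallel,
    in which case the planes do not intersect transversally.
    """
    direction = cross(n1, n2)
    norm_sq = dot(direction, direction)
    if norm_sq == 0:
        return None
    a = cross(n2, direction)
    b = cross(direction, n1)
    point = tuple((d1 * ai + d2 * bi) / norm_sq for ai, bi in zip(a, b))
    return point, direction

# The plane z = 0 and the plane x = 1 meet in the line through (1, 0, 0) along the y-axis.
print(plane_intersection((0, 0, 1), 0, (1, 0, 0), 1))
```

The parallel-normals check corresponds to the transversality condition in the text: when the normals are parallel, the planes either coincide or do not meet, and no single intersection line exists.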