In computational complexity theory, a decision problem is PSPACE-complete if it can be solved using an amount of memory that is polynomial in the input length and if every other problem that can be solved in polynomial space can be transformed to it in polynomial time. The problems that are PSPACE-complete can be thought of as the hardest problems in PSPACE, the class of decision problems solvable in polynomial space, because a solution to any one such problem could easily be used to solve any other problem in PSPACE.

In Boolean logic, a formula is in conjunctive normal form (CNF) or clausal normal form if it is a conjunction of one or more clauses, where a clause is a disjunction of literals; otherwise put, it is a product of sums or an AND of ORs. As a canonical normal form, it is useful in automated theorem proving and circuit theory.

Combinatorial optimization is a subfield of mathematical optimization that consists of finding an optimal object from a finite set of objects, where the set of feasible solutions is discrete or can be reduced to a discrete set. Typical combinatorial optimization problems are the travelling salesman problem ("TSP"), the minimum spanning tree problem ("MST"), and the knapsack problem. In many such problems, such as the ones previously mentioned, exhaustive search is not tractable, and so specialized algorithms that quickly rule out large parts of the search space or approximation algorithms must be resorted to instead.

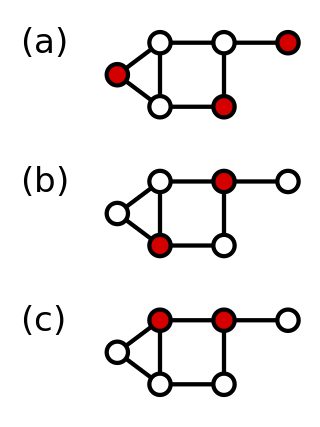

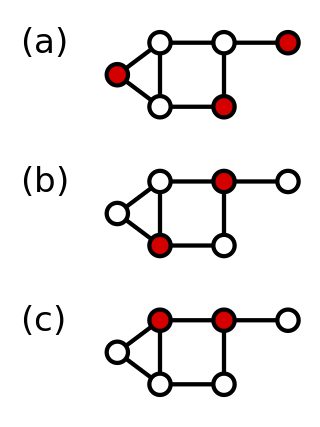

In graph theory, a vertex cover of a graph is a set of vertices that includes at least one endpoint of every edge of the graph.

In computer science and operations research, approximation algorithms are efficient algorithms that find approximate solutions to optimization problems with provable guarantees on the distance of the returned solution to the optimal one. Approximation algorithms naturally arise in the field of theoretical computer science as a consequence of the widely believed P ≠ NP conjecture. Under this conjecture, a wide class of optimization problems cannot be solved exactly in polynomial time. The field of approximation algorithms, therefore, tries to understand how closely it is possible to approximate optimal solutions to such problems in polynomial time. In an overwhelming majority of the cases, the guarantee of such algorithms is a multiplicative one expressed as an approximation ratio or approximation factor i.e., the optimal solution is always guaranteed to be within a (predetermined) multiplicative factor of the returned solution. However, there are also many approximation algorithms that provide an additive guarantee on the quality of the returned solution. A notable example of an approximation algorithm that provides both is the classic approximation algorithm of Lenstra, Shmoys and Tardos for scheduling on unrelated parallel machines.

In computer science, parameterized complexity is a branch of computational complexity theory that focuses on classifying computational problems according to their inherent difficulty with respect to multiple parameters of the input or output. The complexity of a problem is then measured as a function of those parameters. This allows the classification of NP-hard problems on a finer scale than in the classical setting, where the complexity of a problem is only measured as a function of the number of bits in the input. This appears to have been first demonstrated in Gurevich, Stockmeyer & Vishkin (1984). The first systematic work on parameterized complexity was done by Downey & Fellows (1999).

In graph theory, a dominating set for a graph G is a subset D of its vertices, such that any vertex of G is in D, or has a neighbor in D. The domination numberγ(G) is the number of vertices in a smallest dominating set for G.

Set packing is a classical NP-complete problem in computational complexity theory and combinatorics, and was one of Karp's 21 NP-complete problems. Suppose one has a finite set S and a list of subsets of S. Then, the set packing problem asks if some k subsets in the list are pairwise disjoint.

In computational complexity theory, the class APX is the set of NP optimization problems that allow polynomial-time approximation algorithms with approximation ratio bounded by a constant. In simple terms, problems in this class have efficient algorithms that can find an answer within some fixed multiplicative factor of the optimal answer.

In computational complexity theory, the class IP is the class of problems solvable by an interactive proof system. It is equal to the class PSPACE. The result was established in a series of papers: the first by Lund, Karloff, Fortnow, and Nisan showed that co-NP had multiple prover interactive proofs; and the second, by Shamir, employed their technique to establish that IP=PSPACE. The result is a famous example where the proof does not relativize.

MAX-3SAT is a problem in the computational complexity subfield of computer science. It generalises the Boolean satisfiability problem (SAT) which is a decision problem considered in complexity theory. It is defined as:

In computational complexity theory, a branch of computer science, Schaefer's dichotomy theorem, proved by Thomas Jerome Schaefer, states necessary and sufficient conditions under which a finite set S of relations over the Boolean domain yields polynomial-time or NP-complete problems when the relations of S are used to constrain some of the propositional variables. It is called a dichotomy theorem because the complexity of the problem defined by S is either in P or is NP-complete, as opposed to one of the classes of intermediate complexity that is known to exist by Ladner's theorem.

In computational complexity theory, Polynomial Local Search (PLS) is a complexity class that models the difficulty of finding a locally optimal solution to an optimization problem. The main characteristics of problems that lie in PLS are that the cost of a solution can be calculated in polynomial time and the neighborhood of a solution can be searched in polynomial time. Therefore it is possible to verify whether or not a solution is a local optimum in polynomial time. Furthermore, depending on the problem and the algorithm that is used for solving the problem, it might be faster to find a local optimum instead of a global optimum.

Interval scheduling is a class of problems in computer science, particularly in the area of algorithm design. The problems consider a set of tasks. Each task is represented by an interval describing the time in which it needs to be processed by some machine. For instance, task A might run from 2:00 to 5:00, task B might run from 4:00 to 10:00 and task C might run from 9:00 to 11:00. A subset of intervals is compatible if no two intervals overlap on the machine/resource. For example, the subset {A,C} is compatible, as is the subset {B}; but neither {A,B} nor {B,C} are compatible subsets, because the corresponding intervals within each subset overlap.

In a graph, a maximum cut is a cut whose size is at least the size of any other cut. That is, it is a partition of the graph's vertices into two complementary sets S and T, such that the number of edges between S and T is as large as possible. Finding such a cut is known as the max-cut problem.

In the mathematical discipline of graph theory, a 3-dimensional matching is a generalization of bipartite matching to 3-partite hypergraphs, which consist of hyperedges each of which contains 3 vertices.

In the study of graph algorithms, Courcelle's theorem is the statement that every graph property definable in the monadic second-order logic of graphs can be decided in linear time on graphs of bounded treewidth. The result was first proved by Bruno Courcelle in 1990 and independently rediscovered by Borie, Parker & Tovey (1992). It is considered the archetype of algorithmic meta-theorems.

In computational complexity theory and combinatorics, the token reconfiguration problem is a reconfiguration problem on a graph with both an initial and desired state for tokens.

In network theory, the Wiener connector is a means of maximizing efficiency in connecting specified "query vertices" in a network. Given a connected, undirected graph and a set of query vertices in a graph, the minimum Wiener connector is an induced subgraph that connects the query vertices and minimizes the sum of shortest path distances among all pairs of vertices in the subgraph. In combinatorial optimization, the minimum Wiener connector problem is the problem of finding the minimum Wiener connector. It can be thought of as a version of the classic Steiner tree problem, where instead of minimizing the size of the tree, the objective is to minimize the distances in the subgraph.

In the mathematical fields of graph theory and finite model theory, the logic of graphs deals with formal specifications of graph properties using sentences of mathematical logic. There are several variations in the types of logical operation that can be used in these sentences. The first-order logic of graphs concerns sentences in which the variables and predicates concern individual vertices and edges of a graph, while monadic second-order graph logic allows quantification over sets of vertices or edges. Logics based on least fixed point operators allow more general predicates over tuples of vertices, but these predicates can only be constructed through fixed-point operators, restricting their power.