An audio file format is a file format for storing digital audio data on a computer system. The bit layout of the audio data is called the audio coding format and can be uncompressed, or compressed to reduce the file size, often using lossy compression. The data can be a raw bitstream in an audio coding format, but it is usually embedded in a container format or an audio data format with defined storage layer.

The Au file format is a simple audio file format introduced by Sun Microsystems. The format was common on NeXT systems and on early Web pages. Originally it was headerless, being simply 8-bit μ-law-encoded data at an 8000 Hz sample rate. Hardware from other vendors often used sample rates as high as 8192 Hz, often integer multiples of video clock signal frequencies. Newer files have a header that consists of six unsigned 32-bit words, an optional information chunk which is always of non-zero size, and then the data.

A codec is a device or computer program that encodes or decodes a data stream or signal. Codec is a portmanteau of coder/decoder.

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder.

In information technology, lossy compression or irreversible compression is the class of data compression methods that uses inexact approximations and partial data discarding to represent the content. These techniques are used to reduce data size for storing, handling, and transmitting content. The different versions of the photo of the cat on this page show how higher degrees of approximation create coarser images as more details are removed. This is opposed to lossless data compression which does not degrade the data. The amount of data reduction possible using lossy compression is much higher than using lossless techniques.

Adaptive Transform Acoustic Coding (ATRAC) is a family of proprietary audio compression algorithms developed by Sony. MiniDisc was the first commercial product to incorporate ATRAC, in 1992. ATRAC allowed a relatively small disc like MiniDisc to have the same running time as CD while storing audio information with minimal perceptible loss in quality. Improvements to the codec in the form of ATRAC3, ATRAC3plus, and ATRAC Advanced Lossless followed in 1999, 2002, and 2006 respectively.

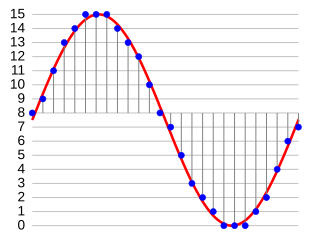

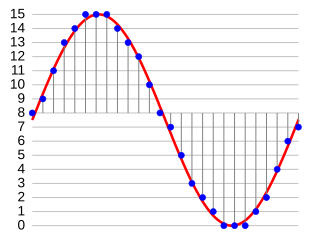

Digital audio is a representation of sound recorded in, or converted into, digital form. In digital audio, the sound wave of the audio signal is typically encoded as numerical samples in a continuous sequence. For example, in CD audio, samples are taken 44,100 times per second, each with 16-bit sample depth. Digital audio is also the name for the entire technology of sound recording and reproduction using audio signals that have been encoded in digital form. Following significant advances in digital audio technology during the 1970s and 1980s, it gradually replaced analog audio technology in many areas of audio engineering, record production and telecommunications in the 1990s and 2000s.

G.711 is a narrowband audio codec originally designed for use in telephony that provides toll-quality audio at 64 kbit/s. It is an ITU-T standard (Recommendation) for audio encoding, titled Pulse code modulation (PCM) of voice frequencies released for use in 1972.

Sound can be recorded and stored and played using either digital or analog techniques. Both techniques introduce errors and distortions in the sound, and these methods can be systematically compared. Musicians and listeners have argued over the superiority of digital versus analog sound recordings. Arguments for analog systems include the absence of fundamental error mechanisms which are present in digital audio systems, including aliasing and associated anti-aliasing filter implementation, jitter and quantization noise. Advocates of digital point to the high levels of performance possible with digital audio, including excellent linearity in the audible band and low levels of noise and distortion.

In telecommunications and computing, bit rate is the number of bits that are conveyed or processed per unit of time.

Transcoding is the direct digital-to-digital conversion of one encoding to another, such as for video data files, audio files, or character encoding. This is usually done in cases where a target device does not support the format or has limited storage capacity that mandates a reduced file size, or to convert incompatible or obsolete data to a better-supported or modern format.

WavPack is a free and open-source lossless audio compression format and application implementing the format. It is unique in the way that it supports hybrid audio compression alongside normal compression which is similar to how FLAC works. It also supports compressing a wide variety of lossless formats, including various variants of PCM and also DSD as used in SACDs, together with its support for surround audio.

Generation loss is the loss of quality between subsequent copies or transcodes of data. Anything that reduces the quality of the representation when copying, and would cause further reduction in quality on making a copy of the copy, can be considered a form of generation loss. File size increases are a common result of generation loss, as the introduction of artifacts may actually increase the entropy of the data through each generation.

Dolby Digital Plus, also known as Enhanced AC-3, is a digital audio compression scheme developed by Dolby Labs for the transport and storage of multi-channel digital audio. It is a successor to Dolby Digital (AC-3), and has a number of improvements over that codec, including support for a wider range of data rates, an increased channel count, and multi-program support, as well as additional tools (algorithms) for representing compressed data and counteracting artifacts. Whereas Dolby Digital (AC-3) supports up to five full-bandwidth audio channels at a maximum bitrate of 640 kbit/s, E-AC-3 supports up to 15 full-bandwidth audio channels at a maximum bitrate of 6.144 Mbit/s.

DTS-HD Master Audio is a multi-channel, lossless audio codec developed by DTS as an extension of the lossy DTS Coherent Acoustics codec. Rather than being an entirely new coding mechanism, DTS-HD MA encodes an audio master in lossy DTS first, then stores a concurrent stream of supplementary data representing whatever the DTS encoder discarded. This gives DTS-HD MA a lossy "core" able to be played back by devices that cannot decode the more complex lossless audio. DTS-HD MA's primary application is audio storage and playback for Blu-ray Disc media; it competes in this respect with Dolby TrueHD, another lossless surround format.

In digital audio using pulse-code modulation (PCM), bit depth is the number of bits of information in each sample, and it directly corresponds to the resolution of each sample. Examples of bit depth include Compact Disc Digital Audio, which uses 16 bits per sample, and DVD-Audio and Blu-ray Disc, which can support up to 24 bits per sample.

Adaptive differential pulse-code modulation (ADPCM) is a variant of differential pulse-code modulation (DPCM) that varies the size of the quantization step, to allow further reduction of the required data bandwidth for a given signal-to-noise ratio.

aptX is a family of proprietary audio codec compression algorithms owned by Qualcomm, with a heavy emphasis on wireless audio applications.

Pulse-code modulation (PCM) is a method used to digitally represent analog signals. It is the standard form of digital audio in computers, compact discs, digital telephony and other digital audio applications. In a PCM stream, the amplitude of the analog signal is sampled at uniform intervals, and each sample is quantized to the nearest value within a range of digital steps.