Alternative assessment is also known under various other terms, including:

A standardized test is a test that is administered and scored in a consistent, or "standard", manner. Standardized tests are designed so that the questions and the interpretation of results are consistent, and so that administration and scoring follow a predetermined, standard procedure.

Educational assessment or educational evaluation is the systematic process of documenting and using empirical data on knowledge, skills, attitudes, aptitudes, and beliefs to refine programs and improve student learning. Assessment data can be obtained by directly examining student work to assess the achievement of learning outcomes, or from other data from which one can make inferences about learning. The term "assessment" is often used interchangeably with "test", but assessment is not limited to tests. Assessment can focus on the individual learner, the learning community, a course, an academic program, the institution, or the educational system as a whole. The word "assessment" came into use in an educational context after the Second World War.

The National Science Education Standards (NSES) are guidelines for science education in primary and secondary schools in the United States, established by the National Research Council in 1996. They provide a set of goals for teachers to set for their students and for administrators to use in providing professional development. The NSES influence various states' own science learning standards and statewide standardized testing.

A concept inventory is a criterion-referenced test designed to help determine whether a student has an accurate working knowledge of a specific set of concepts. Historically, concept inventories have been in the form of multiple-choice tests in order to aid interpretability and facilitate administration in large classes. Unlike a typical, teacher-authored multiple-choice test, questions and response choices on concept inventories are the subject of extensive research. The aims of the research include ascertaining (a) the range of what individuals think a particular question is asking and (b) the most common responses to the questions. Concept inventories are evaluated to ensure test reliability and validity. In its final form, each question includes one correct answer and several distractors.
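The research aim of finding the most common responses, and the reliability check mentioned above, can be illustrated with a small sketch. It uses hypothetical answer data, scores each item as correct or incorrect against a key, tallies which distractors were chosen for one item, and estimates reliability with the Kuder-Richardson Formula 20 (KR-20). KR-20 is a standard internal-consistency statistic for right/wrong items, but the text above does not say which reliability measure any particular concept inventory uses, so treat that choice as an assumption.

```python
from collections import Counter
from statistics import pvariance


def score_responses(responses, key):
    """Score each student's answers as 1 (matches the key) or 0 (a distractor)."""
    return [[1 if ans == correct else 0 for ans, correct in zip(row, key)]
            for row in responses]


def distractor_counts(responses, key, item):
    """Tally how often each incorrect option was chosen for one item."""
    return Counter(row[item] for row in responses if row[item] != key[item])


def kr20(scored):
    """Kuder-Richardson Formula 20: k/(k-1) * (1 - sum(p*q) / var(total))."""
    n_students = len(scored)
    n_items = len(scored[0])
    pq_sum = 0.0
    for j in range(n_items):
        p = sum(row[j] for row in scored) / n_students  # proportion correct
        pq_sum += p * (1 - p)
    # Population variance of total scores, matching the p*q item variances.
    total_var = pvariance([sum(row) for row in scored])
    if total_var == 0:
        raise ValueError("KR-20 is undefined when every student has the same total")
    return (n_items / (n_items - 1)) * (1 - pq_sum / total_var)


# Hypothetical four-item inventory answered by five students.
key = ["B", "A", "D", "C"]
responses = [
    ["B", "A", "D", "C"],
    ["B", "C", "D", "A"],
    ["A", "C", "D", "C"],
    ["B", "A", "B", "C"],
    ["C", "C", "B", "A"],
]

scored = score_responses(responses, key)
print(distractor_counts(responses, key, item=1))  # Counter({'C': 3})
print(round(kr20(scored), 3))                      # 0.606
```

Option C on the second item attracts every student who misses it, which is the kind of pattern that concept-inventory research uses to identify a common misconception. With only four items and five students, the KR-20 value is purely illustrative.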

The Washington Assessment of Student Learning (WASL) was a standardized educational assessment system given as the primary assessment in the state of Washington from spring 1997 to summer 2009. The WASL was also used as a high school graduation examination from the spring of 2006 until 2009. It was replaced by the High School Proficiency Exam (HSPE), the Measurements of Student Progress (MSP) for grades 3–8, and later the Smarter Balanced Assessment (SBAC). The WASL consisted of examinations in four subjects with four different types of questions. It was given to students in grades 3 through 8 and in grade 10. Third and sixth graders were tested in reading and math; fourth and seventh graders in math, reading, and writing; and fifth and eighth graders in reading, math, and science. The high school assessment, given during a student's tenth-grade year, covered all four subjects.

In US education, a rubric is a "scoring guide used to evaluate the quality of students' constructed responses", according to James Popham. In simpler terms, it is a set of criteria for grading assignments. Typically presented in table format, rubrics contain evaluative criteria, quality definitions for various levels of achievement, and a scoring strategy. They serve a dual role: teachers use them to mark assignments, and students use them to plan their work.
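A rubric's three parts (evaluative criteria, quality definitions for each level, and a scoring strategy) map naturally onto a small data structure. The sketch below is hypothetical: the criteria, level descriptions, and weights are invented for illustration, and the scoring strategy is assumed to be a weighted sum of level scores. It also returns the matching quality definitions, reflecting the rubric's second role as guidance students can use to plan their work.

```python
# Hypothetical analytic rubric: criterion -> {level: quality definition}.
RUBRIC = {
    "thesis": {
        4: "Clear, arguable thesis that frames the whole response",
        3: "Clear thesis",
        2: "Thesis is present but vague",
        1: "No identifiable thesis",
    },
    "evidence": {
        4: "Relevant, well-explained evidence throughout",
        3: "Relevant evidence, some of it unexplained",
        2: "Little or loosely related evidence",
        1: "No supporting evidence",
    },
    "organization": {
        4: "Logical order with effective transitions",
        3: "Mostly logical order",
        2: "Order is hard to follow in places",
        1: "No discernible structure",
    },
}

# Assumed scoring strategy: weighted sum, with evidence counting double.
WEIGHTS = {"thesis": 1, "evidence": 2, "organization": 1}


def score(ratings):
    """Return (points earned, points possible, feedback) for one piece of work."""
    if set(ratings) != set(RUBRIC):
        raise ValueError("rate every criterion exactly once")
    for criterion, level in ratings.items():
        if level not in RUBRIC[criterion]:
            raise ValueError(f"{level} is not a defined level for {criterion}")
    earned = sum(WEIGHTS[c] * level for c, level in ratings.items())
    possible = sum(WEIGHTS[c] * max(levels) for c, levels in RUBRIC.items())
    # The quality definitions double as feedback the student can act on.
    feedback = {c: RUBRIC[c][level] for c, level in ratings.items()}
    return earned, possible, feedback


earned, possible, feedback = score({"thesis": 3, "evidence": 4, "organization": 2})
print(earned, possible)          # 13 16
print(feedback["organization"])  # Order is hard to follow in places
```

Keeping the level descriptions next to the scores means the same table serves both purposes: the totals support marking, and the descriptions tell a student what the next level up looks like.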

Mastery learning is an instructional strategy and educational philosophy first formally proposed by Benjamin Bloom in 1968. It maintains that students must achieve a level of mastery in prerequisite knowledge before moving on to learn subsequent information. If a student does not achieve mastery on the test, they are given additional support in learning and reviewing the information and are then tested again. This cycle continues until the learner achieves mastery, after which they may move on to the next stage. In a self-paced online learning environment, students study the material and take assessments. If they make mistakes, the system provides explanations and directs them to revisit the relevant sections. They then answer different questions on the same material, and this cycle repeats until they reach the established mastery threshold. Only then can they move on to subsequent learning modules, assessments, or certifications.
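The assess, remediate, and reassess cycle described above can be sketched as a loop. Everything here is hypothetical: the 80% threshold, the parallel test forms, and the simulated learner (who answers correctly only for skills they "know" and learns a skill when remediation sends them back to review it) are stand-ins for a real system's content and learner model.

```python
def run_mastery_cycle(forms, answer, remediate, threshold=0.8):
    """Give parallel forms in turn until the learner reaches the threshold.

    forms: list of tests covering the same material with different questions.
    answer: function(item) -> the learner's response.
    remediate: function(missed_items) -> None; the explanation and review step.
    Returns (mastered, history of scores).
    """
    history = []
    for form in forms:
        missed = [item for item in form if answer(item) != item["key"]]
        score = 1 - len(missed) / len(form)
        history.append(score)
        if score >= threshold:
            return True, history  # learner may move on to the next module
        remediate(missed)         # review before the next, different form
    return False, history         # ran out of forms without reaching mastery


# Hypothetical parallel forms: different prompts on the same three skills.
forms = [
    [{"skill": "fractions", "prompt": "1/2 + 1/4", "key": "3/4"},
     {"skill": "decimals", "prompt": "0.5 + 0.25", "key": "0.75"},
     {"skill": "percents", "prompt": "50% + 25%", "key": "75%"}],
    [{"skill": "fractions", "prompt": "1/3 + 1/3", "key": "2/3"},
     {"skill": "decimals", "prompt": "0.2 + 0.3", "key": "0.5"},
     {"skill": "percents", "prompt": "10% + 30%", "key": "40%"}],
]

known = {"decimals"}  # simulated learner starts out knowing one skill


def answer(item):
    """Simulated learner: correct only on skills already learned."""
    return item["key"] if item["skill"] in known else "?"


def remediate(missed):
    """Simulated review: point to the relevant section, then the skill is learned."""
    for item in missed:
        print(f"Review {item['skill']}: see the relevant section")
        known.add(item["skill"])


mastered, history = run_mastery_cycle(forms, answer, remediate)
print(mastered, [round(s, 2) for s in history])  # True [0.33, 1.0]
```

Using a fresh form on each pass, rather than repeating the same questions, mirrors the practice of answering different questions on the same material so that a pass reflects mastery rather than memorized answers.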

CLAS (California Learning Assessment System) was a standards-based test, grounded in Outcomes-Based Education principles, given in California in the early 1990s. It was based on concepts from new standards such as whole language and reform mathematics. Instead of multiple-choice tests with one correct answer, it used open written responses that were graded according to rubrics. Test takers had to write about literature passages they were asked to read and relate each passage to their own experiences, or explain how they found solutions to the math problems they were asked to solve. Such tests were thought to be fairer to students of all abilities.

Holistic grading or holistic scoring, in standards-based education, is an approach to scoring essays that uses a simple grading structure and bases a grade on a paper's overall quality. This type of grading, also described as nonreductionist grading, contrasts with analytic grading, which takes more factors into account when assigning a grade. Holistic grading can also be used to assess classroom-based work. Rather than counting errors, raters judge a paper as a whole, often comparing it to an anchor paper to evaluate whether it meets a writing standard. Holistic scoring differs from other methods of scoring written discourse in two basic ways. First, it treats the composition as a whole rather than assigning separate values to different parts of the writing. Second, it uses two or more raters, with the final score derived from their independent scores. Holistic scoring has gone by other names: "non-analytic," "overall quality," "general merit," "general impression," and "rapid impression." Although the value and validity of the system are a matter of debate, holistic scoring of writing is still widely used.
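The rule that the final score is derived from two or more independent ratings can be made concrete with a short sketch. The details are assumptions, not part of the description above: a 1 to 6 score scale, a final score equal to the sum of two independent ratings, and, when those ratings differ by more than one point, a third reading whose score is summed with the closer of the original two. Actual scoring programs set their own scales and resolution rules.

```python
def holistic_score(r1, r2, third=None, scale=range(1, 7), max_gap=1):
    """Combine two independent holistic ratings into a final score.

    Assumed (hypothetical) rules: ratings on a 1-6 scale are summed when they
    are within max_gap of each other; otherwise a third rating is required and
    is summed with whichever original rating is closer to it.
    """
    ratings = [r1, r2] + ([] if third is None else [third])
    for r in ratings:
        if r not in scale:
            raise ValueError(f"rating {r} is outside the scale")
    if abs(r1 - r2) <= max_gap:
        return r1 + r2  # raters broadly agree
    if third is None:
        raise ValueError("ratings disagree by more than max_gap; a third reading is needed")
    closer = r1 if abs(r1 - third) <= abs(r2 - third) else r2
    return third + closer


print(holistic_score(4, 5))           # 9: adjacent ratings are summed
print(holistic_score(2, 5, third=4))  # 9: third rating 4 pairs with the closer 5
```

Each rater still assigns a single overall score; no rater breaks the paper into parts, which keeps the procedure holistic even though the final number combines several judgments.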

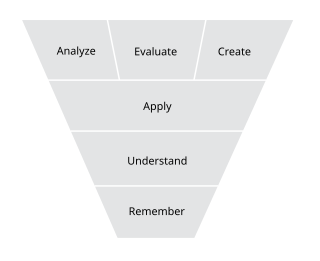

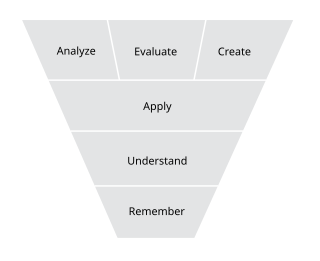

Higher-order thinking, also known as higher-order thinking skills (HOTS), is a concept applied in education reform and based on learning taxonomies. The idea is that some types of learning require more cognitive processing than others but also have more generalized benefits. In Bloom's taxonomy, for example, skills involving analysis, evaluation, and synthesis are thought to be of a higher order than the learning of facts and concepts, which relies on lower-order thinking skills; higher-order skills require different learning and teaching methods. Higher-order thinking involves learning complex judgmental skills such as critical thinking and problem solving.

Formative assessment, formative evaluation, formative feedback, or assessment for learning, including diagnostic testing, is a range of formal and informal assessment procedures conducted by teachers during the learning process in order to modify teaching and learning activities to improve student attainment. The goal of a formative assessment is to monitor student learning to provide ongoing feedback that can help students identify their strengths and weaknesses and target areas that need work. It also helps faculty recognize where students are struggling and address problems immediately. It typically involves qualitative feedback for both student and teacher that focuses on the details of content and performance. It is commonly contrasted with summative assessment, which seeks to monitor educational outcomes, often for purposes of external accountability.

The Connecticut Mastery Test, or CMT, is a test administered to students in grades 3 through 8. The CMT tests students in mathematics, reading comprehension, writing, and science. The other major standardized test administered to schoolchildren in Connecticut is the Connecticut Academic Performance Test, or CAPT, which is given in grade 10. Until the 2005–2006 school year, the CMT was administered in the fall; now it is given in the spring.

Education reform in the United States since the 1980s has been largely driven by the setting of academic standards for what students should know and be able to do. These standards can then be used to guide all other components of the education system. The standards-based education (SBE) reform movement calls for clear, measurable standards for all students. Rather than ranking students against one another, as norm-referenced systems do, a standards-based system measures each student against a concrete standard. Curriculum, assessments, and professional development are aligned to the standards.
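The difference between norm-referenced ranking and measuring each student against a concrete standard can be shown on a single set of scores. In this hypothetical sketch the student names, scores, and the cut score of 70 are invented, and percentile rank uses the common convention of counting students below plus half of any ties.

```python
def percentile_rank(score, all_scores):
    """Norm-referenced view: percent of the group scoring below, ties counted as half."""
    below = sum(1 for s in all_scores if s < score)
    ties = sum(1 for s in all_scores if s == score)
    return 100 * (below + 0.5 * ties) / len(all_scores)


def meets_standard(score, cut_score):
    """Standards-based view: compare the student only with the fixed standard."""
    return score >= cut_score


# Hypothetical class results and cut score.
scores = {"Ana": 92, "Ben": 85, "Cai": 78, "Dee": 74}
CUT_SCORE = 70

for name, s in scores.items():
    rank = percentile_rank(s, list(scores.values()))
    status = "meets" if meets_standard(s, CUT_SCORE) else "does not meet"
    print(f"{name}: percentile rank {rank:.1f}, {status} the standard")
```

Every student here meets the standard, yet the norm-referenced view still places Dee at the 12.5th percentile. A percentile rank changes whenever classmates' scores change, whereas the standards-based judgment depends only on the student's own score and the fixed cut score.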

An anchor paper is a sample response to an assignment or test question that requires an essay, used primarily in education. Unlike more traditional educational assessments such as multiple-choice tests, essays cannot be graded with an answer key, because no strictly correct or incorrect answer exists. The anchor paper gives the person reviewing or grading the assignment an example of a well-written response to the essay prompt. Examiners sometimes prepare a range of anchor papers to illustrate responses at different levels of merit.

Corrective feedback is a common practice in the field of learning and achievement. It typically involves a learner receiving formal or informal feedback on their understanding of, or performance on, various tasks from an agent such as a teacher, employer, or peer. To be effective, corrective feedback needs to be nonevaluative, supportive, timely, and specific.

Differentiated instruction and assessment, also known as differentiated learning or, in education, simply differentiation, is a framework or philosophy for effective teaching that involves providing all students within a diverse classroom community of learners with a range of avenues for understanding new information in terms of acquiring content; processing, constructing, or making sense of ideas; and developing teaching materials and assessment measures, so that all students within a classroom can learn effectively regardless of differences in their ability. Differentiated instruction means using different tools, content, and processes in order to successfully reach all individuals. According to Carol Ann Tomlinson, differentiated instruction is the process of "ensuring that what a student learns, how he or she learns it, and how the student demonstrates what he or she has learned is a match for that student's readiness level, interests, and preferred mode of learning." According to Boelens et al. (2018), differentiation can occur at two levels: the administration level and the classroom level. The administration level takes students' socioeconomic status and gender into consideration. At the classroom level, differentiation revolves around content, processing, product, and effects. At the content level, teachers adapt what they are teaching to meet the needs of students, which can mean making content more challenging or more simplified depending on students' levels. The process of learning can be differentiated as well: depending on the needs of the students, teachers may choose to teach students individually or to assign problems to small groups, partners, or the whole group. By differentiating product, teachers decide how students will present what they have learned, for example through videos, graphic organizers, photo presentations, writing, or oral presentations. Differentiation by effects means that all of this takes place in a safe classroom environment where students feel respected and valued.

Teacher quality assessment commonly includes reviews of qualifications, tests of teacher knowledge, observations of practice, and measurements of student learning gains. Assessments of teacher quality are currently used for policymaking, employment and tenure decisions, teacher evaluations, merit pay awards, and as data to inform the professional growth of teachers.

Writing assessment refers to an area of study that contains theories and practices that guide the evaluation of a writer's performance or potential through a writing task. Writing assessment can be considered a combination of scholarship from composition studies and measurement theory within educational assessment. The term can also refer to the technologies and practices used to evaluate student writing and learning. An important consequence of writing assessment is that the type and manner of assessment may shape writing instruction, affecting the character and quality of that instruction.

The Framework for Authentic Intellectual Work (AIW) is an evaluative tool used by educators in all subjects at the elementary and secondary levels to assess the quality of classroom instruction, assignments, and student work. The framework was developed by Dr. Dana L. Carmichael, Dr. M. Bruce King, and Dr. Fred M. Newmann. Its purpose is to promote student production of genuine and rigorous work that resembles the complex work of adults. The framework identifies three main criteria for student learning and provides standards, accompanied by scaled rubrics, for classroom instruction, assignments, and student work. The standards and rubrics are meant to support teachers in promoting genuine and rigorous work, as well as to guide professional development and collaboration.