Basic calibration process

Purpose and scope

The calibration process begins with the design of the measuring instrument that needs to be calibrated. The design has to be able to "hold a calibration" through its calibration interval. In other words, the design has to be capable of measurements that are "within engineering tolerance" when used within the stated environmental conditions over some reasonable period of time. [6] Having a design with these characteristics increases the likelihood of the actual measuring instruments performing as expected. In short, the purpose of calibration is to maintain the quality of measurement and to ensure that a particular instrument works properly.

Frequency

The exact mechanism for assigning tolerance values varies by country and by industry type. The manufacturer of the measuring equipment generally assigns the measurement tolerance, suggests a calibration interval (CI), and specifies the environmental range of use and storage. The using organization generally assigns the actual calibration interval, which depends on the specific measuring equipment's likely usage level. The assignment of calibration intervals can be a formal process based on the results of previous calibrations. The standards themselves are not clear on recommended CI values: [7]

- ISO 17025 [3]

- "A calibration certificate (or calibration label) shall not contain any recommendation on the calibration interval except where this has been agreed with the customer. This requirement may be superseded by legal regulations."

- ANSI/NCSL Z540 [8]

- "...shall be calibrated or verified at periodic intervals established and maintained to assure acceptable reliability..."

- ISO-9001 [2]

- "Where necessary to ensure valid results, measuring equipment shall...be calibrated or verified at specified intervals, or prior to use..."

- MIL-STD-45662A [9]

- "... shall be calibrated at periodic intervals established and maintained to assure acceptable accuracy and reliability...Intervals shall be shortened or may be lengthened, by the contractor, when the results of previous calibrations indicate that such action is appropriate to maintain acceptable reliability."
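The idea in the MIL-STD-45662A wording, shortening or lengthening an interval based on previous calibration results, can be sketched as a simple rule. This is only an illustration: the function name `adjust_interval`, the adjustment factors, the "three passes in a row" condition, and the day limits are all hypothetical choices, not values taken from any of the standards quoted above.

```python
def adjust_interval(current_days, in_tolerance_history,
                    lengthen=1.10, shorten=0.75,
                    min_days=30, max_days=730):
    """Return a new calibration interval in days.

    in_tolerance_history: list of booleans, most recent last; True means
    the instrument was found in tolerance at that calibration.
    All factors and bounds are illustrative assumptions.
    """
    if not in_tolerance_history:
        return current_days  # no evidence yet: keep the assigned interval
    if not in_tolerance_history[-1]:
        new_days = current_days * shorten    # last result failed: shorten
    elif len(in_tolerance_history) >= 3 and all(in_tolerance_history[-3:]):
        new_days = current_days * lengthen   # three passes in a row: lengthen
    else:
        new_days = current_days              # otherwise leave unchanged
    # Clamp to the assumed organizational limits.
    return int(round(min(max(new_days, min_days), max_days)))
```

An organization using a rule like this would normally record the rule itself alongside the calibration history, so that the reason for each interval change can be reconstructed later.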

Standards required and accuracy

The next step is defining the calibration process. The selection of a standard or standards is the most visible part of the calibration process. Ideally, the standard has less than 1/4 of the measurement uncertainty of the device being calibrated. When this goal is met, the accumulated measurement uncertainty of all of the standards involved is considered to be insignificant when the final measurement is also made with the 4:1 ratio. [10] This ratio was probably first formalized in Handbook 52 that accompanied MIL-STD-45662A, an early US Department of Defense metrology program specification. It was 10:1 from its inception in the 1950s until the 1970s, when advancing technology made 10:1 impossible for most electronic measurements. [11]
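The ratio described above can be computed directly. The following is a minimal sketch, assuming the device tolerance and the standard's uncertainty are expressed in the same units; the function names `accuracy_ratio` and `meets_target` are hypothetical.

```python
def accuracy_ratio(device_tolerance, standard_uncertainty):
    """Ratio of the device's tolerance to the standard's uncertainty.

    Both arguments must be in the same units (for example, percent of reading).
    """
    if standard_uncertainty <= 0:
        raise ValueError("standard uncertainty must be positive")
    return device_tolerance / standard_uncertainty


def meets_target(device_tolerance, standard_uncertainty, target=4.0):
    """True if the ratio is at least the target (4:1 by default)."""
    return accuracy_ratio(device_tolerance, standard_uncertainty) >= target


# A 4% device checked against a 1% standard reaches 4:1; a 3% device does not.
print(meets_target(4.0, 1.0))  # True
print(meets_target(3.0, 1.0))  # False
```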

Maintaining a 4:1 accuracy ratio with modern equipment is difficult. The test equipment being calibrated can be just as accurate as the working standard. [10] If the accuracy ratio is less than 4:1, then the calibration tolerance can be reduced to compensate. When 1:1 is reached, only an exact match between the standard and the device being calibrated is a completely correct calibration. Another common method for dealing with this capability mismatch is to reduce the stated accuracy of the device being calibrated.

For example, a gauge with 3% manufacturer-stated accuracy can be changed to 4% so that a 1% accuracy standard can be used at 4:1. If the gauge is used in an application requiring 16% accuracy, having the gauge accuracy reduced to 4% will not affect the accuracy of the final measurements. This is called a limited calibration. But if the final measurement requires 10% accuracy, then the 3% gauge never can be better than 3.3:1. Then perhaps adjusting the calibration tolerance for the gauge would be a better solution. If the calibration is performed at 100 units, the 1% standard would actually be anywhere between 99 and 101 units. The acceptable values of calibrations where the test equipment is at the 4:1 ratio would be 96 to 104 units, inclusive. Changing the acceptable range to 97 to 103 units would remove the potential contribution of all of the standards and preserve a 3.3:1 ratio. Continuing, a further change to the acceptable range to 98 to 102 restores more than a 4:1 final ratio.
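The acceptance ranges quoted in this example can be reproduced with a few lines of arithmetic. This is a sketch under the example's own simplifications; the function name `acceptance_limits` and the `guard_pct` parameter (the amount by which the tolerance is tightened) are hypothetical.

```python
def acceptance_limits(nominal, tolerance_pct, guard_pct=0.0):
    """Return (low, high) acceptance limits around a nominal value.

    tolerance_pct: calibration tolerance as a percentage of nominal.
    guard_pct: percentage of nominal by which the tolerance is tightened,
               for example to allow for the uncertainty of the standard.
    """
    if not 0 <= guard_pct < tolerance_pct:
        raise ValueError("guard_pct must be non-negative and below tolerance_pct")
    half_width = nominal * (tolerance_pct - guard_pct) / 100.0
    return nominal - half_width, nominal + half_width


print(acceptance_limits(100, 4))     # (96.0, 104.0)
print(acceptance_limits(100, 4, 1))  # (97.0, 103.0)
print(acceptance_limits(100, 4, 2))  # (98.0, 102.0)
```

The three calls correspond to the 96 to 104, 97 to 103, and 98 to 102 ranges in the text.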

This is a simplified example. The mathematics of the example can be challenged. It is important that whatever thinking guided this process in an actual calibration be recorded and accessible. Informality contributes to tolerance stacks and other difficult-to-diagnose post-calibration problems.

Also in the example above, ideally the calibration value of 100 units would be the best point in the gauge's range to perform a single-point calibration. It may be the manufacturer's recommendation or it may be the way similar devices are already being calibrated. Multiple-point calibrations are also used. Depending on the device, a zero unit state, the absence of the phenomenon being measured, may also be a calibration point. Or zero may be resettable by the user; there are several variations possible. Again, the points to use during calibration should be recorded.

There may be specific connection techniques between the standard and the device being calibrated that may influence the calibration. For example, in electronic calibrations involving analog phenomena, the impedance of the cable connections can directly influence the result.

Manual and automatic calibrations

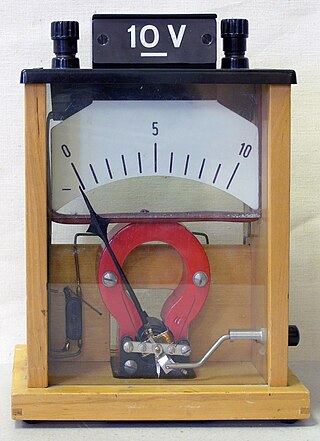

Calibration methods for modern devices can be manual or automatic.

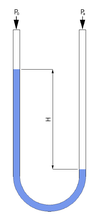

As an example, a manual process may be used for calibration of a pressure gauge. The procedure requires multiple steps: [12] connecting the gauge under test to a reference master gauge and an adjustable pressure source, applying fluid pressure to both the reference and test gauges at definite points over the span of the gauge, and comparing the readings of the two. The gauge under test may be adjusted so that its zero point and response to pressure comply as closely as possible with the intended accuracy. Each step of the process requires manual record keeping.
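The comparison step of such a procedure can be sketched as follows. This is an illustration only: the function name `compare_gauges`, the choice to express tolerance as a percentage of span, and the sample readings are assumptions, not part of the cited procedure.

```python
def compare_gauges(points, span, tolerance_pct):
    """Compare test-gauge readings against reference readings.

    points: list of (reference_reading, test_reading) pairs taken at
            definite points over the span of the gauge.
    span: full-scale span of the gauge under test, in the same units.
    tolerance_pct: allowed error as a percentage of span (an assumption;
                   some gauges specify tolerance as a percentage of reading).
    """
    limit = span * tolerance_pct / 100.0
    results = []
    for reference, test in points:
        error = test - reference
        results.append({
            "reference": reference,
            "test": test,
            "error": error,
            "in_tolerance": abs(error) <= limit,
        })
    return results


# Hypothetical readings at 0, 50 and 100 units on a 100-unit gauge, 1% of span.
readings = [(0.0, 0.5), (50.0, 50.5), (100.0, 103.0)]
for row in compare_gauges(readings, span=100.0, tolerance_pct=1.0):
    print(row)
```

Returning one record per test point mirrors the manual record keeping the procedure calls for.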

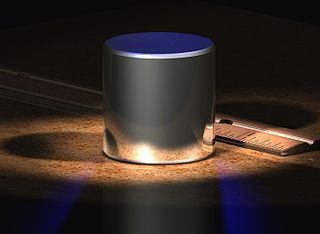

An automatic pressure calibrator [13] is a device that combines an electronic control unit, a pressure intensifier used to compress a gas such as nitrogen, a pressure transducer used to detect desired levels in a hydraulic accumulator, and accessories such as liquid traps and gauge fittings. An automatic system may also include data collection facilities to automate the gathering of data for record keeping.

Process description and documentation

All of the information above is collected in a calibration procedure, which is a specific test method. These procedures capture all of the steps needed to perform a successful calibration. The manufacturer may provide one or the organization may prepare one that also captures all of the organization's other requirements. There are clearinghouses for calibration procedures such as the Government-Industry Data Exchange Program (GIDEP) in the United States.

This exact process is repeated for each of the standards used until transfer standards, certified reference materials and/or natural physical constants, the measurement standards with the least uncertainty in the laboratory, are reached. This establishes the traceability of the calibration.
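One way to picture this chain is as a set of "calibrated by" links that are followed until a standard with no further parent is reached. The sketch below assumes a simple dictionary of such links; the function name `traceability_chain` and the example names are hypothetical.

```python
def traceability_chain(instrument, calibrated_by):
    """Follow 'calibrated by' links from an instrument to the top-level standard.

    calibrated_by: dict mapping each item to the standard used to calibrate it.
    An item that does not appear as a key is treated as the end of the chain.
    """
    chain = [instrument]
    seen = {instrument}
    while chain[-1] in calibrated_by:
        parent = calibrated_by[chain[-1]]
        if parent in seen:
            raise ValueError("cycle in traceability records")
        chain.append(parent)
        seen.add(parent)
    return chain


links = {
    "gauge": "working standard",
    "working standard": "transfer standard",
}
print(traceability_chain("gauge", links))
# ['gauge', 'working standard', 'transfer standard']
```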

See Metrology for other factors that are considered during calibration process development.

After all of this, individual instruments of the specific type discussed above can finally be calibrated. The process generally begins with a basic damage check. Some organizations such as nuclear power plants collect "as-found" calibration data before any routine maintenance is performed. After routine maintenance has been performed and any deficiencies detected during calibration have been addressed, an "as-left" calibration is performed.
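A record that keeps the "as-found" and "as-left" readings side by side might look like the sketch below. The class name `CalibrationRecord`, its fields, and the sample values are hypothetical; real calibration records usually carry many more fields.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CalibrationRecord:
    instrument_id: str
    nominal: float                    # value applied by the standard
    tolerance: float                  # allowed deviation, same units as nominal
    as_found: float                   # reading before any maintenance or adjustment
    as_left: Optional[float] = None   # reading after adjustment, if any was made

    def _within(self, reading: float) -> bool:
        return abs(reading - self.nominal) <= self.tolerance

    def found_in_tolerance(self) -> bool:
        return self._within(self.as_found)

    def left_in_tolerance(self) -> bool:
        # If nothing was adjusted, the as-found reading is also the as-left one.
        reading = self.as_found if self.as_left is None else self.as_left
        return self._within(reading)


record = CalibrationRecord("PG-001", nominal=100.0, tolerance=2.0,
                           as_found=103.0, as_left=100.5)
print(record.found_in_tolerance(), record.left_in_tolerance())  # False True
```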

More commonly, a calibration technician is entrusted with the entire process and signs the calibration certificate, which documents the completion of a successful calibration. The basic process outlined above is a difficult and expensive challenge. The cost for ordinary equipment support is generally about 10% of the original purchase price on a yearly basis, as a commonly accepted rule-of-thumb. Exotic devices such as scanning electron microscopes, gas chromatograph systems and laser interferometer devices can be even more costly to maintain.

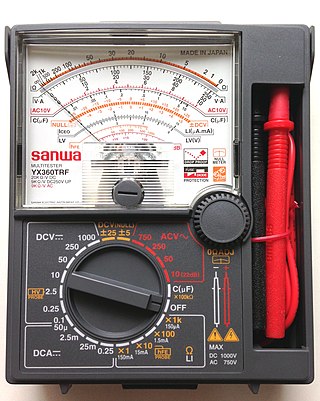

The 'single measurement' device used in the basic calibration process description above does exist. But, depending on the organization, the majority of the devices that need calibration can have several ranges and many functionalities in a single instrument. A good example is a common modern oscilloscope. There easily could be 200,000 combinations of settings to completely calibrate and limitations on how much of an all-inclusive calibration can be automated.

To prevent unauthorized access to an instrument, tamper-proof seals are usually applied after calibration. The picture of the oscilloscope rack shows these seals, which prove that the instrument has not been removed since it was last calibrated, as they would reveal possible unauthorized access to the adjusting elements of the instrument. There are also labels showing the date of the last calibration and when, as dictated by the calibration interval, the next one is needed. Some organizations also assign unique identification to each instrument to standardize the record keeping and keep track of accessories that are integral to a specific calibration condition.
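The dates on such a label follow directly from the last calibration date and the calibration interval. A minimal sketch, assuming the interval is expressed in days; the function names `next_due` and `is_overdue` are hypothetical.

```python
from datetime import date, timedelta


def next_due(last_calibrated: date, interval_days: int) -> date:
    """Date on which the next calibration is due."""
    return last_calibrated + timedelta(days=interval_days)


def is_overdue(last_calibrated: date, interval_days: int, today: date) -> bool:
    """True if the due date has already passed on the given day."""
    return today > next_due(last_calibrated, interval_days)


last = date(2024, 1, 15)
print(next_due(last, 365))                      # 2025-01-14 (2024 is a leap year)
print(is_overdue(last, 365, date(2025, 2, 1)))  # True
```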

When the instruments being calibrated are integrated with computers, the integrated computer programs and any calibration corrections are also kept under control.