Artificial intelligence (AI), in its broadest sense, is intelligence exhibited by machines, particularly computer systems. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and uses learning and intelligence to take actions that maximize their chances of achieving defined goals. Such machines may be called AIs.

Cognitive science is the interdisciplinary, scientific study of the mind and its processes. It examines the nature, the tasks, and the functions of cognition. Mental faculties of concern to cognitive scientists include language, perception, memory, attention, reasoning, and emotion; to understand these faculties, cognitive scientists borrow from fields such as linguistics, psychology, artificial intelligence, philosophy, neuroscience, and anthropology. The typical analysis of cognitive science spans many levels of organization, from learning and decision to logic and planning; from neural circuitry to modular brain organization. One of the fundamental concepts of cognitive science is that "thinking can best be understood in terms of representational structures in the mind and computational procedures that operate on those structures."

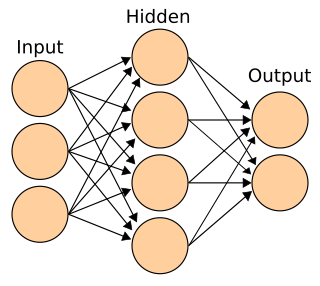

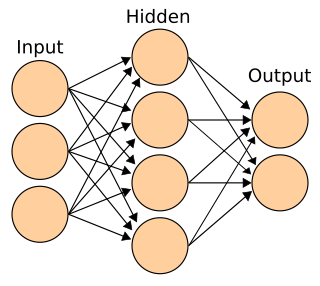

Connectionism is the name of an approach to the study of human mental processes and cognition that utilizes mathematical models known as connectionist networks or artificial neural networks. Connectionism has had many 'waves' since its beginnings.

In artificial intelligence, symbolic artificial intelligence is the term for the collection of all methods in artificial intelligence research that are based on high-level symbolic (human-readable) representations of problems, logic and search. Symbolic AI used tools such as logic programming, production rules, semantic nets and frames, and it developed applications such as knowledge-based systems, symbolic mathematics, automated theorem provers, ontologies, the semantic web, and automated planning and scheduling systems. The Symbolic AI paradigm led to seminal ideas in search, symbolic programming languages, agents, multi-agent systems, the semantic web, and the strengths and limitations of formal knowledge and reasoning systems.

Neuromorphic computing is an approach to computing that is inspired by the structure and function of the human brain. A neuromorphic computer/chip is any device that uses physical artificial neurons to do computations. In recent times, the term neuromorphic has been used to describe analog, digital, mixed-mode analog/digital VLSI, and software systems that implement models of neural systems. The implementation of neuromorphic computing on the hardware level can be realized by oxide-based memristors, spintronic memories, threshold switches, transistors, among others. Training software-based neuromorphic systems of spiking neural networks can be achieved using error backpropagation, e.g., using Python based frameworks such as snnTorch, or using canonical learning rules from the biological learning literature, e.g., using BindsNet.

In artificial intelligence (AI), commonsense reasoning is a human-like ability to make presumptions about the type and essence of ordinary situations humans encounter every day. These assumptions include judgments about the nature of physical objects, taxonomic properties, and peoples' intentions. A device that exhibits commonsense reasoning might be capable of drawing conclusions that are similar to humans' folk psychology and naive physics.

The expression computational intelligence (CI) usually refers to the ability of a computer to learn a specific task from data or experimental observation. Even though it is commonly considered a synonym of soft computing, there is still no commonly accepted definition of computational intelligence.

A cognitive architecture refers to both a theory about the structure of the human mind and to a computational instantiation of such a theory used in the fields of artificial intelligence (AI) and computational cognitive science. The formalized models can be used to further refine a comprehensive theory of cognition and as a useful artificial intelligence program. Successful cognitive architectures include ACT-R and SOAR. The research on cognitive architectures as software instantiation of cognitive theories was initiated by Allen Newell in 1990.

The Sally–Anne test is a psychological test, used in developmental psychology to measure a person's social cognitive ability to attribute false beliefs to others. The flagship implementation of the Sally–Anne test was by Simon Baron-Cohen, Alan M. Leslie, and Uta Frith (1985); in 1988, Leslie and Frith repeated the experiment with human actors and found similar results.

Hybrid intelligent system denotes a software system which employs, in parallel, a combination of methods and techniques from artificial intelligence subfields, such as:

Dov M. Gabbay is an Israeli logician. He is Augustus De Morgan Professor Emeritus of Logic at the Group of Logic, Language and Computation, Department of Computer Science, King's College London.

The following outline is provided as an overview of and topical guide to artificial intelligence:

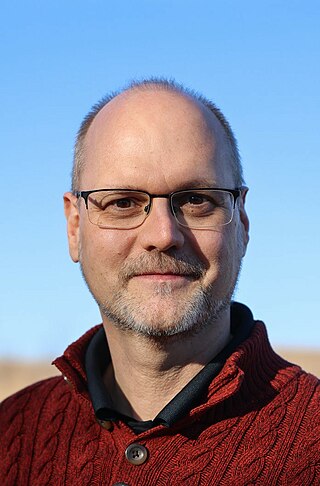

Ron Sun is a cognitive scientist who made significant contributions to computational psychology and other areas of cognitive science and artificial intelligence. He is currently professor of cognitive sciences at Rensselaer Polytechnic Institute, and formerly the James C. Dowell Professor of Engineering and Professor of Computer Science at University of Missouri. He received his Ph.D. in 1992 from Brandeis University.

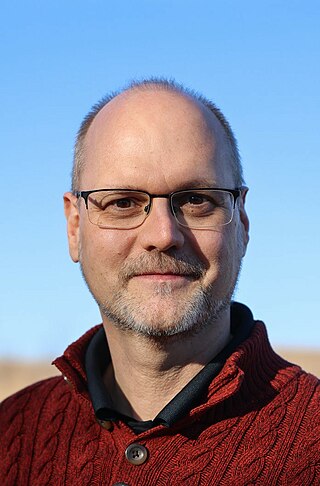

Artur d'Avila Garcez is a researcher in the field of computational logic and neural computation, in particular hybrid systems with application in software verification and information extraction. His contributions include neural-symbolic learning systems and nonclassical models of computation combining robust learning and reasoning. He is a Professor of Computer Science at City, University London.

In the philosophy of artificial intelligence, GOFAI is classical symbolic AI, as opposed to other approaches, such as neural networks, situated robotics, narrow symbolic AI or neuro-symbolic AI. The term was coined by philosopher John Haugeland in his 1985 book Artificial Intelligence: The Very Idea.

This glossary of artificial intelligence is a list of definitions of terms and concepts relevant to the study of artificial intelligence, its sub-disciplines, and related fields. Related glossaries include Glossary of computer science, Glossary of robotics, and Glossary of machine vision.

In artificial intelligence, a differentiable neural computer (DNC) is a memory augmented neural network architecture (MANN), which is typically recurrent in its implementation. The model was published in 2016 by Alex Graves et al. of DeepMind.

Explainable AI (XAI), often overlapping with Interpretable AI, or Explainable Machine Learning (XML), either refers to an artificial intelligence (AI) system over which it is possible for humans to retain intellectual oversight, or refers to the methods to achieve this. The main focus is usually on the reasoning behind the decisions or predictions made by the AI which are made more understandable and transparent. XAI counters the "black box" tendency of machine learning, where even the AI's designers cannot explain why it arrived at a specific decision.

Pascal Hitzler is a German American computer scientist specializing in Semantic Web and Artificial Intelligence. He is endowed Lloyd T. Smith Creativity in Engineering Chair, one of the Directors of the Institute for Digital Agriculture and Advanced Analytics (ID3A) and Director of the Center for Artificial Intelligence and Data Science (CAIDS) at Kansas State University, and the founding Editor-in-Chief of the Semantic Web journal and the IOS Press book series Studies on the Semantic Web.