Organization development (OD) is the study and implementation of practices, systems, and techniques that affect organizational change, with the goal of modifying an organization's performance and/or culture. The organizational changes are typically initiated by the group's stakeholders. OD emerged from human relations studies in the 1930s, during which psychologists realized that organizational structures and processes influence worker behavior and motivation. More recently, work on OD has expanded to focus on aligning organizations with their rapidly changing and complex environments through organizational learning, knowledge management, and transformation of organizational norms and values. Key concepts of OD theory include organizational climate, organizational culture, and organizational strategies.

Information Processing Language (IPL) is a programming language created by Allen Newell, Cliff Shaw, and Herbert A. Simon at RAND Corporation and the Carnegie Institute of Technology about 1956. Newell had the job of language specifier-application programmer, Shaw was the system programmer, and Simon had the job of application programmer-user.

In the history of artificial intelligence, symbolic artificial intelligence is the term for the collection of all methods in artificial intelligence research that are based on high-level symbolic (human-readable) representations of problems, logic and search. Symbolic AI used tools such as logic programming, production rules, semantic nets and frames, and it developed applications such as expert systems.

A modeling language is any artificial language that can be used to express information or knowledge or systems in a structure that is defined by a consistent set of rules. The rules are used for interpretation of the meaning of components in the structure.

Neat and scruffy are two contrasting approaches to artificial intelligence (AI) research. The distinction was made in the 1970s and was a subject of discussion until the mid-1980s. In the 1990s and the 21st century, AI research adopted "neat" approaches almost exclusively, and these have proven to be the most successful.

Action semantics is a framework for the formal specification of semantics of programming languages invented by David Watt and Peter D. Mosses in the 1990s. It is a mixture of denotational, operational and algebraic semantics.

In artificial intelligence, knowledge-based agents draw on a pool of logical sentences to infer conclusions about the world. At the knowledge level, we only need to specify what the agent knows and what its goals are: a logical abstraction separate from the details of implementation.
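As a rough illustration of an agent drawing conclusions from a pool of sentences, the following minimal sketch uses forward chaining over simple if-then rules. The class name `KnowledgeBasedAgent`, the `tell`/`ask` methods, and the example sentences are illustrative assumptions, not part of any particular system.

```python
from typing import FrozenSet, Iterable, List, Set, Tuple

# A rule is (premises, conclusion): once every premise is known, the conclusion follows.
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Set[str], rules: Iterable[Rule]) -> Set[str]:
    """Return every sentence derivable from `facts` by repeatedly applying `rules`."""
    rule_list: List[Rule] = list(rules)
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rule_list:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


class KnowledgeBasedAgent:
    """Hypothetical agent that stores sentences and answers queries by inference."""

    def __init__(self, rules: Iterable[Rule]) -> None:
        self.rules: List[Rule] = list(rules)
        self.facts: Set[str] = set()

    def tell(self, sentence: str) -> None:
        # Add a new sentence to the agent's pool of knowledge.
        self.facts.add(sentence)

    def ask(self, query: str) -> bool:
        # A query holds if it can be derived from what the agent knows.
        return query in forward_chain(self.facts, self.rules)


# Illustrative knowledge: rain makes the ground wet; wet ground is slippery.
agent = KnowledgeBasedAgent([
    (frozenset({"rain"}), "wet_ground"),
    (frozenset({"wet_ground"}), "slippery"),
])
agent.tell("rain")
```

Callers interact only through `tell` and `ask`; how inference is carried out stays hidden, which loosely mirrors the separation between the knowledge level and the implementation.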

The principle of rationality was coined by Karl R. Popper in his Harvard Lecture of 1963, and published in his book The Myth of the Framework. It is related to what he called the 'logic of the situation' in an Economica article of 1944/1945, published later in his book The Poverty of Historicism. According to Popper’s rationality principle, agents act in the most adequate way according to the objective situation. It is an idealized conception of human behavior which he used to drive his model of situational analysis.

Soar is a cognitive architecture, originally created by John Laird, Allen Newell, and Paul Rosenbloom at Carnegie Mellon University. It is now maintained and developed by John Laird's research group at the University of Michigan.

The intentional stance is a term coined by philosopher Daniel Dennett for the level of abstraction in which we view the behavior of an entity in terms of mental properties. It is part of a theory of mental content proposed by Dennett, which provides the underpinnings of his later works on free will, consciousness, folk psychology, and evolution.

Here is how it works: first you decide to treat the object whose behavior is to be predicted as a rational agent; then you figure out what beliefs that agent ought to have, given its place in the world and its purpose. Then you figure out what desires it ought to have, on the same considerations, and finally you predict that this rational agent will act to further its goals in the light of its beliefs. A little practical reasoning from the chosen set of beliefs and desires will in most instances yield a decision about what the agent ought to do; that is what you predict the agent will do.
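The prediction procedure described above can be sketched loosely in code. The sketch below assumes a deliberately simple encoding that is not part of the original account: beliefs map each available action to the outcomes the agent is taken to expect from it, and desires assign a numeric weight to each outcome. The function name `predict_action` and the umbrella example are hypothetical.

```python
from typing import Dict, Set


def predict_action(beliefs: Dict[str, Set[str]], desires: Dict[str, float]) -> str:
    """Predict the action a rational agent would choose.

    beliefs: action -> outcomes the agent is assumed to believe the action brings about
    desires: outcome -> how strongly the agent is assumed to want it (negative = unwanted)
    """
    def score(action: str) -> float:
        # Practical reasoning reduced to summing the desirability of believed outcomes.
        return sum(desires.get(outcome, 0.0) for outcome in beliefs[action])

    return max(beliefs, key=score)


# Illustrative attribution of beliefs and desires to a pedestrian in the rain.
beliefs = {
    "open_umbrella": {"stay_dry"},
    "keep_walking": {"arrive_early", "get_wet"},
}
desires = {"stay_dry": 2.0, "arrive_early": 1.0, "get_wet": -3.0}

prediction = predict_action(beliefs, desires)
```

Under these assumed weights the predicted action is `open_umbrella`, since it scores 2.0 against -2.0 for `keep_walking`.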

A physical symbol system takes physical patterns (symbols), combining them into structures (expressions) and manipulating them to produce new expressions.

In artificial intelligence, an intelligent agent (IA) is anything that perceives its environment, acts autonomously to achieve goals, and may improve its performance through learning or the use of knowledge. Intelligent agents may be simple or complex: a thermostat is considered an example of an intelligent agent, as is a human being, as is any system that meets the definition, such as a firm, a state, or a biome.
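The thermostat case can be made concrete with a minimal sketch of a simple reflex agent: it perceives a temperature and chooses an action that serves its goal of staying near a target. The class name, the `tolerance` parameter, and the action labels are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Thermostat:
    """Hypothetical reflex agent whose goal is to keep the temperature near `target`."""

    target: float
    tolerance: float = 0.5  # assumed dead band around the target, in degrees

    def act(self, temperature: float) -> str:
        # Percept: the current temperature. Action: a command for the heater.
        if temperature < self.target - self.tolerance:
            return "heat_on"
        if temperature > self.target + self.tolerance:
            return "heat_off"
        return "hold"


thermostat = Thermostat(target=20.0)
actions = [thermostat.act(t) for t in (18.0, 20.2, 22.0)]
```

This agent neither learns nor stores knowledge; it sits at the simple end of the range the definition allows.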

The history of artificial intelligence (AI) began in antiquity, with myths, stories and rumors of artificial beings endowed with intelligence or consciousness by master craftsmen. The seeds of modern AI were planted by classical philosophers who attempted to describe the process of human thinking as the mechanical manipulation of symbols. This work culminated in the invention of the programmable digital computer in the 1940s, a machine based on the abstract essence of mathematical reasoning. This device and the ideas behind it inspired a handful of scientists to begin seriously discussing the possibility of building an electronic brain.

A modeling perspective in information systems is a particular way to represent pre-selected aspects of a system. Any perspective has a different focus, conceptualization, dedication and visualization of what the model is representing.

A production system is a computer program, typically used to provide some form of artificial intelligence, that consists primarily of a set of rules about behavior, together with the mechanism needed to follow those rules as the system responds to states of the world. Those rules, termed productions, are a basic representation found useful in automated planning, expert systems, and action selection.
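A minimal sketch of the idea follows: each production pairs a condition with an action, and a small interpreter repeatedly fires the first production whose condition matches the current state. The first-match conflict-resolution strategy, the `run` function, and the tea-making rules are assumptions chosen for brevity; real production systems use a variety of matching and conflict-resolution schemes.

```python
from typing import Callable, Dict, List, NamedTuple

State = Dict[str, bool]


class Production(NamedTuple):
    name: str
    condition: Callable[[State], bool]  # when the rule applies
    action: Callable[[State], None]     # how the rule changes the state


def run(productions: List[Production], state: State, max_cycles: int = 100) -> List[str]:
    """Recognize-act loop: fire the first matching production until none match."""
    fired: List[str] = []
    for _ in range(max_cycles):
        match = next((p for p in productions if p.condition(state)), None)
        if match is None:
            break  # no rule applies, so the system halts
        match.action(state)
        fired.append(match.name)
    return fired


# Illustrative rules for making tea; the order encodes rule priority.
rules = [
    Production("fill", lambda s: not s["full"], lambda s: s.update(full=True)),
    Production("boil", lambda s: s["full"] and not s["boiled"],
               lambda s: s.update(boiled=True)),
    Production("pour", lambda s: s["boiled"] and not s["poured"],
               lambda s: s.update(poured=True)),
]

state = {"full": False, "boiled": False, "poured": False}
trace = run(rules, state)
```

Here `trace` records the firing order `fill`, `boil`, `pour`, after which no condition matches and the loop stops.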

Hubert Dreyfus was a critic of artificial intelligence research. In a series of papers and books, including Alchemy and AI (1965), What Computers Can't Do (1972) and Mind over Machine (1986), he presented a pessimistic assessment of AI's progress and a critique of the philosophical foundations of the field. Dreyfus' objections are discussed in most introductions to the philosophy of artificial intelligence, including Russell & Norvig (2003), the standard AI textbook, and in Fearn (2007), a survey of contemporary philosophy.

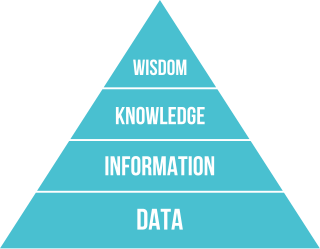

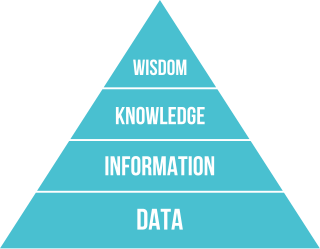

The DIKW pyramid, also known variously as the DIKW hierarchy, wisdom hierarchy, knowledge hierarchy, information hierarchy, information pyramid, and the data pyramid, refers loosely to a class of models for representing purported structural and/or functional relationships between data, information, knowledge, and wisdom. "Typically information is defined in terms of data, knowledge in terms of information, and wisdom in terms of knowledge".

Nouvelle artificial intelligence (AI) is an approach to artificial intelligence pioneered in the 1980s by Rodney Brooks, who was then part of the MIT Artificial Intelligence Laboratory. Nouvelle AI differs from classical AI by aiming to produce robots with intelligence levels similar to those of insects. Its researchers believe that intelligence can emerge organically from simple behaviors as these systems interact with the "real world," rather than with the constructed worlds that symbolic AI systems typically need to have programmed into them.

GOAL is an agent programming language for programming cognitive agents. GOAL agents derive their choice of action from their beliefs and goals. The language provides the basic building blocks for designing and implementing cognitive agents: programming constructs that allow and facilitate the manipulation of an agent's beliefs and goals and that structure its decision-making. It offers an intuitive programming framework based on common sense or practical reasoning.