Digital signal processing (DSP) is the use of digital processing, such as by computers or more specialized digital signal processors, to perform a wide variety of signal processing operations. The digital signals processed in this manner are a sequence of numbers that represent samples of a continuous variable in a domain such as time, space, or frequency. In digital electronics, a digital signal is represented as a pulse train, which is typically generated by the switching of a transistor.
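
As a minimal sketch of what "a sequence of numbers that represent samples of a continuous variable" can look like, the following example samples a sine wave at regular time steps and quantizes each sample to a signed integer. The function name `sample_sine`, the 8-bit depth, and the chosen frequencies are illustrative assumptions, not part of any particular DSP system.

```python
import math


def sample_sine(freq_hz, sample_rate_hz, n_samples, bits=8):
    """Sample a unit-amplitude sine wave and quantize it to signed integers."""
    max_code = 2 ** (bits - 1) - 1  # largest positive code, e.g. 127 for 8 bits
    samples = []
    for n in range(n_samples):
        t = n / sample_rate_hz                      # time of the n-th sample
        x = math.sin(2 * math.pi * freq_hz * t)     # continuous value at time t
        samples.append(round(x * max_code))         # quantized sample
    return samples


# One period of a 1 Hz sine sampled at 8 Hz (assumed values for illustration).
digital_signal = sample_sine(freq_hz=1.0, sample_rate_hz=8.0, n_samples=8)
print(digital_signal)
```

The resulting list is the digital signal; everything a processor does afterwards (filtering, transforms, detection) operates on such sequences.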

Microscopy is the technical field of using microscopes to view objects and areas of objects that cannot be seen with the naked eye. There are three well-known branches of microscopy: optical, electron, and scanning probe microscopy, along with the emerging field of X-ray microscopy.

Signal processing is an electrical engineering subfield that focuses on analyzing, modifying and synthesizing signals, such as sound, images, potential fields, seismic signals, altimetry data, and scientific measurements. Signal processing techniques are used to optimize transmissions, improve digital storage efficiency, correct distorted signals, enhance subjective video quality, and detect or pinpoint components of interest in a measured signal.

Fourier-transform spectroscopy is a measurement technique whereby spectra are collected based on measurements of the coherence of a radiative source, using time-domain or space-domain measurements of the radiation, electromagnetic or not. It can be applied to a variety of types of spectroscopy including optical spectroscopy, infrared spectroscopy, nuclear magnetic resonance (NMR) and magnetic resonance spectroscopic imaging (MRSI), mass spectrometry and electron spin resonance spectroscopy.
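
The core idea, that a spectrum can be recovered from a space-domain measurement by a Fourier transform, can be sketched numerically. The example below builds an idealized interferogram for a hypothetical source with two spectral lines and recovers them with a naive discrete Fourier transform. The line positions, intensities, and scan length are assumptions chosen so that each line falls exactly on a frequency bin; a real instrument would also need apodization and phase correction.

```python
import cmath
import math


def dft_magnitude(x):
    """Naive O(N^2) DFT; returns magnitudes of bins 0..N/2."""
    n = len(x)
    return [
        abs(sum(x[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n)))
        for k in range(n // 2 + 1)
    ]


# Hypothetical source: (cycles per scan, relative intensity) for each line.
lines = [(5, 1.0), (12, 0.5)]
n_points = 64  # number of optical-path-difference steps in the scan

# Idealized interferogram: each spectral line contributes one cosine
# as a function of optical path difference.
interferogram = [
    sum(a * math.cos(2 * math.pi * f * m / n_points) for f, a in lines)
    for m in range(n_points)
]

spectrum = dft_magnitude(interferogram)
peaks = sorted(range(len(spectrum)), key=lambda k: spectrum[k], reverse=True)[:2]
print(sorted(peaks))
```

The two strongest bins coincide with the two assumed spectral lines, and their magnitudes preserve the 2:1 intensity ratio.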

A wavelet is a wave-like oscillation with an amplitude that begins at zero, increases or decreases, and then returns to zero one or more times. A wavelet is often described as a "brief oscillation". A taxonomy of wavelets has been established, based on the number and direction of their pulses. Wavelets have specific properties that make them useful for signal processing.
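
To make the "brief oscillation" concrete, the sketch below evaluates the Ricker ("Mexican hat") wavelet, chosen here only as a familiar example, and checks two of its properties numerically: it decays to nearly zero away from the center, and its area is approximately zero. The unnormalized form and the sampling grid are assumptions made for illustration.

```python
import math


def ricker(t, sigma=1.0):
    """Unnormalized Ricker wavelet: (1 - (t/sigma)^2) * exp(-(t/sigma)^2 / 2)."""
    u = (t / sigma) ** 2
    return (1.0 - u) * math.exp(-u / 2.0)


step = 0.01
ts = [i * step - 8.0 for i in range(1601)]   # grid from -8 to 8
values = [ricker(t) for t in ts]

area = sum(values) * step                    # Riemann-sum estimate of the integral
print(f"peak={max(values):.3f}, edge={values[0]:.2e}, area={area:.2e}")
```

The near-zero area and the rapid decay at the edges are what distinguish a localized wavelet from an everlasting sinusoid.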

Digital image processing is the use of a digital computer to process digital images through an algorithm. As a subcategory or field of digital signal processing, digital image processing has many advantages over analog image processing. It allows a much wider range of algorithms to be applied to the input data and can avoid problems such as the build-up of noise and distortion during processing. Since images are defined over two dimensions, digital image processing may be modeled in the form of multidimensional systems. The generation and development of digital image processing are mainly affected by three factors: first, the development of computers; second, the development of mathematics; third, the increased demand for a wide range of applications in environment, agriculture, military, industry and medical science.
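
A small example of an algorithm applied to a two-dimensional image is a 3x3 mean (box) filter, sketched below on an image stored as a list of rows. The function name `mean_filter_3x3` and the border handling (averaging only the neighbors that exist) are assumptions for this illustration; other border conventions are equally common.

```python
def mean_filter_3x3(image):
    """Smooth a 2-D grayscale image (list of rows) with a 3x3 box filter.

    Border pixels are averaged over the neighbors that lie inside the image.
    """
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            window = [
                image[rr][cc]
                for rr in range(max(0, r - 1), min(h, r + 2))
                for cc in range(max(0, c - 1), min(w, c + 2))
            ]
            out[r][c] = sum(window) / len(window)
    return out


# A flat image with one bright outlier pixel in the center.
noisy = [
    [10, 10, 10],
    [10, 100, 10],
    [10, 10, 10],
]
smoothed = mean_filter_3x3(noisy)
print(smoothed[1][1])
```

The outlier is pulled toward its neighbors, which is the basic noise-suppression behavior of local averaging.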

A spectrogram is a visual representation of the spectrum of frequencies of a signal as it varies with time. When applied to an audio signal, spectrograms are sometimes called sonographs, voiceprints, or voicegrams. When the data are represented in a 3D plot they may be called waterfall displays.
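
One common way to compute the data behind a spectrogram is a short-time Fourier transform: the signal is cut into frames, each frame is windowed, and the magnitude spectrum of each frame becomes one column of the picture. The sketch below does this with a naive DFT and a Hann window; the frame length, hop size, and test signal are assumptions chosen for illustration.

```python
import cmath
import math


def spectrogram(signal, frame_len, hop):
    """Return one magnitude spectrum (bins 0..frame_len/2) per frame."""
    # Periodic Hann window to reduce spectral leakage within each frame.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len)
              for n in range(frame_len)]
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        seg = [signal[start + n] * window[n] for n in range(frame_len)]
        frames.append([
            abs(sum(seg[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                    for n in range(frame_len)))
            for k in range(frame_len // 2 + 1)
        ])
    return frames


# Test signal whose frequency jumps halfway through:
# 4 cycles per 32 samples, then 10 cycles per 32 samples.
n_total = 128
signal = [
    math.sin(2 * math.pi * (4 if n < n_total // 2 else 10) * n / 32)
    for n in range(n_total)
]

frames = spectrogram(signal, frame_len=32, hop=32)
dominant_bins = [max(range(len(f)), key=lambda k: f[k]) for f in frames]
print(dominant_bins)
```

Plotting `frames` as an image, with time on one axis and frequency bin on the other, would give the familiar spectrogram view; here the dominant bin per frame already shows the frequency change over time.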

In mathematics, deconvolution is the operation inverse to convolution. Both operations are used in signal processing and image processing. For example, it may be possible to recover the original signal after a filter (convolution) by using a deconvolution method with a certain degree of accuracy. Due to the measurement error of the recorded signal or image, it can be demonstrated that the worse the signal-to-noise ratio (SNR), the worse the reversing of a filter will be; hence, inverting a filter is not always a good solution as the error amplifies. Deconvolution offers a solution to this problem.
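
The relationship between convolution, inverse filtering, and noise sensitivity can be sketched with a frequency-domain division. In the example below, `deconvolve` divides the spectrum of the observed signal by the spectrum of the filter, with a small regularization term `eps` that limits error amplification where the filter response is weak. The regularized form, the kernel, and all names are assumptions for illustration; it is one of several possible deconvolution methods, not a canonical one.

```python
import cmath
import math


def dft(x, inverse=False):
    """Naive O(N^2) discrete Fourier transform (or its inverse)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [
        sum(x[m] * cmath.exp(sign * 2j * math.pi * k * m / n) for m in range(n))
        for k in range(n)
    ]
    return [v / n for v in out] if inverse else out


def circular_convolve(x, h):
    """Circular convolution of two equal-length sequences."""
    n = len(x)
    return [sum(x[m] * h[(i - m) % n] for m in range(n)) for i in range(n)]


def deconvolve(y, h, eps=0.0):
    """Estimate x from y = x (*) h by regularized spectral division.

    eps = 0 is the plain inverse filter; eps > 0 damps frequencies
    where |H| is small, trading accuracy for noise robustness.
    """
    Y, H = dft(y), dft(h)
    X = [Yk * Hk.conjugate() / (abs(Hk) ** 2 + eps) for Yk, Hk in zip(Y, H)]
    return [v.real for v in dft(X, inverse=True)]


original = [0, 0, 1, 3, 2, 0, 0, 0]
blur = [0.6, 0.3, 0.1, 0, 0, 0, 0, 0]      # hypothetical smoothing kernel
blurred = circular_convolve(original, blur)
recovered = deconvolve(blurred, blur, eps=1e-9)
print([round(v, 3) for v in recovered])
```

With noise-free data and a tiny `eps`, the original sequence is recovered almost exactly. With noisy data, a larger `eps` would typically be needed, at the cost of a less sharp reconstruction.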

In signal processing, a signal is a function that conveys information about a phenomenon. Any quantity that can vary over space or time can be used as a signal to share messages between observers. The IEEE Transactions on Signal Processing includes audio, video, speech, image, sonar, and radar as examples of signals. A signal may also be defined as any observable change in a quantity over space or time, even if it does not carry information.

Computational photography refers to digital image capture and processing techniques that use digital computation instead of optical processes. Computational photography can improve the capabilities of a camera, introduce features that were not possible at all with film-based photography, or reduce the cost or size of camera elements. Examples of computational photography include in-camera computation of digital panoramas, high-dynamic-range images, and light field cameras. Light field cameras use novel optical elements to capture three-dimensional scene information, which can then be used to produce 3D images, enhanced depth-of-field, and selective de-focusing. Enhanced depth-of-field reduces the need for mechanical focusing systems. All of these features use computational imaging techniques.

The following outline is provided as an overview of and topical guide to electrical engineering.

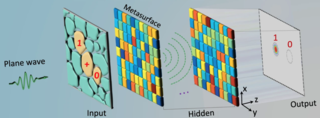

An optical neural network is a physical implementation of an artificial neural network with optical components. Early optical neural networks used a photorefractive volume hologram to interconnect arrays of input neurons to arrays of output neurons, with synaptic weights in proportion to the multiplexed hologram's strength. Volume holograms were further multiplexed using spectral hole burning to add one dimension of wavelength to space, achieving four-dimensional interconnects of two-dimensional arrays of neural inputs and outputs. This work led to extensive research on alternative methods that use the strength of the optical interconnect to implement neuronal communications.

Optical computing or photonic computing uses light waves produced by lasers or incoherent sources for data processing, data storage or data communication for computing. For decades, photons have shown promise to enable a higher bandwidth than the electrons used in conventional computers.

Computer-generated holography (CGH) is the method of digitally generating holographic interference patterns. A holographic image can be generated, for example, by digitally computing a holographic interference pattern and printing it onto a mask or film for subsequent illumination by a suitable coherent light source.
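
As a minimal sketch of digitally computing an interference pattern, the example below evaluates the intensity produced when an on-axis plane reference wave interferes with the spherical wave from a single point source, then thresholds it into a binary mask. The scalar-wave model, the neglect of the 1/r amplitude falloff, and all parameter values (grid size, pixel pitch, wavelength, distance) are assumptions for illustration only.

```python
import cmath
import math


def point_source_hologram(n, pitch, wavelength, z):
    """Intensity |R + O|^2 on an n x n grid.

    R is a unit-amplitude on-axis plane wave; O is a unit-amplitude
    spherical wave from a point a distance z behind the grid center.
    """
    k = 2 * math.pi / wavelength              # wavenumber
    half = (n - 1) / 2
    pattern = []
    for iy in range(n):
        row = []
        for ix in range(n):
            x = (ix - half) * pitch
            y = (iy - half) * pitch
            r = math.sqrt(x * x + y * y + z * z)   # distance to the point source
            obj = cmath.exp(1j * k * r)            # object wave (falloff ignored)
            row.append(abs(1.0 + obj) ** 2)        # interference intensity
        pattern.append(row)
    return pattern


# Assumed values: 65 x 65 pixels, 10 um pitch, 633 nm light, 5 cm distance.
pattern = point_source_hologram(n=65, pitch=10e-6, wavelength=633e-9, z=0.05)

# Binary mask suitable in principle for printing: 1 where intensity
# exceeds the mean level of 2, else 0.
mask = [[1 if v > 2.0 else 0 for v in row] for row in pattern]
print(sum(map(sum, mask)), "of", 65 * 65, "pixels are open")
```

The pattern consists of concentric fringes (a zone-plate-like structure); illuminating such a mask with coherent light would, under these idealized assumptions, reconstruct a focus at the position of the point source.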

Fourier-transform infrared spectroscopy (FTIR) is a technique used to obtain an infrared spectrum of absorption or emission of a solid, liquid, or gas. An FTIR spectrometer simultaneously collects high-resolution spectral data over a wide spectral range. This confers a significant advantage over a dispersive spectrometer, which measures intensity over a narrow range of wavelengths at a time.

The time-stretch analog-to-digital converter (TS-ADC), also known as the time-stretch enhanced recorder (TiSER), is an analog-to-digital converter (ADC) system that has the capability of digitizing very high bandwidth signals that cannot be captured by conventional electronic ADCs. Alternatively, it is also known as the photonic time-stretch (PTS) digitizer, since it uses an optical frontend. It relies on the process of time-stretch, which effectively slows down the analog signal in time before it can be digitized by a standard electronic ADC.

Time stretch microscopy, also known as serial time-encoded amplified imaging/microscopy or stretched time-encoded amplified imaging/microscopy (STEAM), is a fast real-time optical imaging method that provides MHz frame rate, ~100 ps shutter speed, and ~30 dB optical image gain. Based on the photonic time stretch technique, STEAM holds world records for shutter speed and frame rate in continuous real-time imaging. STEAM employs photonic time stretch with internal Raman amplification to realize optical image amplification, circumventing the fundamental trade-off between sensitivity and speed that affects virtually all optical imaging and sensing systems. This method uses a single-pixel photodetector, eliminating the need for a detector array and the associated readout time limitations. By avoiding these limitations and using optical image amplification to dramatically improve sensitivity at high image acquisition rates, STEAM achieves a shutter speed at least 1000 times faster than state-of-the-art CCD and CMOS cameras. Its frame rate is 1000 times faster than the fastest CCD cameras and 10 to 100 times faster than the fastest CMOS cameras.

Time stretch dispersive Fourier transform (TS-DFT), otherwise known as time-stretch transform (TST), temporal Fourier transform or photonic time-stretch (PTS), is a spectroscopy technique that uses optical dispersion instead of a grating or prism to separate the light wavelengths and analyze the optical spectrum in real time. It employs group-velocity dispersion (GVD) to transform the spectrum of a broadband optical pulse into a time-stretched temporal waveform. It is used to perform Fourier transformation on an optical signal on a single-shot basis and at high frame rates for real-time analysis of fast dynamic processes. It replaces a diffraction grating and detector array with a dispersive fiber and single-pixel detector, enabling ultrafast real-time spectroscopy and imaging. Its nonuniform variant, warped-stretch transform, realized with nonlinear group delay, offers variable-rate spectral domain sampling, as well as the ability to engineer the time-bandwidth product of the signal's envelope to match that of the data acquisition systems, acting as an information gearbox.
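
The wavelength-to-time mapping produced by group-velocity dispersion can be sketched to first order: a component offset by Δλ from the center wavelength arrives with a relative delay of roughly D · L · Δλ, where D is the fiber's dispersion parameter and L its length. The function name, the linear approximation (higher-order dispersion is ignored), and the numerical values below are assumptions for illustration.

```python
def wavelength_to_time_ps(wavelength_nm, center_nm, dispersion_ps_per_nm_km, length_km):
    """First-order arrival-time offset (ps) of a wavelength after dispersive fiber."""
    return dispersion_ps_per_nm_km * length_km * (wavelength_nm - center_nm)


# Assumed setup: |D| = 100 ps/(nm*km), 10 km of fiber, pulse centered at 1550 nm.
D, L, center = 100, 10, 1550

# Hypothetical spectrum samples: (wavelength in nm, relative power).
spectrum = [(1540, 0.2), (1545, 0.7), (1550, 1.0), (1555, 0.7), (1560, 0.2)]

# After the fiber, the same power values appear spread out in time.
waveform = [(wavelength_to_time_ps(wl, center, D, L), p) for wl, p in spectrum]
for t_ps, power in waveform:
    print(f"t = {t_ps / 1000:+.1f} ns, power = {power}")
```

Under these assumptions a 20 nm wide spectrum is stretched over 20 ns, slow enough for a single-pixel photodetector and a real-time digitizer to record the spectral shape directly as a time trace.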

An anamorphic stretch transform (AST), also referred to as warped stretch transform, is a physics-inspired signal transform that emerged from time stretch dispersive Fourier transform. The transform can be applied to analog temporal signals such as communication signals, or to digital spatial data such as images. The transform reshapes the data in such a way that its output has properties conducive to data compression and analytics. The reshaping consists of warped stretching in the Fourier domain. The name "anamorphic" is used because of the metaphoric analogy between the warped stretch operation and the warping of images in anamorphosis and surrealist artworks.

Single-shot multi-contrast x-ray imaging is an efficient and robust x-ray imaging technique used to obtain three different and complementary types of information (absorption, scattering, and phase contrast) from a single x-ray exposure on a detector, which is subsequently processed using Fourier analysis. Absorption is mainly due to attenuation and Compton scattering in the object, while phase contrast corresponds to the phase shift of the x-rays.