A meta-analysis is a statistical analysis that combines the results of multiple scientific studies. Meta-analyses can be performed when there are multiple scientific studies addressing the same question, with each individual study reporting measurements that are expected to have some degree of error. The aim then is to use approaches from statistics to derive a pooled estimate closest to the unknown common truth, based on how this error is perceived. It is thus a basic methodology of metascience. The evidence-based medicine literature considers meta-analytic results the most trustworthy source of evidence.
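
As a rough illustration of how a pooled estimate can be derived, the sketch below uses fixed-effect inverse-variance weighting, which is one common pooling approach and not the only one. The function name `pooled_estimate` and the three study results are hypothetical and chosen only for illustration.

```python
import math


def pooled_estimate(estimates, std_errors):
    """Fixed-effect inverse-variance pooling of per-study estimates.

    Each study is weighted by 1 / SE^2, so more precise studies count more.
    Returns the pooled estimate and its standard error.
    """
    if not estimates or len(estimates) != len(std_errors):
        raise ValueError("need one standard error per estimate")
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical effect estimates and standard errors from three studies.
estimates = [0.30, 0.10, 0.25]
std_errors = [0.10, 0.20, 0.10]

pooled, pooled_se = pooled_estimate(estimates, std_errors)
print(f"pooled = {pooled:.3f}, SE = {pooled_se:.3f}")
```

The pooled standard error is smaller than that of any single study, which is the sense in which combining studies sharpens the estimate. This sketch assumes all studies estimate the same underlying effect.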

A randomized controlled trial (RCT) is a form of scientific experiment used to control factors not under direct experimental control. Examples of RCTs are clinical trials that compare the effects of drugs, surgical techniques, medical devices, diagnostic procedures or other medical treatments.

Clinical trials are prospective biomedical or behavioral research studies on human participants designed to answer specific questions about biomedical or behavioral interventions, including new treatments and known interventions that warrant further study and comparison. Clinical trials generate data on dosage, safety and efficacy. They are conducted only after they have received health authority/ethics committee approval in the country where approval of the therapy is sought. These authorities are responsible for vetting the risk/benefit ratio of the trial—their approval does not mean the therapy is 'safe' or effective, only that the trial may be conducted.

In a blind or blinded experiment, information which may influence the participants of the experiment is withheld until after the experiment is complete. Good blinding can reduce or eliminate experimental biases that arise from a participant's expectations, the observer's effect on the participants, observer bias, confirmation bias, and other sources. A blind can be imposed on any participant of an experiment, including subjects, researchers, technicians, data analysts, and evaluators. In some cases, while blinding would be useful, it is impossible or unethical. For example, it is not possible to blind a patient to their treatment in a physical therapy intervention. A good clinical protocol ensures that blinding is as effective as possible within ethical and practical constraints.

Selection bias is the bias introduced by the selection of individuals, groups, or data for analysis in such a way that proper randomization is not achieved, thereby failing to ensure that the sample obtained is representative of the population intended to be analyzed. It is sometimes referred to as the selection effect. The phrase "selection bias" most often refers to the distortion of a statistical analysis, resulting from the method of collecting samples. If the selection bias is not taken into account, then some conclusions of the study may be false.

In published academic research, publication bias occurs when the outcome of an experiment or research study biases the decision to publish or otherwise distribute it. Publishing only results that show a significant finding disturbs the balance of findings in favor of positive results. The study of publication bias is an important topic in metascience.
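
The distortion can be made concrete with a small simulation. This is a sketch under stated assumptions: every study estimates a true effect of zero with the same standard error, and the publication rule (only positive results significant at the two-sided 5% level get published) is a deliberately extreme, hypothetical one. The function name and parameters are illustrative.

```python
import random
from statistics import mean


def simulate_publication_bias(n_studies=1000, true_effect=0.0,
                              std_error=1.0, seed=0):
    """Simulate study estimates and an assumed 'significant only' filter."""
    rng = random.Random(seed)
    # Each study reports the true effect plus normally distributed error.
    estimates = [rng.gauss(true_effect, std_error) for _ in range(n_studies)]
    # Assumed rule: publish only if the z-score exceeds 1.96 (positive, p < 0.05).
    published = [y for y in estimates if y / std_error > 1.96]
    return estimates, published


estimates, published = simulate_publication_bias()
print(f"all studies: mean = {mean(estimates):.2f} (n = {len(estimates)})")
print(f"published:   mean = {mean(published):.2f} (n = {len(published)})")
```

Even though the true effect is zero in this setup, the mean of the published subset is pushed well above the mean of all studies, because the filter keeps only the largest estimates.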

Field experiments are experiments carried out outside of laboratory settings.

In statistics, imputation is the process of replacing missing data with substituted values. When substituting for a data point, it is known as "unit imputation"; when substituting for a component of a data point, it is known as "item imputation". There are three main problems that missing data causes: missing data can introduce a substantial amount of bias, make the handling and analysis of the data more arduous, and create reductions in efficiency. Because missing data can create problems for analyzing data, imputation is seen as a way to avoid pitfalls involved with listwise deletion of cases that have missing values. That is to say, when one or more values are missing for a case, most statistical packages default to discarding any case that has a missing value, which may introduce bias or affect the representativeness of the results. Imputation preserves all cases by replacing missing data with an estimated value based on other available information. Once all missing values have been imputed, the data set can then be analysed using standard techniques for complete data. There have been many theories embraced by scientists to account for missing data but the majority of them introduce bias. A few of the well known attempts to deal with missing data include: hot deck and cold deck imputation; listwise and pairwise deletion; mean imputation; non-negative matrix factorization; regression imputation; last observation carried forward; stochastic imputation; and multiple imputation.
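
The difference between listwise deletion and imputation can be sketched with a toy data set. The example uses mean imputation, one of the simpler methods named above, purely for illustration; the data, column meanings and function names are hypothetical, and missing items are represented as `None`.

```python
from statistics import mean


def listwise_delete(rows):
    """Drop every case (row) that has at least one missing value."""
    return [row for row in rows if None not in row]


def mean_impute(rows):
    """Replace each missing item with the mean of the observed values
    in the same column. Assumes every column has at least one observed value."""
    columns = list(zip(*rows))
    column_means = [mean(v for v in col if v is not None) for col in columns]
    return [
        tuple(m if v is None else v for v, m in zip(row, column_means))
        for row in rows
    ]


# Hypothetical cases: (age, systolic blood pressure); None marks a missing item.
rows = [(50, 120), (60, None), (None, 140), (70, 130)]

complete_cases = listwise_delete(rows)
imputed = mean_impute(rows)

print(f"listwise deletion keeps {len(complete_cases)} of {len(rows)} cases")
print(f"mean imputation keeps {len(imputed)} of {len(rows)} cases: {imputed}")
```

Listwise deletion discards half of the cases here, while imputation preserves all of them. Mean imputation in particular understates the variability of the imputed column, which is one reason simple methods of this kind can introduce bias.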

The number needed to treat (NNT) or number needed to treat for an additional beneficial outcome (NNTB) is an epidemiological measure used in communicating the effectiveness of a health-care intervention, typically a treatment with medication. The NNT is the average number of patients who need to be treated to prevent one additional bad outcome. It is defined as the inverse of the absolute risk reduction, and computed as $1/(I_u - I_e)$, where $I_e$ is the incidence in the treated (exposed) group, and $I_u$ is the incidence in the control (unexposed) group. This calculation implicitly assumes monotonicity, that is, no individual can be harmed by treatment. The modern approach, based on counterfactual conditionals, relaxes this assumption and yields bounds on NNT.
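
A worked example of the classical calculation, taking the absolute risk reduction as the control-group incidence minus the treated-group incidence. The incidences below are hypothetical, and the function name is illustrative. The sketch covers only the simple inverse, not the counterfactual bounds.

```python
def number_needed_to_treat(control_incidence, treated_incidence):
    """Return the NNT as the inverse of the absolute risk reduction (ARR).

    ARR = control incidence - treated incidence. If the treatment does not
    reduce risk, the NNT in this simple form is undefined, so an error is raised.
    """
    arr = control_incidence - treated_incidence
    if arr <= 0:
        raise ValueError("no absolute risk reduction; NNT is undefined here")
    return 1.0 / arr


# Hypothetical trial: 25% of controls and 12.5% of treated patients
# experience the bad outcome.
nnt = number_needed_to_treat(control_incidence=0.25, treated_incidence=0.125)
print(f"ARR = {0.25 - 0.125}, NNT = {nnt}")
```

With these figures the absolute risk reduction is 0.125, so on average 8 patients need to be treated to prevent one additional bad outcome. When the result is not a whole number, it is conventionally rounded up.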

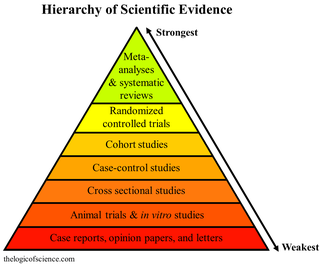

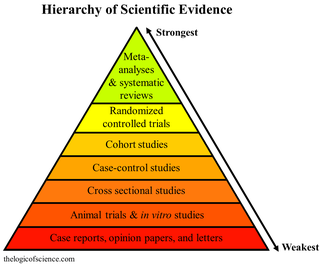

A hierarchy of evidence, comprising levels of evidence (LOEs), that is, evidence levels (ELs), is a heuristic used to rank the relative strength of results obtained from experimental research, especially medical research. There is broad agreement on the relative strength of large-scale, epidemiological studies. More than 80 different hierarchies have been proposed for assessing medical evidence. The design of the study and the endpoints measured affect the strength of the evidence. In clinical research, the best evidence for treatment efficacy is mainly from meta-analyses of randomized controlled trials (RCTs). For the study of side effects, systematic reviews of completed, high-quality randomized controlled trials, such as those published by the Cochrane Collaboration, rank the same as systematic reviews of completed, high-quality observational studies. Evidence hierarchies are often applied in evidence-based practices and are integral to evidence-based medicine (EBM).

In medicine an intention-to-treat (ITT) analysis of the results of a randomized controlled trial is based on the initial treatment assignment and not on the treatment eventually received. ITT analysis is intended to avoid various misleading artifacts that can arise in intervention research such as non-random attrition of participants from the study or crossover. ITT is also simpler than other forms of study design and analysis, because it does not require observation of compliance status for units assigned to different treatments or incorporation of compliance into the analysis. Although ITT analysis is widely employed in published clinical trials, it can be incorrectly described and there are some issues with its application. Furthermore, there is no consensus on how to carry out an ITT analysis in the presence of missing outcome data.
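
The distinction between assignment and treatment received can be sketched as follows. The records, field names and the contrasting "as-treated" comparison are hypothetical and included only to show what ITT does differently. Outcomes are assumed to be fully observed, so the sketch does not address the missing-data problem mentioned above.

```python
def risk(records):
    """Proportion of records in which the outcome event occurred."""
    return sum(r["event"] for r in records) / len(records)


def risk_difference(records, group_key):
    """Risk in the treatment group minus risk in the control group,
    where group membership is read from `group_key`."""
    treated = [r for r in records if r[group_key] == "treatment"]
    control = [r for r in records if r[group_key] == "control"]
    return risk(treated) - risk(control)


# Hypothetical trial: one participant assigned to treatment crossed over
# to control. event = 1 means the bad outcome occurred.
records = [
    {"assigned": "treatment", "received": "treatment", "event": 0},
    {"assigned": "treatment", "received": "treatment", "event": 0},
    {"assigned": "treatment", "received": "treatment", "event": 1},
    {"assigned": "treatment", "received": "control", "event": 1},
    {"assigned": "control", "received": "control", "event": 1},
    {"assigned": "control", "received": "control", "event": 1},
    {"assigned": "control", "received": "control", "event": 0},
    {"assigned": "control", "received": "control", "event": 1},
]

# ITT groups by initial assignment; the contrast groups by treatment received.
itt = risk_difference(records, group_key="assigned")
as_treated = risk_difference(records, group_key="received")
print(f"ITT risk difference:        {itt:+.3f}")
print(f"as-treated risk difference: {as_treated:+.3f}")
```

The ITT comparison keeps the groups exactly as randomization formed them, whereas regrouping by treatment received moves the crossover participant and changes the estimate.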

In fields such as epidemiology, social sciences, psychology and statistics, an observational study draws inferences from a sample to a population where the independent variable is not under the control of the researcher because of ethical concerns or logistical constraints. One common observational study is about the possible effect of a treatment on subjects, where the assignment of subjects into a treated group versus a control group is outside the control of the investigator. This is in contrast with experiments, such as randomized controlled trials, where each subject is randomly assigned to a treated group or a control group. Because they lack an assignment mechanism, observational studies naturally present difficulties for inferential analysis.

In epidemiology, reporting bias is defined as "selective revealing or suppression of information" by subjects. In artificial intelligence research, the term reporting bias is used to refer to people's tendency to under-report all the information available.

In statistics, missing data, or missing values, occur when no data value is stored for the variable in an observation. Missing data are a common occurrence and can have a significant effect on the conclusions that can be drawn from the data.

In natural and social science research, a protocol is most commonly a predefined procedural method in the design and implementation of an experiment. Protocols are written whenever it is desirable to standardize a laboratory method to ensure successful replication of results by others in the same laboratory or by other laboratories. Additionally, and by extension, protocols have the advantage of facilitating the assessment of experimental results through peer review. In addition to detailed procedures, equipment, and instruments, protocols will also contain study objectives, reasoning for experimental design, reasoning for chosen sample sizes, safety precautions, and how results were calculated and reported, including statistical analysis and any rules for predefining and documenting excluded data to avoid bias.

Impact evaluation assesses the changes that can be attributed to a particular intervention, such as a project, program or policy, both the intended ones, as well as ideally the unintended ones. In contrast to outcome monitoring, which examines whether targets have been achieved, impact evaluation is structured to answer the question: how would outcomes such as participants' well-being have changed if the intervention had not been undertaken? This involves counterfactual analysis, that is, "a comparison between what actually happened and what would have happened in the absence of the intervention." Impact evaluations seek to answer cause-and-effect questions. In other words, they look for the changes in outcome that are directly attributable to a program.

Repeated measures design is a research design that involves multiple measures of the same variable taken on the same or matched subjects either under different conditions or over two or more time periods. For instance, repeated measurements are collected in a longitudinal study in which change over time is assessed.

In medicine, a stepped-wedge trial is a type of randomised controlled trial (RCT). An RCT is a scientific experiment that is designed to reduce bias when testing a new medical treatment, a social intervention, or another testable hypothesis.

Roderick Joseph Alexander Little is an academic statistician, whose main research contributions lie in the statistical analysis of data with missing values and the analysis of complex sample survey data. Little is Richard D. Remington Distinguished University Professor of Biostatistics in the Department of Biostatistics at the University of Michigan, where he also holds academic appointments in the Department of Statistics and the Institute for Social Research.

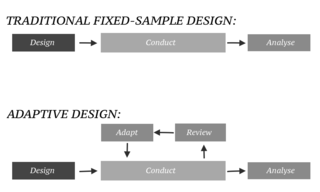

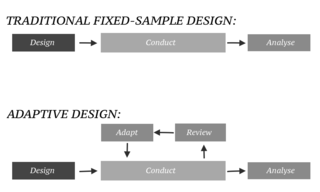

In an adaptive design of a clinical trial, the parameters and conduct of the trial for a candidate drug or vaccine may be changed based on an interim analysis. Adaptive design typically involves advanced statistics to interpret a clinical trial endpoint. This is in contrast to traditional single-arm clinical trials or randomized clinical trials (RCTs) that are static in their protocol and do not modify any parameters until the trial is completed. The adaptation process takes place at certain points in the trial, prescribed in the trial protocol. Importantly, this trial protocol is set before the trial begins, with the adaptation schedule and processes specified. Adaptations may include modifications to: dosage, sample size, drug undergoing trial, patient selection criteria and/or "cocktail" mix. The PANDA provides not only a summary of different adaptive designs, but also comprehensive information on adaptive design planning, conduct, analysis and reporting.