The gambler's fallacy, also known as the Monte Carlo fallacy or the fallacy of the maturity of chances, is the belief that, if an independent random event has occurred more frequently than expected, it is less likely to happen again in the future (or, conversely, that an event that has occurred less frequently than expected is more likely to happen). The fallacy is commonly associated with gambling, where it may be believed, for example, that the next dice roll is more likely than usual to be a six because there have recently been fewer sixes than expected.
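
For independent events, past outcomes carry no information about the next one. The following Python sketch checks this by simulation, assuming a fair six-sided die (the function name `p_six_after_drought` is hypothetical): among rolls that come right after a run of non-sixes, the share of sixes should stay close to 1/6 however long the run was.

```python
import random

def p_six_after_drought(n_rolls: int, drought: int, seed: int = 0) -> float:
    """Estimate P(six) on rolls that come right after `drought` or more non-sixes in a row."""
    rng = random.Random(seed)
    run = 0            # length of the current streak of non-sixes
    trials = hits = 0  # rolls that followed a drought, and how many of them were sixes
    for _ in range(n_rolls):
        roll = rng.randint(1, 6)
        if run >= drought:
            trials += 1
            hits += roll == 6
        run = 0 if roll == 6 else run + 1
    return hits / trials

p = p_six_after_drought(300_000, drought=5)
print(f"P(six | previous 5 rolls were not sixes) ~ {p:.3f}; fair die: {1 / 6:.3f}")
```

Changing `drought` moves the estimate only by sampling noise, because each roll is generated independently of the ones before it.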

In statistics, sampling bias is a bias in which a sample is collected in such a way that some members of the intended population have a lower or higher sampling probability than others. It results in a biased sample of a population in which not all individuals, or instances, were equally likely to have been selected. If this is not accounted for, results can be erroneously attributed to the phenomenon under study rather than to the method of sampling.
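
A small simulation illustrates how unequal selection probabilities distort an estimate. In this sketch the population, sample sizes, and seed are invented, and the biased design is an assumption chosen for illustration: each member's chance of selection is proportional to its own value, so large values are over-represented.

```python
import random
import statistics

rng = random.Random(42)
# Hypothetical population of 10,000 non-negative values (exponential, mean about 1.0).
population = [rng.expovariate(1.0) for _ in range(10_000)]

# Equal-probability design: every member is equally likely to be chosen.
fair_sample = rng.sample(population, 1_000)

# Biased design (assumed for illustration): selection probability proportional
# to the member's own value.
biased_sample = rng.choices(population, weights=population, k=1_000)

pop_mean = statistics.fmean(population)
fair_mean = statistics.fmean(fair_sample)
biased_mean = statistics.fmean(biased_sample)
print(f"population {pop_mean:.2f} | fair sample {fair_mean:.2f} | biased sample {biased_mean:.2f}")
```

The biased sample's mean comes out roughly twice the population mean. Nothing about the population changed; the difference comes entirely from the sampling method.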

Statistics is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments.

A ganzfeld experiment is an assessment used by parapsychologists that they contend can test for extrasensory perception (ESP) or telepathy. In these experiments, a "sender" attempts to mentally transmit an image to a "receiver" who is in a state of sensory deprivation. The receiver is normally asked to choose among a limited number of options for what the transmitted image was supposed to be. Parapsychologists who propose that such telepathy is possible argue that success rates above those expected by chance are evidence for ESP. Consistent, independent replication of ganzfeld experiments has not been achieved, and, in spite of strenuous arguments by parapsychologists to the contrary, there is no validated evidence accepted by the wider scientific community for the existence of any parapsychological phenomena. Ongoing parapsychology research using ganzfeld experiments has been criticized by independent reviewers as having the hallmarks of pseudoscience.
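
Whether a hit rate exceeds "the expectation from randomness" is commonly framed with a binomial model. The sketch below is a minimal illustration, not a reconstruction of any published analysis. It assumes independent trials, a fixed chance hit rate, and four options per trial; the counts and the helper name `p_at_least` are hypothetical.

```python
from math import comb

def p_at_least(hits: int, trials: int, p_chance: float) -> float:
    """One-sided binomial tail P(X >= hits) for X ~ Binomial(trials, p_chance):
    the probability of at least `hits` successes if every choice were a random guess."""
    return sum(
        comb(trials, k) * p_chance**k * (1 - p_chance) ** (trials - k)
        for k in range(hits, trials + 1)
    )

# Hypothetical session: 100 trials, 4 options per trial (chance rate 0.25), 32 hits.
print(f"P(>= 32 hits by chance) = {p_at_least(32, 100, 0.25):.4f}")
```

A small tail probability only says the count is unlikely under pure guessing with these exact assumptions. It cannot rule out flaws in the protocol, and it says nothing about independent replication.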

Post hoc ergo propter hoc is an informal fallacy that one commits when reasoning, "Since event Y followed event X, event Y must have been caused by event X." It presumes that an event was caused by a closely preceding event merely on the grounds of temporal succession. This reasoning is fallacious because temporal succession alone does not establish a causal connection. The name is often shortened to the post hoc fallacy. A logical fallacy of the questionable cause variety, it is subtly different from the fallacy cum hoc ergo propter hoc, in which two events occur simultaneously or the chronological ordering is insignificant or unknown.

Statistical bias, in the mathematical field of statistics, is a systematic tendency in which the methods used to gather data and generate statistics present an inaccurate, skewed or biased depiction of reality. Statistical bias can arise at numerous stages of the data collection and analysis process, including the source of the data, the methods used to collect the data, the estimator chosen, and the methods used to analyze the data. Data analysts can take various measures at each stage of the process to reduce the impact of statistical bias in their work. Understanding the source of statistical bias can help to assess whether the observed results are close to actuality. Issues of statistical bias have been argued to be closely linked to issues of statistical validity.
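
The choice of estimator alone can introduce bias. A classic small illustration, sketched here in Python under the assumption of normally distributed data (the function name is hypothetical), compares two estimators of variance: dividing the sum of squared deviations by n underestimates the true variance by a factor of (n - 1)/n on average, while dividing by n - 1 (Bessel's correction) removes that bias.

```python
import random
import statistics

def average_estimates(n: int, reps: int, seed: int = 1) -> tuple[float, float]:
    """Average two variance estimators over `reps` samples of size n from N(0, 1),
    whose true variance is 1."""
    rng = random.Random(seed)
    sum_div_n = sum_div_n_minus_1 = 0.0
    for _ in range(reps):
        xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
        sum_div_n += statistics.pvariance(xs)         # squared deviations / n
        sum_div_n_minus_1 += statistics.variance(xs)  # squared deviations / (n - 1)
    return sum_div_n / reps, sum_div_n_minus_1 / reps

div_n, div_n_minus_1 = average_estimates(n=5, reps=10_000)
print(f"true variance 1.00 | divide by n: {div_n:.3f} | divide by n-1: {div_n_minus_1:.3f}")
```

With n = 5 the first average should land near 0.8 and the second near 1.0. The gap shrinks as n grows, so this particular bias matters most for small samples.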

Cherry picking, suppressing evidence, or the fallacy of incomplete evidence is the act of pointing to individual cases or data that seem to confirm a particular position while ignoring a significant portion of related and similar cases or data that may contradict that position. Cherry picking may be committed intentionally or unintentionally.
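
A small simulation shows how selective citation can manufacture an effect. In this sketch the number of studies, their sample sizes, and the seed are arbitrary assumptions, and the true effect is zero by construction. Averaging every study gives a value near zero, while averaging only the most favorable few gives a clearly positive one.

```python
import random
import statistics

rng = random.Random(7)
# Hypothetical data: 50 independent studies of an effect that is truly zero.
# Each study reports the mean of 30 noisy measurements.
study_means = [
    statistics.fmean([rng.gauss(0.0, 1.0) for _ in range(30)]) for _ in range(50)
]

# Honest summary: average over all of the evidence.
all_evidence = statistics.fmean(study_means)
# Cherry picking: cite only the five studies that happen to look most favorable.
cherry_picked = statistics.fmean(sorted(study_means, reverse=True)[:5])

print(f"mean effect, all studies:      {all_evidence:+.3f}")
print(f"mean effect, top-5 cited only: {cherry_picked:+.3f}")
```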

Selection bias is the bias introduced by the selection of individuals, groups, or data for analysis in such a way that proper randomization is not achieved, thereby failing to ensure that the sample obtained is representative of the population intended to be analyzed. It is sometimes referred to as the selection effect. The phrase "selection bias" most often refers to the distortion of a statistical analysis, resulting from the method of collecting samples. If the selection bias is not taken into account, then some conclusions of the study may be false.

Anecdotal evidence is evidence based only on personal observation, collected in a casual or non-systematic manner.

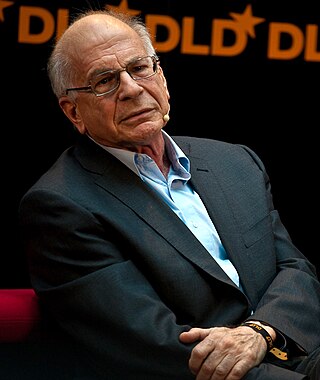

The representativeness heuristic is used when judging the probability of an event by how representative it is, in character and essence, of a known prototypical event. It is one of a group of heuristics proposed by psychologists Amos Tversky and Daniel Kahneman in the early 1970s, who defined representativeness as "the degree to which [an event] (i) is similar in essential characteristics to its parent population, and (ii) reflects the salient features of the process by which it is generated". The representativeness heuristic works by comparing an event to a prototype or stereotype that we already have in mind. For example, if we see a person who is dressed in eccentric clothes and reading a poetry book, we might be more likely to think that they are a poet than an accountant. This is because the person's appearance and behavior are more representative of the stereotype of a poet than of an accountant.
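
A judgment based only on resemblance leaves out how common each category is in the first place. Bayes' theorem combines both: resemblance enters as the likelihood of the description under each hypothesis, and frequency enters as the prior (base rate). The numbers below are invented purely to illustrate the poet-versus-accountant example, and the helper name `posterior` is hypothetical.

```python
def posterior(prior: float, likelihood_h: float, likelihood_not_h: float) -> float:
    """Bayes' theorem for a yes/no hypothesis H: P(H | evidence)."""
    joint_h = likelihood_h * prior
    return joint_h / (joint_h + likelihood_not_h * (1.0 - prior))

# Hypothetical numbers: only "poet" and "accountant" are considered, accountants
# outnumber poets ten to one, and the description is ten times more typical of poets.
p_poet = posterior(prior=1 / 11, likelihood_h=0.5, likelihood_not_h=0.05)
print(f"P(poet | description) = {p_poet:.2f}")
```

Even though the description fits poets ten times better, under these assumed base rates the two explanations end up equally likely. A judgment driven purely by resemblance would overstate the chance that the person is a poet.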

The planning fallacy is a phenomenon in which predictions about how much time will be needed to complete a future task display an optimism bias and underestimate the time needed. This phenomenon sometimes occurs regardless of the individual's knowledge that past tasks of a similar nature have taken longer to complete than generally planned. The bias affects predictions only about one's own tasks. On the other hand, when outside observers predict task completion times, they tend to exhibit a pessimistic bias, overestimating the time needed. The planning fallacy involves estimates of task completion times more optimistic than those encountered in similar projects in the past.

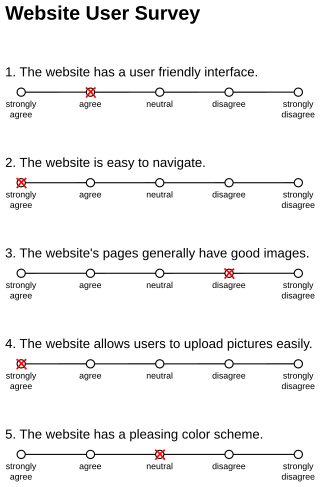

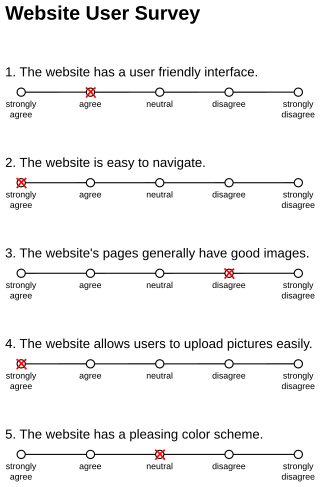

Response bias is a general term for a wide range of tendencies for participants to respond inaccurately or falsely to questions. These biases are prevalent in research involving participant self-report, such as structured interviews or surveys. Response biases can have a large impact on the validity of questionnaires or surveys.

Statistics, when used in a misleading fashion, can trick the casual observer into believing something other than what the data shows. That is, a misuse of statistics occurs when a statistical argument asserts a falsehood. In some cases, the misuse may be accidental. In others, it is purposeful and for the gain of the perpetrator. When the statistical reasoning involved is false or misapplied, this constitutes a statistical fallacy.

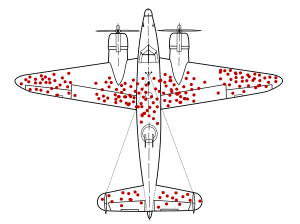

Abraham Wald was a Jewish Hungarian mathematician who contributed to decision theory, geometry and econometrics, and founded the field of sequential analysis. One of his well-known statistical works, written during World War II, addressed how to minimize damage to bomber aircraft and took survivorship bias into account in its calculations. He spent his research career at Columbia University. He was the grandson of Rabbi Moshe Shmuel Glasner.
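
Survivorship bias in this setting arises because only aircraft that returned could be inspected. The minimal simulation below shows the kind of distortion involved. The sections, hit counts, and per-hit lethality values are invented assumptions, not historical data. Sections where a hit is most dangerous end up under-represented among the hits seen on returning aircraft.

```python
import random
from collections import Counter

rng = random.Random(3)
SECTIONS = ["engine", "fuselage", "wings", "tail"]
# Hypothetical chance that one hit to a section brings the aircraft down.
LETHALITY = {"engine": 0.6, "fuselage": 0.1, "wings": 0.1, "tail": 0.2}

all_hits, hits_on_returning = Counter(), Counter()
for _ in range(20_000):
    # Assumption: each sortie takes 1 to 3 hits, each on a uniformly random section.
    hits = [rng.choice(SECTIONS) for _ in range(rng.randint(1, 3))]
    all_hits.update(hits)
    if all(rng.random() > LETHALITY[s] for s in hits):  # aircraft survives every hit
        hits_on_returning.update(hits)                   # only survivors are inspected

def shares(counts: Counter) -> dict[str, float]:
    """Fraction of all recorded hits that landed on each section."""
    total = sum(counts.values())
    return {s: counts[s] / total for s in SECTIONS}

share_all, share_returning = shares(all_hits), shares(hits_on_returning)
for s in SECTIONS:
    print(f"{s:<9} all hits {share_all[s]:.2f} | hits on returning aircraft {share_returning[s]:.2f}")
```

Engines take about a quarter of all hits here, yet show far fewer on the aircraft that came back. Reading only the returning aircraft would point armor at exactly the wrong places.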

The overconfidence effect is a well-established bias in which a person's subjective confidence in their judgments is reliably greater than the objective accuracy of those judgments, especially when confidence is relatively high. Overconfidence is one example of a miscalibration of subjective probabilities. Throughout the research literature, overconfidence has been defined in three distinct ways: (1) overestimation of one's actual performance; (2) overplacement of one's performance relative to others; and (3) overprecision in expressing unwarranted certainty in the accuracy of one's beliefs.
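
Miscalibration can be quantified in several ways. One simple summary, assumed here for illustration, is the gap between a person's average stated confidence and the proportion of their judgments that turned out correct. The helper `overconfidence` and the answer data are hypothetical.

```python
def overconfidence(confidences: list[float], correct: list[bool]) -> float:
    """Mean stated confidence minus observed accuracy; a positive value means overconfident."""
    if len(confidences) != len(correct) or not confidences:
        raise ValueError("need two non-empty lists of equal length")
    mean_confidence = sum(confidences) / len(confidences)
    accuracy = sum(correct) / len(correct)  # True counts as 1, False as 0
    return mean_confidence - accuracy

# Hypothetical answers: a respondent states 90% confidence on ten items but gets six right.
score = overconfidence([0.9] * 10, [True] * 6 + [False] * 4)
print(f"overconfidence: {score:+.2f}")  # 0.9 stated vs 0.6 achieved
```

This single number captures overall miscalibration. It does not separate the three definitions listed above, which require different measurements (for example, comparisons with other people for overplacement).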

In social psychology, illusory superiority is a cognitive bias wherein people overestimate their own qualities and abilities compared to others. Illusory superiority is one of many positive illusions, relating to the self, that are evident in the study of intelligence, the effective performance of tasks and tests, and the possession of desirable personal characteristics and personality traits. Overestimation of abilities compared to an objective measure is known as the overconfidence effect.

The "hot hand" is a phenomenon, previously considered a cognitive social bias, in which a person who experiences a successful outcome has a greater chance of success in further attempts. The concept is often applied to sports and skill-based tasks in general and originates from basketball, where a shooter is thought to be more likely to score if their previous attempts were successful (i.e., while having the "hot hand"). While previous success at a task can indeed change a player's psychological attitude and subsequent success rate, for many years researchers found no evidence for a "hot hand" in practice and dismissed it as fallacious. However, later research questioned whether the belief is indeed a fallacy. Some recent studies using modern statistical analysis have observed evidence for the "hot hand" in some sporting activities, while other recent studies have not. Moreover, evidence suggests that only a small subset of players may show a "hot hand" and, among those who do, its magnitude tends to be small.
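
Part of the later reassessment (notably work by Miller and Sanjurjo) concerns a subtle bias in how streaks were measured. In a finite sequence, the proportion of successes that immediately follow a success is, on average across sequences, below the true success rate. The sketch below demonstrates this by exact enumeration, using a fair coin as a stand-in for a 50% shooter. The function name is hypothetical, and sequences with no qualifying flip are excluded because their proportion is undefined.

```python
from fractions import Fraction
from itertools import product

def expected_prop_h_after_h(n: int) -> Fraction:
    """Average, over all equally likely sequences of n fair coin flips, of the proportion
    of heads among flips that immediately follow a head (undefined cases excluded)."""
    total, counted = Fraction(0), 0
    for seq in product("HT", repeat=n):
        # Outcomes of the flips that come right after a head.
        followers = [seq[i + 1] for i in range(n - 1) if seq[i] == "H"]
        if followers:
            total += Fraction(followers.count("H"), len(followers))
            counted += 1
    return total / counted

print(expected_prop_h_after_h(4))  # 17/42, about 0.405, not 1/2
```

For four flips the average is 17/42 rather than 1/2. A purely random shooter analyzed this way would therefore appear slightly "cold", so an analysis that ignores the bias understates any genuine streakiness.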

Funding bias, also known as sponsorship bias, funding outcome bias, funding publication bias, and funding effect, refers to the tendency of a scientific study to support the interests of the study's financial sponsor. This phenomenon is sufficiently well recognized that researchers undertake studies to examine bias in previously published studies. Funding bias has been associated, in particular, with research into chemical toxicity, tobacco, and pharmaceutical drugs. It is an instance of experimenter's bias.

Success is the state or condition of meeting a defined range of expectations. It may be viewed as the opposite of failure. The criteria for success depend on context, and may be relative to a particular observer or belief system. One person might consider a success what another person considers a failure, particularly in cases of direct competition or a zero-sum game. Similarly, the degree of success or failure in a situation may be differently viewed by distinct observers or participants, such that a situation that one considers to be a success, another might consider to be a failure, a qualified success or a neutral situation. For example, a film that is a commercial failure or even a box-office bomb can go on to receive a cult following, with the initial lack of commercial success even lending a cachet of subcultural coolness.