Slashdot is a social news website that originally billed itself as "News for Nerds. Stuff that Matters". It features news stories on science, technology, and politics that are submitted and evaluated by site users and editors. Each story has a comments section where users can add online comments.

An Internet forum, or message board, is an online discussion site where people can hold conversations in the form of posted messages. They differ from chat rooms in that messages are often longer than one line of text, and are at least temporarily archived. Also, depending on the access level of a user or the forum set-up, a posted message might need to be approved by a moderator before it becomes publicly visible.
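The approval step described above can be illustrated with a minimal sketch. It is not how any particular forum package works; the names `Forum`, `Post`, `Status`, `trusted_users`, and `premoderate` are hypothetical and chosen only for this example. It assumes a post is either pending or publicly visible, and that trusted users bypass the moderation queue.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING = "pending"   # waiting for a moderator
    VISIBLE = "visible"   # publicly visible


@dataclass
class Post:
    author: str
    body: str
    status: Status = Status.PENDING


class Forum:
    """Hypothetical forum with optional pre-moderation."""

    def __init__(self, trusted_users=(), premoderate=True):
        self.trusted_users = set(trusted_users)
        self.premoderate = premoderate
        self.posts = []

    def submit(self, author, body):
        post = Post(author, body)
        # Posts skip the queue if pre-moderation is off
        # or the author's access level is trusted.
        if not self.premoderate or author in self.trusted_users:
            post.status = Status.VISIBLE
        self.posts.append(post)
        return post

    def approve(self, post):
        # A moderator makes a pending post publicly visible.
        post.status = Status.VISIBLE

    def public_posts(self):
        return [p for p in self.posts if p.status is Status.VISIBLE]


forum = Forum(trusted_users={"alice"})
first = forum.submit("alice", "Welcome to the board")
second = forum.submit("newcomer", "Hello, first post here")
```

In this sketch `second` stays out of `forum.public_posts()` until `forum.approve(second)` is called, while `first` appears immediately.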

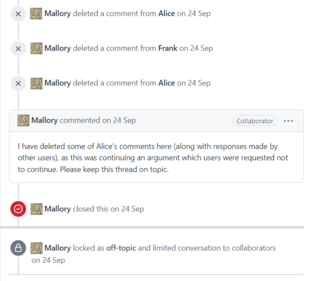

Reddit is an American social news aggregation, content rating, and forum social network. Registered users submit content to the site such as links, text posts, images, and videos, which are then voted up or down by other members. Posts are organized by subject into user-created boards called "communities" or "subreddits". Submissions with more upvotes appear towards the top of their subreddit and, if they receive enough upvotes, ultimately on the site's front page. Reddit administrators moderate the communities. Moderation is also conducted by community-specific moderators, who are not Reddit employees. It is operated by Reddit, Inc., based in San Francisco.

The Center for Countering Digital Hate (CCDH), formerly Brixton Endeavors, is a British not-for-profit NGO company with offices in London and Washington, D.C. with the stated purpose of stopping the spread of online hate speech and disinformation. It campaigns to deplatform people that it believes promote hate or misinformation, and campaigns to restrict media organisations such as The Daily Wire from advertising. CCDH is a member of the Stop Hate For Profit coalition.

X, commonly referred to by its former name Twitter, is a social media website based in the United States. With over 500 million users, it is one of the world's largest social networks and the fifth-most visited website in the world. Users can share text messages, images, and videos through short posts. X also includes direct messaging, video and audio calling, bookmarks, lists and communities, and Spaces, a social audio feature. Users can vote on context added by approved users using the Community Notes feature.

Facebook has been the subject of criticism and legal action since it was founded in 2004. Criticisms include the outsize influence Facebook has on the lives and health of its users and employees, as well as Facebook's influence on the way media, specifically news, is reported and distributed. Notable issues include Internet privacy, such as use of a widespread "like" button on third-party websites tracking users, possible indefinite records of user information, automatic facial recognition software, and its role in the workplace, including employer-employee account disclosure. The use of Facebook can have negative psychological and physiological effects that include feelings of sexual jealousy, stress, lack of attention, and social media addiction that in some cases is comparable to drug addiction.

On the Internet, a block or ban is a technical measure intended to restrict access to information or resources. Blocking and its inverse, unblocking, may be implemented by the owners of computers using software.

Odnoklassniki, abbreviated as OK or OK.ru, is a social network service used mainly in Russia and former Soviet Republics. The site was launched on March 4, 2006 by Albert Popkov and is currently owned by VK.

Facebook is a social networking service that has been gradually replacing traditional media channels since 2010. Facebook has limited moderation of the content posted to its site. Because the site indiscriminately displays material publicly posted by users, Facebook can, in effect, threaten oppressive governments. Facebook can simultaneously propagate fake news, hate speech, and misinformation, thereby undermining the credibility of online platforms and social media.

Censorship of Twitter refers to Internet censorship by governments that block access to Twitter. It also includes governmental notice-and-takedown requests, which Twitter enforces in accordance with its Terms of Service when a government or authority submits a valid removal request indicating that specific content is illegal in its jurisdiction.

Instagram is a photo and video sharing social networking service owned by Meta Platforms. It allows users to upload media that can be edited with built-in filters and other tools, organized by hashtags, and associated with a location via geographical tagging. Posts can be shared publicly or with preapproved followers, and can also be shared on other social media platforms like Facebook. Users can browse other users' content by tags and locations, view trending content, like photos, and follow other users to add their content to a personal feed. Instagram is available on iOS, Android, Windows 10, and the web. It supports 32 languages including English, Spanish, French, Korean, and Japanese.

Google+ was a social network that was owned and operated by Google until it ceased operations in 2019. The network was launched on June 28, 2011, in an attempt to challenge other social networks, linking other Google products like Google Drive, Blogger and YouTube. The service, Google's fourth foray into social networking, experienced strong growth in its initial years, although usage statistics varied, depending on how the service was defined. Three Google executives oversaw the service, which underwent substantial changes that led to a redesign in November 2015.

Shadow banning, also called stealth banning, hellbanning, ghost banning, and comment ghosting, is the practice of blocking or partially blocking a user or the user's content from some areas of an online community in such a way that the ban is not readily apparent to the user, regardless of whether the action is taken by an individual or an algorithm. For example, shadow-banned comments posted to a blog or media website would be visible to the sender, but not to other users accessing the site.
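The visibility rule in the example above can be sketched in a few lines. This is only an illustration under stated assumptions, not any platform's actual implementation: the `Comment` type, the `visible_comments` function, and the `shadow_banned` set are hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass
class Comment:
    author: str
    text: str


def visible_comments(comments, viewer, shadow_banned):
    """Return the comments that `viewer` is shown.

    A shadow-banned author's comments are hidden from everyone
    except that author, so the ban is not apparent to them.
    """
    return [
        c for c in comments
        if c.author not in shadow_banned or c.author == viewer
    ]


comments = [
    Comment("alice", "Hello"),
    Comment("bob", "Buy my product"),
    Comment("carol", "Hi alice"),
]
shadow_banned = {"bob"}  # hypothetical set of shadow-banned usernames

seen_by_bob = visible_comments(comments, "bob", shadow_banned)
seen_by_alice = visible_comments(comments, "alice", shadow_banned)
```

Here `seen_by_bob` still contains all three comments, while `seen_by_alice` omits the one written by `bob`.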

The Babylon Bee is a conservative Christian news satire website that publishes satirical articles on topics including religion, politics, current events, and public figures. It has been referred to as a Christian or conservative version of The Onion.

Gab is an American alt-tech microblogging and social networking service known for its far-right userbase. Widely described as a haven for neo-Nazis, racists, white supremacists, white nationalists, antisemites, the alt-right, supporters of Donald Trump, conservatives, right-libertarians, and believers in conspiracy theories such as QAnon, Gab has attracted users and groups who have been banned from other social media platforms and users seeking alternatives to mainstream social media platforms. Founded in 2016 and launched publicly in May 2017, Gab claims to promote free speech, individual liberty, the "free flow of information online", and Christian values. Researchers and journalists have characterized these assertions as an obfuscation of its extremist ecosystem. Antisemitism is prominent in the site's content and the company itself has engaged in antisemitic commentary. Gab CEO Andrew Torba has promoted the white genocide conspiracy theory. Gab is based in Pennsylvania.

Mastodon is free and open-source software for running self-hosted social networking services. It has microblogging features similar to Twitter, which are offered by a large number of independently run nodes, known as instances or servers, each with its own code of conduct, terms of service, privacy policy, privacy options, and content moderation policies.

Deplatforming, also known as no-platforming, is a boycott on an individual or group by removing the platforms used to share their information or ideas. The term is commonly associated with social media.

Account verification is the process of verifying that a new or existing account is owned and operated by a specified real individual or organization. A number of websites, for example social media websites, offer account verification services. Verified accounts are often visually distinguished by check mark icons or badges next to the names of individuals or organizations.

The Twitter Files are a series of releases of select internal Twitter, Inc. documents published on Twitter from December 2022 through March 2023. CEO Elon Musk gave the documents to journalists Matt Taibbi, Bari Weiss, and Lee Fang, and to authors Michael Shellenberger, David Zweig, and Alex Berenson, shortly after he acquired Twitter on October 27, 2022. Taibbi and Weiss coordinated the publication of the documents with Musk, releasing details of the files as a series of Twitter threads.

Elon Musk completed his acquisition of Twitter in October 2022; Musk acted as CEO of Twitter until June 2023 when he was succeeded by Linda Yaccarino. Twitter was then rebranded to X in July 2023. Initially during Musk's tenure, Twitter introduced a series of reforms and management changes; the company reinstated a number of previously banned accounts, reduced the workforce by approximately 80%, closed one of Twitter's three data centers, and largely eliminated the content moderation team, replacing it with the crowd-sourced fact-checking system Community Notes.