Flood fill, also called seed fill, is a flooding algorithm that determines and alters the area connected to a given node in a multi-dimensional array with some matching attribute. It is used in the "bucket" fill tool of paint programs to fill connected, similarly-colored areas with a different color, and in games such as Go and Minesweeper for determining which pieces are cleared. A variant called boundary fill uses the same algorithms but is defined as the area connected to a given node that does not have a particular attribute.

A minimum spanning tree (MST) or minimum weight spanning tree is a subset of the edges of a connected, edge-weighted undirected graph that connects all the vertices together, without any cycles and with the minimum possible total edge weight. That is, it is a spanning tree whose sum of edge weights is as small as possible. More generally, any edge-weighted undirected graph has a minimum spanning forest, which is a union of the minimum spanning trees for its connected components.

In digital image processing and computer vision, image segmentation is the process of partitioning a digital image into multiple image segments, also known as image regions or image objects. The goal of segmentation is to simplify and/or change the representation of an image into something that is more meaningful and easier to analyze. Image segmentation is typically used to locate objects and boundaries in images. More precisely, image segmentation is the process of assigning a label to every pixel in an image such that pixels with the same label share certain characteristics.

In computer vision or natural language processing, document layout analysis is the process of identifying and categorizing the regions of interest in the scanned image of a text document. A reading system requires the segmentation of text zones from non-textual ones and the arrangement in their correct reading order. Detection and labeling of the different zones as text body, illustrations, math symbols, and tables embedded in a document is called geometric layout analysis. But text zones play different logical roles inside the document and this kind of semantic labeling is the scope of the logical layout analysis.

Simple interactive object extraction (SIOX) is an algorithm for extracting foreground objects from color images and videos with very little user interaction. It has been implemented as "foreground selection" tool in the GIMP, as part of the tracer tool in Inkscape, and as function in ImageJ and Fiji (plug-in). Experimental implementations were also reported for Blender and Krita. Although the algorithm was originally designed for videos, virtually all implementations use SIOX primarily for still image segmentation. In fact, it is often said to be the current de facto standard for this task in the open-source world.

Scale-space segmentation or multi-scale segmentation is a general framework for signal and image segmentation, based on the computation of image descriptors at multiple scales of smoothing.

Connected-component labeling (CCL), connected-component analysis (CCA), blob extraction, region labeling, blob discovery, or region extraction is an algorithmic application of graph theory, where subsets of connected components are uniquely labeled based on a given heuristic. Connected-component labeling is not to be confused with segmentation.

Livewire, is a segmentation technique which allows a user to select regions of interest to be extracted quickly and accurately, using simple mouse clicks. It is based on the lowest cost path algorithm, by Edsger W. Dijkstra. Firstly convolve the image with a Sobel filter to extract edges. Each pixel of the resulting image is a vertex of the graph and has edges going to the 4 pixels around it, as up, down, left, right. The edge costs are defined based on a cost function. In 1995, Eric N. Mortensen and William A. Barrett made some extension work on livewire segmentation tool, which is known as Intelligent Scissors.

As applied in the field of computer vision, graph cut optimization can be employed to efficiently solve a wide variety of low-level computer vision problems, such as image smoothing, the stereo correspondence problem, image segmentation, object co-segmentation, and many other computer vision problems that can be formulated in terms of energy minimization. Many of these energy minimization problems can be approximated by solving a maximum flow problem in a graph. Under most formulations of such problems in computer vision, the minimum energy solution corresponds to the maximum a posteriori estimate of a solution. Although many computer vision algorithms involve cutting a graph, the term "graph cuts" is applied specifically to those models which employ a max-flow/min-cut optimization.

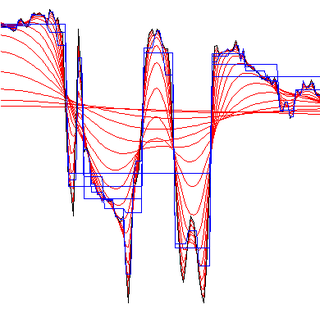

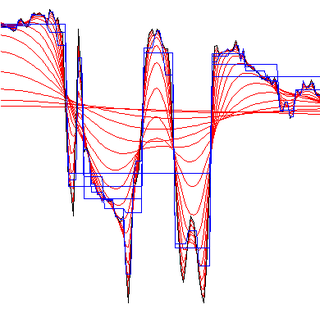

Active contour model, also called snakes, is a framework in computer vision introduced by Michael Kass, Andrew Witkin, and Demetri Terzopoulos for delineating an object outline from a possibly noisy 2D image. The snakes model is popular in computer vision, and snakes are widely used in applications like object tracking, shape recognition, segmentation, edge detection and stereo matching.

In multivariate statistics, spectral clustering techniques make use of the spectrum (eigenvalues) of the similarity matrix of the data to perform dimensionality reduction before clustering in fewer dimensions. The similarity matrix is provided as an input and consists of a quantitative assessment of the relative similarity of each pair of points in the dataset.

In computer vision, maximally stable extremal regions (MSER) are used as a method of blob detection in images. This technique was proposed by Matas et al. to find correspondences between image elements from two images with different viewpoints. This method of extracting a comprehensive number of corresponding image elements contributes to the wide-baseline matching, and it has led to better stereo matching and object recognition algorithms.

The image segmentation problem is concerned with partitioning an image into multiple regions according to some homogeneity criterion. This article is primarily concerned with graph theoretic approaches to image segmentation applying graph partitioning via minimum cut or maximum cut. Segmentation-based object categorization can be viewed as a specific case of spectral clustering applied to image segmentation.

Image segmentation strives to partition a digital image into regions of pixels with similar properties, e.g. homogeneity. The higher-level region representation simplifies image analysis tasks such as counting objects or detecting changes, because region attributes can be compared more readily than raw pixels.

The random walker algorithm is an algorithm for image segmentation. In the first description of the algorithm, a user interactively labels a small number of pixels with known labels, e.g., "object" and "background". The unlabeled pixels are each imagined to release a random walker, and the probability is computed that each pixel's random walker first arrives at a seed bearing each label, i.e., if a user places K seeds, each with a different label, then it is necessary to compute, for each pixel, the probability that a random walker leaving the pixel will first arrive at each seed. These probabilities may be determined analytically by solving a system of linear equations. After computing these probabilities for each pixel, the pixel is assigned to the label for which it is most likely to send a random walker. The image is modeled as a graph, in which each pixel corresponds to a node which is connected to neighboring pixels by edges, and the edges are weighted to reflect the similarity between the pixels. Therefore, the random walk occurs on the weighted graph.

In applied mathematics, lambda-connectedness deals with partial connectivity for a discrete space.

In the practice of digital image processing Alexandre X. Falcao, Jorge Stolfi, and Roberto de Alencar Lotufo have created and proven that the Image Foresting Transform (IFT) can be used as a time saver in processing 2-D, 3-D images, and moving images.

Graph cut optimization is a combinatorial optimization method applicable to a family of functions of discrete variables, named after the concept of cut in the theory of flow networks. Thanks to the max-flow min-cut theorem, determining the minimum cut over a graph representing a flow network is equivalent to computing the maximum flow over the network. Given a pseudo-Boolean function , if it is possible to construct a flow network with positive weights such that

Semi-global matching (SGM) is a computer vision algorithm for the estimation of a dense disparity map from a rectified stereo image pair, introduced in 2005 by Heiko Hirschmüller while working at the German Aerospace Center. Given its predictable run time, its favourable trade-off between quality of the results and computing time, and its suitability for fast parallel implementation in ASIC or FPGA, it has encountered wide adoption in real-time stereo vision applications such as robotics and advanced driver assistance systems.

Clément Farabet is a computer scientist and AI expert known for his contributions to the field of deep learning. He served as a research scientist at the New York University. He serves as the Vice President of Research at Google DeepMind and previously served as the VP of AI Infrastructure at NVIDIA.