In calculus, the chain rule is a formula that expresses the derivative of the composition of two differentiable functions f and g in terms of the derivatives of f and g. More precisely, if $h = f \circ g$ is the function such that $h(x) = f(g(x))$ for every x, then the chain rule is, in Lagrange's notation,

$$h'(x) = f'(g(x))\,g'(x).$$
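The chain rule, $h'(x) = f'(g(x))\,g'(x)$ for $h(x) = f(g(x))$, can be checked numerically. The sketch below is only an illustration: the choices $f(u) = \sin u$ and $g(x) = x^2$, the evaluation point, and the helper `central_difference` are assumptions made for the example.

```python
import math

def central_difference(func, x, step=1e-6):
    """Approximate func'(x) with a symmetric difference quotient."""
    return (func(x + step) - func(x - step)) / (2 * step)

# Illustrative choices (assumptions, not from the text):
# outer function f(u) = sin(u), inner function g(x) = x**2.
def f(u):
    return math.sin(u)

def f_prime(u):
    return math.cos(u)

def g(x):
    return x ** 2

def g_prime(x):
    return 2 * x

def h(x):
    """The composition h = f o g."""
    return f(g(x))

def chain_rule_derivative(x):
    """h'(x) = f'(g(x)) * g'(x), as given by the chain rule."""
    return f_prime(g(x)) * g_prime(x)

x0 = 1.3
numeric = central_difference(h, x0)   # derivative estimated from h alone
exact = chain_rule_derivative(x0)     # derivative predicted by the chain rule
print(numeric, exact)
```

The two printed values should agree to several decimal places; the small gap that remains comes from the finite step size.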

In mathematics, the derivative quantifies the sensitivity to change of a function's output with respect to its input. Derivatives are a fundamental tool of calculus. For example, the derivative of the position of a moving object with respect to time is the object's velocity: this measures how quickly the position of the object changes when time advances.
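The velocity example can be made concrete with difference quotients. The sketch below assumes a hypothetical position function $s(t) = 4.9\,t^2$ (an object in free fall, in metres and seconds), whose exact velocity is $9.8\,t$; both the function and the sample time are illustrative choices.

```python
def position(t):
    """Hypothetical position in metres after t seconds of free fall."""
    return 4.9 * t ** 2

def average_velocity(t, dt):
    """Change in position divided by the elapsed time dt."""
    return (position(t + dt) - position(t)) / dt

t0 = 2.0
exact_velocity = 9.8 * t0  # derivative of 4.9 * t**2 evaluated at t0

# Shrinking the time interval drives the average velocity
# toward the instantaneous velocity, i.e. the derivative.
estimates = [average_velocity(t0, dt) for dt in (1e-1, 1e-2, 1e-3, 1e-4)]
for estimate in estimates:
    print(estimate, abs(estimate - exact_velocity))
```

Each estimate is closer to the exact velocity than the one before it, which is the limiting process that defines the derivative.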

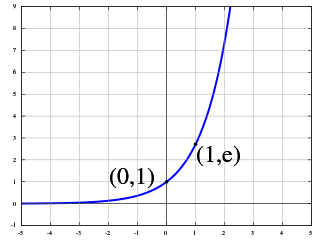

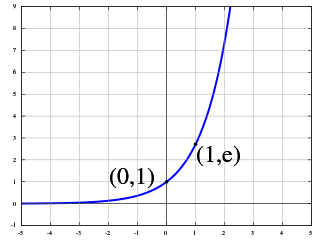

The exponential function is a mathematical function denoted by $f(x) = \exp(x)$ or $e^x$. Unless otherwise specified, the term generally refers to the positive-valued function of a real variable, although it can be extended to the complex numbers or generalized to other mathematical objects like matrices or Lie algebras. The exponential function originated from the operation of taking powers of a number, but various modern definitions allow it to be rigorously extended to all real arguments $x$, including irrational numbers. Its ubiquitous occurrence in pure and applied mathematics led mathematician Walter Rudin to consider the exponential function to be "the most important function in mathematics".

The natural logarithm of a number is its logarithm to the base of the mathematical constant e, which is an irrational and transcendental number approximately equal to 2.718281828459. The natural logarithm of x is generally written as $\ln x$, $\log_e x$, or sometimes, if the base e is implicit, simply $\log x$. Parentheses are sometimes added for clarity, giving $\ln(x)$, $\log_e(x)$, or $\log(x)$. This is done particularly when the argument to the logarithm is not a single symbol, so as to prevent ambiguity.
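As a small illustration of "logarithm to the base e", the sketch below computes the natural logarithm of a sample value in three equivalent ways using Python's standard `math` module. The sample value 10 is an arbitrary choice.

```python
import math

x = 10.0  # arbitrary sample value

# Three equivalent ways to obtain the natural logarithm of x.
ln_direct = math.log(x)                                  # base e is the default
ln_explicit_base = math.log(x, math.e)                   # base e spelled out
ln_change_of_base = math.log10(x) / math.log10(math.e)   # change-of-base formula

# The natural logarithm undoes the exponential function.
round_trip = math.exp(ln_direct)

print(ln_direct, ln_explicit_base, ln_change_of_base, round_trip)
```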

In calculus, Taylor's theorem gives an approximation of a $k$-times differentiable function around a given point by a polynomial of degree $k$, called the $k$-th-order Taylor polynomial. For a smooth function, the Taylor polynomial is the truncation at the order $k$ of the Taylor series of the function. The first-order Taylor polynomial is the linear approximation of the function, and the second-order Taylor polynomial is often referred to as the quadratic approximation. There are several versions of Taylor's theorem, some giving explicit estimates of the approximation error of the function by its Taylor polynomial.
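To illustrate a Taylor polynomial together with an explicit error estimate, the sketch below uses the exponential function expanded around 0. The choice of function, the expansion point, and the helper names `taylor_exp` and `lagrange_bound` are assumptions for the example; the bound used is the Lagrange form of the remainder, one of the several versions mentioned above.

```python
import math

def taylor_exp(x, order):
    """Taylor polynomial of exp around 0, truncated at the given order."""
    return sum(x ** j / math.factorial(j) for j in range(order + 1))

def lagrange_bound(x, order):
    """Lagrange remainder bound for exp around 0.

    Every derivative of exp is exp itself, and on the interval between
    0 and x it is at most exp(max(x, 0)).
    """
    largest_derivative = math.exp(max(x, 0.0))
    return largest_derivative * abs(x) ** (order + 1) / math.factorial(order + 1)

x = 0.5
for order in (1, 2, 4):
    approximation = taylor_exp(x, order)
    error = abs(math.exp(x) - approximation)
    print(order, approximation, error, lagrange_bound(x, order))
```

For each order the actual error stays below the bound, and both shrink as the order grows.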

In mathematics, exponentiation is an operation involving two numbers, the base and the exponent or power. Exponentiation is written as $b^n$, where b is the base and n is the power; this is pronounced as "b (raised) to the n". When n is a positive integer, exponentiation corresponds to repeated multiplication of the base: that is, $b^n$ is the product of multiplying n bases:

$$b^n = \underbrace{b \times b \times \dots \times b}_{n \text{ times}}.$$
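For a positive integer exponent, the repeated-multiplication definition translates directly into a loop. The function name `power` is a hypothetical helper for this sketch; in practice Python's built-in `**` operator does the same job.

```python
def power(base, exponent):
    """Compute base**exponent by multiplying `exponent` copies of base.

    Only positive integer exponents are handled, matching the
    repeated-multiplication definition.
    """
    if not isinstance(exponent, int) or exponent < 1:
        raise ValueError("exponent must be a positive integer")
    result = 1
    for _ in range(exponent):
        result *= base
    return result

print(power(2, 10))   # product of ten 2s
print(power(3, 4))    # product of four 3s
```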

In calculus, the quotient rule is a method of finding the derivative of a function that is the ratio of two differentiable functions. Let $h(x) = \frac{f(x)}{g(x)}$, where both f and g are differentiable and $g(x) \neq 0$. The quotient rule states that the derivative of h(x) is

$$h'(x) = \frac{f'(x)\,g(x) - f(x)\,g'(x)}{g(x)^2}.$$
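The quotient rule, $h'(x) = \bigl(f'(x)g(x) - f(x)g'(x)\bigr)/g(x)^2$ for $h = f/g$, can be compared against a numerical derivative. In this sketch the numerator $f(x) = \sin x$ and the denominator $g(x) = x^2 + 1$ (which is never zero) are illustrative assumptions.

```python
import math

def central_difference(func, x, step=1e-6):
    """Approximate func'(x) with a symmetric difference quotient."""
    return (func(x + step) - func(x - step)) / (2 * step)

# Illustrative choices (assumptions): f(x) = sin(x), g(x) = x**2 + 1.
def f(x):
    return math.sin(x)

def f_prime(x):
    return math.cos(x)

def g(x):
    return x ** 2 + 1

def g_prime(x):
    return 2 * x

def h(x):
    """The ratio h = f / g."""
    return f(x) / g(x)

def quotient_rule_derivative(x):
    """h'(x) = (f'(x) g(x) - f(x) g'(x)) / g(x)**2."""
    return (f_prime(x) * g(x) - f(x) * g_prime(x)) / g(x) ** 2

x0 = 0.7
numeric = central_difference(h, x0)
exact = quotient_rule_derivative(x0)
print(numeric, exact)
```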

In mathematics, a multiplicative inverse or reciprocal for a number x, denoted by 1/x or $x^{-1}$, is a number which when multiplied by x yields the multiplicative identity, 1. The multiplicative inverse of a fraction a/b is b/a. For the multiplicative inverse of a real number, divide 1 by the number. For example, the reciprocal of 5 is one fifth (1/5 or 0.2), and the reciprocal of 0.25 is 1 divided by 0.25, or 4. The reciprocal function, the function f(x) that maps x to 1/x, is one of the simplest examples of a function which is its own inverse (an involution).
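The examples above can be reproduced exactly with rational arithmetic. This sketch uses Python's standard `fractions.Fraction` type; the helper name `reciprocal` is an assumption for the example.

```python
from fractions import Fraction

def reciprocal(x):
    """Return the multiplicative inverse 1/x of a nonzero rational x."""
    if x == 0:
        raise ZeroDivisionError("0 has no multiplicative inverse")
    return 1 / Fraction(x)

five = Fraction(5)
quarter = Fraction(1, 4)      # 0.25 as an exact fraction
three_sevenths = Fraction(3, 7)

print(reciprocal(five))            # one fifth
print(reciprocal(quarter))         # 1 divided by 0.25
print(reciprocal(three_sevenths))  # a/b becomes b/a

# Applying the reciprocal twice returns the original number (involution).
print(reciprocal(reciprocal(three_sevenths)) == three_sevenths)
```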

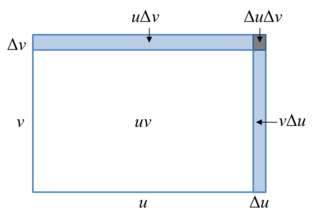

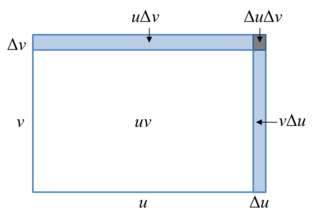

In calculus, the product rule is a formula used to find the derivatives of products of two or more functions. For two functions, it may be stated in Lagrange's notation as

$$(f \cdot g)' = f' \cdot g + f \cdot g'.$$
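For polynomials, the product rule (the derivative of a product of two functions is the first derivative times the second function plus the first function times the second derivative) can be verified exactly with integer arithmetic. In the sketch below a polynomial is stored as a list of coefficients, lowest degree first; this representation and the helper names are assumptions made for the example.

```python
def poly_mul(p, q):
    """Multiply two polynomials given as coefficient lists."""
    result = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            result[i + j] += a * b
    return result

def poly_add(p, q):
    """Add two polynomials, padding the shorter one with zeros."""
    length = max(len(p), len(q))
    p = p + [0] * (length - len(p))
    q = q + [0] * (length - len(q))
    return [a + b for a, b in zip(p, q)]

def poly_derivative(p):
    """Differentiate term by term: c * x**k becomes k * c * x**(k - 1)."""
    return [k * c for k, c in enumerate(p)][1:] or [0]

u = [1, 2]       # 1 + 2x
v = [0, 0, 3]    # 3x^2

# Left-hand side: differentiate the product.
lhs = poly_derivative(poly_mul(u, v))

# Right-hand side: u' * v + u * v'.
rhs = poly_add(poly_mul(poly_derivative(u), v),
               poly_mul(u, poly_derivative(v)))

print(lhs, rhs)
```

Both sides come out as the same coefficient list, corresponding to $6x + 18x^2$.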

In mathematics, the integral test for convergence is a method used to test infinite series of monotonic terms for convergence. It was developed by Colin Maclaurin and Augustin-Louis Cauchy and is sometimes known as the Maclaurin–Cauchy test.
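The idea behind the integral test is that, for a positive decreasing function $f$, partial sums are trapped between integrals: $\int_1^{N+1} f(x)\,dx \le \sum_{n=1}^{N} f(n) \le f(1) + \int_1^{N} f(x)\,dx$. The sketch below checks these bounds for two standard examples chosen for illustration, $1/x^2$ (convergent) and $1/x$ (divergent), using their known antiderivatives.

```python
import math

def partial_sum(f, n_terms):
    """Sum f(1) + f(2) + ... + f(n_terms)."""
    return sum(f(n) for n in range(1, n_terms + 1))

N = 1000

# Convergent example: f(x) = 1/x**2, with integral from 1 to M equal to 1 - 1/M.
def inverse_square(x):
    return 1.0 / x ** 2

square_sum = partial_sum(inverse_square, N)
square_lower = 1 - 1 / (N + 1)
square_upper = inverse_square(1) + (1 - 1 / N)

# Divergent example: f(x) = 1/x, with integral from 1 to M equal to ln(M).
def inverse(x):
    return 1.0 / x

harmonic_sum = partial_sum(inverse, N)
harmonic_lower = math.log(N + 1)
harmonic_upper = 1.0 + math.log(N)

print(square_lower, square_sum, square_upper)
print(harmonic_lower, harmonic_sum, harmonic_upper)
```

The bounds for $1/x^2$ stay below 2 however large `N` is, so the series converges; the lower bound for $1/x$ grows like $\ln N$ without limit, so the harmonic series diverges.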

In mathematics, the exponential function can be characterized in many ways. The following characterizations (definitions) are most common. This article discusses why each characterization makes sense, and why the characterizations are independent of and equivalent to each other. As a special case of these considerations, it will be demonstrated that the three most common definitions given for the mathematical constant e are equivalent to each other.
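Two of the commonly cited characterizations are the limit $\lim_{n\to\infty}(1 + x/n)^n$ and the power series $\sum_{j \ge 0} x^j/j!$. The sketch below evaluates both numerically and compares them with the library value `math.exp`. The helper names and the truncation parameters are assumptions for the example, and a numerical agreement is of course not a proof of equivalence.

```python
import math

def exp_limit(x, n):
    """Approximate exp(x) by the compound-interest expression (1 + x/n)**n."""
    return (1 + x / n) ** n

def exp_series(x, terms):
    """Approximate exp(x) by the first `terms` terms of its power series."""
    return sum(x ** j / math.factorial(j) for j in range(terms))

x = 1.0  # exp(1) is the constant e
via_limit = exp_limit(x, 10 ** 6)
via_series = exp_series(x, 20)

print(via_limit, via_series, math.exp(x))
```

The series converges much faster than the limit: twenty terms already match `math.e` to about machine precision, while the limit with a million steps is accurate to only a few parts in a million.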

In mathematics, the total derivative of a function f at a point is the best linear approximation near this point of the function with respect to its arguments. Unlike partial derivatives, the total derivative approximates the function with respect to all of its arguments, not just a single one. In many situations, this is the same as considering all partial derivatives simultaneously. The term "total derivative" is primarily used when f is a function of several variables, because when f is a function of a single variable, the total derivative is the same as the ordinary derivative of the function.
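"Best linear approximation" means that the error of the approximation shrinks faster than the size of the displacement. The sketch below illustrates this for a hypothetical function of two variables, $f(x, y) = x^2 y + \sin y$, whose total derivative at a point acts on a displacement $(h_x, h_y)$ through the partial derivatives $2xy$ and $x^2 + \cos y$. The function, the base point, and the direction are all assumptions for the example.

```python
import math

def f(x, y):
    """Hypothetical example function of two real variables."""
    return x ** 2 * y + math.sin(y)

def partials(x, y):
    """Partial derivatives of f with respect to x and y."""
    return (2 * x * y, x ** 2 + math.cos(y))

def linear_approximation(x, y, hx, hy):
    """f at the base point plus the total derivative applied to (hx, hy)."""
    fx, fy = partials(x, y)
    return f(x, y) + fx * hx + fy * hy

base = (1.0, 2.0)
direction = (0.6, 0.8)  # unit vector

# Error of the linear approximation divided by the displacement length.
ratios = []
for size in (1e-1, 1e-2, 1e-3):
    hx, hy = size * direction[0], size * direction[1]
    error = abs(f(base[0] + hx, base[1] + hy)
                - linear_approximation(base[0], base[1], hx, hy))
    ratios.append(error / size)
    print(size, error, error / size)
```

The ratio of error to displacement length itself tends to zero, which is the defining property of the total derivative.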

In mathematical analysis, and applications in geometry, applied mathematics, engineering, and natural sciences, a function of a real variable is a function whose domain is the real numbers $\mathbb{R}$, or a subset of $\mathbb{R}$ that contains an interval of positive length. Most real functions that are considered and studied are differentiable in some interval. The most widely considered such functions are the real functions, which are the real-valued functions of a real variable, that is, the functions of a real variable whose codomain is the set of real numbers.

In mathematics, the derivative is a fundamental construction of differential calculus and admits many possible generalizations within the fields of mathematical analysis, combinatorics, algebra, geometry, etc.

This is a summary of differentiation rules, that is, rules for computing the derivative of a function in calculus.

A product integral is any product-based counterpart of the usual sum-based integral of calculus. The first product integral was developed by the mathematician Vito Volterra in 1887 to solve systems of linear differential equations. Other examples of product integrals are the geometric integral, the bigeometric integral, and some other integrals of non-Newtonian calculus.

In mathematics, quaternionic analysis is the study of functions with quaternions as the domain and/or range. Such functions can be called functions of a quaternion variable, by analogy with functions of a real variable or a complex variable.

In mathematical analysis and its applications, a function of several real variables or real multivariate function is a function with more than one argument, with all arguments being real variables. This concept extends the idea of a function of a real variable to several variables. The "input" variables take real values, while the "output", also called the "value of the function", may be real or complex. However, the study of the complex-valued functions may be easily reduced to the study of the real-valued functions, by considering the real and imaginary parts of the complex function; therefore, unless explicitly specified, only real-valued functions will be considered in this article.

In mathematics, calculus on Euclidean space is a generalization of calculus of functions in one or several variables to calculus of functions on Euclidean space as well as a finite-dimensional real vector space. This calculus is also known as advanced calculus, especially in the United States. It is similar to multivariable calculus but is somewhat more sophisticated in that it uses linear algebra more extensively and covers some concepts from differential geometry such as differential forms and Stokes' formula in terms of differential forms. This extensive use of linear algebra also allows a natural generalization of multivariable calculus to calculus on Banach spaces or topological vector spaces.