In calculus, the chain rule is a formula that expresses the derivative of the composition of two differentiable functions $f$ and $g$ in terms of the derivatives of $f$ and $g$. More precisely, if $h = f \circ g$ is the function such that $h(x) = f(g(x))$ for every $x$, then the chain rule is, in Lagrange's notation, $h'(x) = f'(g(x))\,g'(x)$.
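As a rough numerical illustration of the chain rule, the sketch below compares the derivative of a composition with the product of the derivatives of its parts. The functions `f` and `g`, the helper `derivative`, and the sample point `x0` are illustrative choices, not taken from the text, and the derivatives are approximated by central differences rather than computed exactly.

```python
import math

def derivative(fn, x, eps=1e-6):
    """Central-difference approximation of fn'(x) (an approximation, not exact)."""
    return (fn(x + eps) - fn(x - eps)) / (2 * eps)

f = math.sin                 # outer function (illustrative choice)
g = lambda x: x ** 2         # inner function (illustrative choice)
comp = lambda x: f(g(x))     # the composition f(g(x))

x0 = 1.3
lhs = derivative(comp, x0)                        # derivative of the composition
rhs = derivative(f, g(x0)) * derivative(g, x0)    # f'(g(x0)) * g'(x0)
exact = math.cos(x0 ** 2) * 2 * x0                # closed form for this example
```

Up to the error of the finite-difference approximation, `lhs`, `rhs`, and `exact` should agree.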

In the field of complex analysis in mathematics, the Cauchy–Riemann equations, named after Augustin Cauchy and Bernhard Riemann, consist of a system of two partial differential equations which form a necessary and sufficient condition for a complex function of a complex variable to be complex differentiable.
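The two equations are usually written, for $f = u + iv$, as $u_x = v_y$ and $u_y = -v_x$. A minimal sketch of checking them numerically at one point follows; the helper names `partials` and `satisfies_cauchy_riemann`, the tolerance, and the test functions are assumptions made for illustration, and a pointwise finite-difference check is only suggestive, not a proof of differentiability.

```python
def partials(f, x, y, eps=1e-6):
    """Return (u_x, u_y, v_x, v_y) for f = u + i*v at the point x + i*y,
    using central differences."""
    fx = (f(complex(x + eps, y)) - f(complex(x - eps, y))) / (2 * eps)
    fy = (f(complex(x, y + eps)) - f(complex(x, y - eps))) / (2 * eps)
    return fx.real, fy.real, fx.imag, fy.imag

def satisfies_cauchy_riemann(f, x, y, tol=1e-6):
    """Check u_x = v_y and u_y = -v_x at one point, up to a tolerance."""
    u_x, u_y, v_x, v_y = partials(f, x, y)
    return abs(u_x - v_y) < tol and abs(u_y + v_x) < tol

# z -> z^2 passes the check; z -> conjugate(z) does not.
square_ok = satisfies_cauchy_riemann(lambda z: z * z, 0.7, -0.4)
conjugate_ok = satisfies_cauchy_riemann(lambda z: z.conjugate(), 0.7, -0.4)
```

Here `square_ok` is expected to be true and `conjugate_ok` false, matching the fact that complex conjugation is not complex differentiable.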

In mathematics, the derivative quantifies how sensitive a function's output is to changes in its input. Derivatives are a fundamental tool of calculus. For example, the derivative of the position of a moving object with respect to time is the object's velocity: this measures how quickly the position of the object changes when time advances.

In vector calculus, the gradient of a scalar-valued differentiable function $f$ of several variables is the vector field $\nabla f$ whose value at a point $p$ gives the direction and the rate of fastest increase. The gradient transforms like a vector under change of basis of the space of variables of $f$. If the gradient of a function is non-zero at a point $p$, the direction of the gradient is the direction in which the function increases most quickly from $p$, and the magnitude of the gradient is the rate of increase in that direction, the greatest absolute directional derivative. Further, a point where the gradient is the zero vector is known as a stationary point. The gradient thus plays a fundamental role in optimization theory, where it is used to maximize a function by gradient ascent. In coordinate-free terms, the gradient of a function $f(\mathbf{r})$ may be defined by $df = \nabla f \cdot d\mathbf{r}$, where $df$ is the total infinitesimal change in $f$ for an infinitesimal displacement $d\mathbf{r}$.

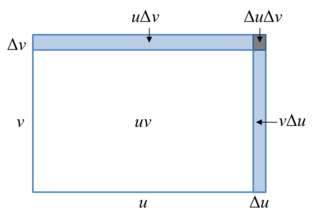

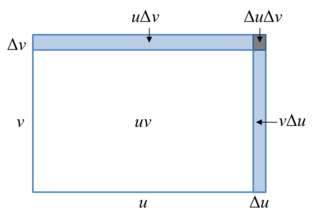
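The use of the gradient to maximize a function by gradient ascent, mentioned above, can be sketched as follows. This is a minimal illustration under stated assumptions: the gradient is approximated by central differences, and the step size, iteration count, and the concave test function `f` are hypothetical choices rather than anything prescribed by the text.

```python
def numerical_gradient(f, point, eps=1e-6):
    """Approximate the gradient of f at `point` by central differences."""
    grad = []
    for i in range(len(point)):
        forward = list(point)
        backward = list(point)
        forward[i] += eps
        backward[i] -= eps
        grad.append((f(forward) - f(backward)) / (2 * eps))
    return grad

def gradient_ascent(f, start, step=0.1, iterations=200):
    """Repeatedly take a small step in the direction of the gradient."""
    point = list(start)
    for _ in range(iterations):
        grad = numerical_gradient(f, point)
        point = [p + step * g for p, g in zip(point, grad)]
    return point

# Hypothetical concave test function with its maximum at (1, -2).
def f(p):
    x, y = p
    return -(x - 1) ** 2 - (y + 2) ** 2

maximum = gradient_ascent(f, [0.0, 0.0])
```

For this test function the iterates approach the stationary point (1, -2), where the gradient vanishes. A fixed step size is the simplest choice, and for other functions it may need tuning to converge.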

In calculus, the product rule is a formula used to find the derivatives of products of two or more functions. For two functions $u$ and $v$, it may be stated in Lagrange's notation as $(u \cdot v)' = u' \cdot v + u \cdot v'$.

In mathematics, a differential operator is an operator defined as a function of the differentiation operator. It is helpful, as a matter of notation first, to consider differentiation as an abstract operation that accepts a function and returns another function.
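The idea of differentiation as an operation that accepts a function and returns another function maps naturally onto a higher-order function. The sketch below is only an illustration: the name `D` and the step `eps` are assumptions, and the returned function is a finite-difference approximation of the derivative, not a symbolic one.

```python
import math

def D(fn, eps=1e-4):
    """Differentiation as an operator: takes a function, returns a function
    that approximates its derivative by central differences."""
    def derivative(x):
        return (fn(x + eps) - fn(x - eps)) / (2 * eps)
    return derivative

# Because D returns a function, it can be applied repeatedly:
# D(D(f)) approximates the second derivative of f.
d_sin = D(math.sin)
d2_sin = D(D(math.sin))
```

With this sketch, `d_sin(0.5)` should be close to `math.cos(0.5)` and `d2_sin(0.5)` close to `-math.sin(0.5)`, with the approximation degrading as the operator is nested further.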

In differential geometry, a one-form on a differentiable manifold is a smooth section of the cotangent bundle. Equivalently, a one-form on a manifold $M$ is a smooth mapping of the total space of the tangent bundle of $M$ to $\mathbb{R}$ whose restriction to each fibre is a linear functional on the tangent space. Symbolically, $\alpha : TM \to \mathbb{R}$, with $\alpha_x = \alpha|_{T_xM} : T_xM \to \mathbb{R}$, where each $\alpha_x$ is linear.

In mathematics, the Legendre transformation, first introduced by Adrien-Marie Legendre in 1787 when studying the minimal surface problem, is an involutive transformation on real-valued functions that are convex on a real variable. Specifically, if a real-valued multivariable function is convex on one of its independent real variables, then the Legendre transform with respect to this variable is applicable to the function. In physical problems, it is used to convert functions of one quantity into functions of the conjugate quantity. In this way, it is commonly used in classical mechanics to derive the Hamiltonian formalism out of the Lagrangian formalism and in thermodynamics to derive the thermodynamic potentials, as well as in the solution of differential equations of several variables.

In multivariable calculus, the implicit function theorem is a tool that allows relations to be converted to functions of several real variables. It does so by representing the relation as the graph of a function. There may not be a single function whose graph can represent the entire relation, but there may be such a function on a restriction of the domain of the relation. The implicit function theorem gives a sufficient condition to ensure that there is such a function.
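A standard illustration is the unit circle $x^2 + y^2 = 1$, which is not the graph of a single function but is locally the graph of one near any point where $\partial F / \partial y \neq 0$. The sketch below assumes that usual form of the sufficient condition and the usual slope formula $-F_x / F_y$. The helper `implicit_y`, the base point, and the use of Newton's method to evaluate the local function are illustrative choices.

```python
def F(x, y):
    """Relation F(x, y) = 0 describing the unit circle."""
    return x ** 2 + y ** 2 - 1.0

def dF_dx(x, y):
    return 2.0 * x

def dF_dy(x, y):
    return 2.0 * y

def implicit_y(x, y_guess, iterations=20):
    """Evaluate the locally defined function y(x) by solving F(x, y) = 0
    for y with Newton's method, starting from y_guess."""
    y = y_guess
    for _ in range(iterations):
        y -= F(x, y) / dF_dy(x, y)
    return y

x0, y0 = 0.6, 0.8                       # a point on the circle with dF/dy != 0
y_near = implicit_y(0.61, y0)           # value of the local function near x0
slope = -dF_dx(x0, y0) / dF_dy(x0, y0)  # derivative of the local function at x0
```

Near $(0.6, 0.8)$ the relation is represented by the upper branch $y = \sqrt{1 - x^2}$. At the points $(\pm 1, 0)$ the partial derivative in $y$ vanishes and this construction breaks down.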

Multi-index notation is a mathematical notation that simplifies formulas used in multivariable calculus, partial differential equations and the theory of distributions, by generalising the concept of an integer index to an ordered tuple of indices.
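The basic conventions can be sketched in a few lines, representing a multi-index as a tuple of non-negative integers. The function names `order`, `multi_factorial`, and `power` are hypothetical, chosen here for illustration; the definitions they implement ($|\alpha|$, $\alpha!$, and $x^\alpha$) are the standard ones.

```python
from math import factorial, prod

def order(alpha):
    """|alpha| = alpha_1 + ... + alpha_n."""
    return sum(alpha)

def multi_factorial(alpha):
    """alpha! = alpha_1! * ... * alpha_n!."""
    return prod(factorial(a) for a in alpha)

def power(x, alpha):
    """x^alpha = x_1^alpha_1 * ... * x_n^alpha_n."""
    return prod(xi ** ai for xi, ai in zip(x, alpha))

alpha = (2, 0, 1)        # a multi-index with n = 3
x = (3.0, 5.0, 2.0)      # a point in R^3
```

For this `alpha` and `x`, the order is 3, the factorial is 2, and `power(x, alpha)` is $3^2 \cdot 5^0 \cdot 2^1 = 18$.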

In mathematics, differential refers to several related notions derived from the early days of calculus, put on a rigorous footing, such as infinitesimal differences and the derivatives of functions.

In mathematical analysis, and applications in geometry, applied mathematics, engineering, and natural sciences, a function of a real variable is a function whose domain is the real numbers $\mathbb{R}$, or a subset of $\mathbb{R}$ that contains an interval of positive length. Most real functions that are considered and studied are differentiable in some interval. The most widely considered such functions are the real functions, which are the real-valued functions of a real variable, that is, the functions of a real variable whose codomain is the set of real numbers.

In mathematics, the derivative is a fundamental construction of differential calculus and admits many possible generalizations within the fields of mathematical analysis, combinatorics, algebra, geometry, etc.

In mathematics, the Gateaux differential or Gateaux derivative is a generalization of the concept of directional derivative in differential calculus. Named after René Gateaux, a French mathematician who died at age 25 in World War I, it is defined for functions between locally convex topological vector spaces such as Banach spaces. Like the Fréchet derivative on a Banach space, the Gateaux differential is often used to formalize the functional derivative commonly used in the calculus of variations and physics.

In mathematics, the Fréchet derivative is a derivative defined on normed spaces. Named after Maurice Fréchet, it is commonly used to generalize the derivative of a real-valued function of a single real variable to the case of a vector-valued function of multiple real variables, and to define the functional derivative used widely in the calculus of variations.

In differential calculus, there is no single uniform notation for differentiation. Instead, various notations for the derivative of a function or variable have been proposed by various mathematicians. The usefulness of each notation varies with the context, and it is sometimes advantageous to use more than one notation in a given context. The most common notations for differentiation are listed below.

This is a summary of differentiation rules, that is, rules for computing the derivative of a function in calculus.

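In calculus, the differential represents the principal part of the change in a function $y = f(x)$ with respect to changes in the independent variable. The differential $dy$ is defined by $dy = f'(x)\,dx$, where $f'(x)$ is the derivative of $f$ with respect to $x$ and $dx$ is an additional real variable.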
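Assuming the standard definition $dy = f'(x)\,dx$, the sense in which the differential is the "principal part" of the change can be seen in a small numerical sketch. The function `f`, its derivative `f_prime`, and the values of `x` and `dx` are illustrative choices.

```python
def f(x):
    return x ** 3

def f_prime(x):
    return 3 * x ** 2

x, dx = 2.0, 0.01
dy = f_prime(x) * dx            # differential: the linear (principal) part
delta_y = f(x + dx) - f(x)      # actual change in f
remainder = delta_y - dy        # what the differential leaves out
```

Here `dy` is 0.12 while the actual change is about 0.120601, so the remainder is of order `dx ** 2` and becomes negligible relative to `dy` as `dx` shrinks.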

In mathematical analysis and its applications, a function of several real variables or real multivariate function is a function with more than one argument, with all arguments being real variables. This concept extends the idea of a function of a real variable to several variables. The "input" variables take real values, while the "output", also called the "value of the function", may be real or complex. However, the study of the complex-valued functions may be easily reduced to the study of the real-valued functions, by considering the real and imaginary parts of the complex function; therefore, unless explicitly specified, only real-valued functions will be considered in this article.

In mathematics, calculus on Euclidean space is a generalization of calculus of functions in one or several variables to calculus of functions on Euclidean space as well as on a finite-dimensional real vector space. This calculus is also known as advanced calculus, especially in the United States. It is similar to multivariable calculus but is somewhat more sophisticated in that it uses linear algebra more extensively and covers some concepts from differential geometry such as differential forms and Stokes' formula in terms of differential forms. This extensive use of linear algebra also allows a natural generalization of multivariable calculus to calculus on Banach spaces or topological vector spaces.